Avatar robot – an AMR for telepresence experiences

Creating professional service robots that deliver business value requires finding common ground between many technological worlds, which can be a challenge. These include hardware and robotic components, embedded systems, computer vision, cloud services, and end-user applications. We built the Avatar robot as part of our internal R&D to prove our robotics expertise.

What – or rather “who” – is the Avatar robot?

Avatar is an autonomous mobile robot (abbreviated to AMR) that moves in indoor spaces. The device recognises the surroundings, moves freely around the specified area, and targets and identifies nearby objects. The main idea of telepresence robots is to enable users to interact remotely with a distant environment.

Avatar robot use case – remote showroom tour

Spyrosoft’s Szczecin office has a showroom with demos of robotics and HMI initiatives. Clients can view our R&D devices remotely through Avatar without actual human presence in the room.

The user picks an available time slot in the tour calendar. Then, they launch a demo remotely via a mobile application. The Avatar robot powers up, greets the user, and gets to work. First, the robot scans the room and creates a map to move around freely, avoiding obstacles.

The device navigates using a wall-follower algorithm. We chose this method deliberately because the devices in the showroom stand against the walls. Sometimes, however, a demo is moved to another corner or is missing from the room, for example because it is being presented at an industry event. Avatar therefore does not rely on a fixed map; it surveys its surroundings at the start of every session.
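The wall-follower idea can be sketched as a small control step. The Python example below is only an illustration, not Avatar's actual implementation. It assumes the robot follows the wall on its right, that `front` and `right` are lidar ranges in metres, and that a positive angular velocity turns the robot left (the usual ROS convention). The function name and every parameter value are hypothetical.

```python
from typing import Tuple


def wall_follow_step(
    front: float,
    right: float,
    target: float = 0.5,       # desired distance to the right-hand wall (m), assumed
    front_clear: float = 0.6,  # minimum free space ahead before turning (m), assumed
    cruise: float = 0.2,       # forward speed while following (m/s), assumed
    kp: float = 1.5,           # proportional gain, assumed
    max_turn: float = 1.0,     # angular speed limit (rad/s), assumed
) -> Tuple[float, float]:
    """Return (linear m/s, angular rad/s) for one control tick."""
    if front < front_clear:
        # Obstacle or corner ahead: stop and rotate left, away from the wall.
        return 0.0, max_turn
    # Positive error: too close to the wall, so steer left (positive angular).
    # Negative error: too far (or wall lost), so steer right to find it again.
    error = target - right
    angular = max(-max_turn, min(max_turn, kp * error))
    return cruise, angular


if __name__ == "__main__":
    # A few hand-made range readings: (front, right).
    for front, right in [(2.0, 0.5), (2.0, 0.3), (2.0, 0.9), (0.2, 0.5)]:
        print(front, right, wall_follow_step(front, right))
```

In a ROS2 node, the returned pair would typically be copied into the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message on each lidar update.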

The client follows the robot in the remote location through the camera's video stream. In parallel, the computer vision functionality starts. The robot approaches every R&D demo, recognises it, and bookmarks it. The Avatar robot moves from one device to another, and after circling the entire room, it returns to the starting position.

The user can control the Avatar in the app. They select a project they want to know more about. Our mobile robot pulls up in front of the demo so that the client can take a closer look. At the same time, information about the R&D initiative is displayed, including a description, photographs or video of the product, and an overview of technologies and architecture. When the remote tour ends, the robot says goodbye, and the client receives an email with a set of resources about the project that caught their attention.
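The bookmark-then-revisit flow described above can be pictured as a small lookup table. The sketch below is a hypothetical illustration, not the project's code: the class names, the confidence threshold, and the demo label are all assumptions. It stores the robot pose at which each demo was detected most confidently and returns that pose when the user selects the demo in the app.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Pose:
    x: float    # metres in the map frame
    y: float
    yaw: float  # heading in radians


@dataclass
class Bookmark:
    pose: Pose
    confidence: float


class DemoBookmarks:
    """Remembers where each recognised demo was seen best (hypothetical helper)."""

    def __init__(self, min_confidence: float = 0.6) -> None:
        self.min_confidence = min_confidence  # assumed detection threshold
        self._by_label: Dict[str, Bookmark] = {}

    def observe(self, label: str, confidence: float, robot_pose: Pose) -> bool:
        """Store the pose for `label`; return True if the bookmark changed."""
        if confidence < self.min_confidence:
            return False  # too uncertain to bookmark
        best = self._by_label.get(label)
        if best is not None and best.confidence >= confidence:
            return False  # an equally good or better sighting already exists
        self._by_label[label] = Bookmark(robot_pose, confidence)
        return True

    def goal_for(self, label: str) -> Optional[Pose]:
        """Pose to drive to when the user selects `label`, or None if unseen."""
        bookmark = self._by_label.get(label)
        return bookmark.pose if bookmark else None


if __name__ == "__main__":
    bookmarks = DemoBookmarks()
    bookmarks.observe("ivi-demo", 0.82, Pose(3.1, 0.4, 1.57))  # label is made up
    print(bookmarks.goal_for("ivi-demo"))
```

Keeping only the most confident sighting per demo is one simple policy; a real system might instead average several sightings or store the demo's own estimated position.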

Why did we create the autonomous mobile robot?

By running the Avatar robotics project, we wanted to demonstrate that we can take a solution from concept through every system development phase: business and system requirements, software architecture and design, implementation, and testing.

The Avatar robot was created as part of the Dojo so we could hone our technical skills as a team.

About Dojo – Spyrosoft’s purpose-based R&D

Dojo is a space where we create projects that are dictated by business and technology needs. We carry out initiatives that arise from market demands. These initiatives are also interesting for Spyrosoft employees and boost their competencies. As a result, we are able to prepare ourselves for ambitious client projects. We often present the results of our work within the Dojo at conferences or industry events.

The Dojo is not just about completing an initiative but about the knowledge our team members acquire during the process so that later, both sides – the client and the employee – feel confident working on a commercial product. While building Avatar, several people deepened their knowledge of ROS or learned about computer vision. Some of them have already moved from R&D to a “regular” robotics project. Others elevate their skills while working to develop the product.

Marieta Węglicka,

CTO at Spyrosoft Synergy, Head of Dojo Vision Crew

The technology behind the Avatar robot

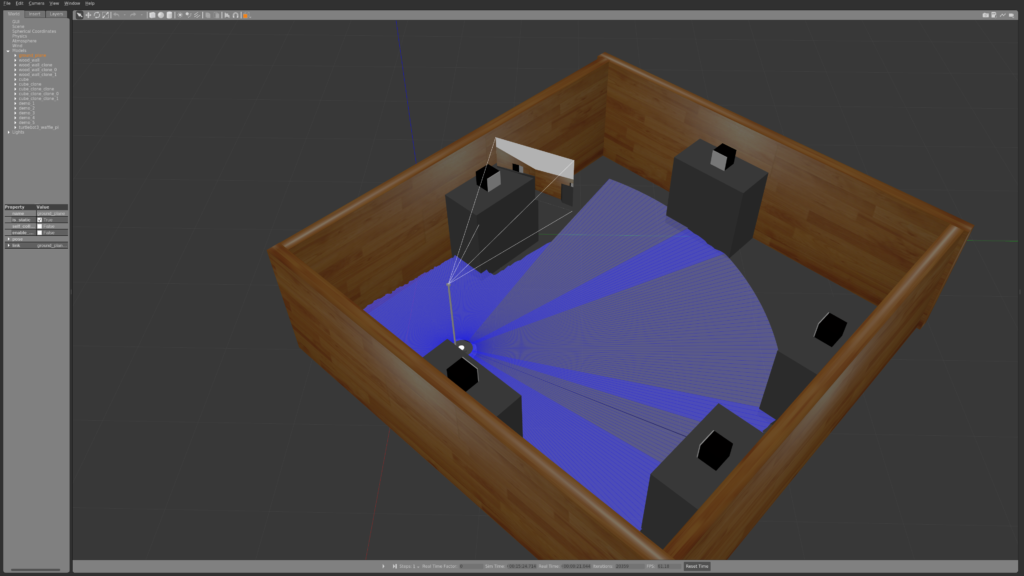

The robotic Avatar system is based on ROS2. It is designed to work in a previously unknown area, automatically discovering the map and searching for given artefacts.

To automatically learn the topology of the space, we studied and integrated lidar-based SLAM algorithms as implemented in Navigation2, the ROS2 planning package. On top of Navigation2, we had to implement a real-time exploration package that uses topology-discovery algorithms. These algorithms work with both large and small workspaces because they divide the map into subareas, much as GIS algorithms do. This approach delivers good performance and keeps the directions in which the robot explores local.
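The article does not publish the exploration package, so the following Python sketch swaps in a common, generic technique, frontier-based exploration over map tiles, to illustrate what processing the map into subareas can look like. It is not Spyrosoft's topology algorithm. The grid values follow the `nav_msgs/OccupancyGrid` convention (-1 unknown, 0 free, 100 occupied); the function names, the tile size, and the selection rule are assumptions.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

UNKNOWN, FREE = -1, 0
Cell = Tuple[int, int]  # (row, col)


def frontier_cells(grid: List[List[int]]) -> List[Cell]:
    """Free cells that touch at least one unknown cell (4-neighbourhood)."""
    rows, cols = len(grid), len(grid[0])
    found = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    found.append((r, c))
                    break
    return found


def frontiers_by_subarea(grid: List[List[int]], tile: int) -> Dict[Cell, int]:
    """Count frontier cells per square subarea of `tile` x `tile` cells."""
    counts: Counter = Counter()
    for r, c in frontier_cells(grid):
        counts[(r // tile, c // tile)] += 1
    return dict(counts)


def next_subarea(grid: List[List[int]], tile: int, robot: Cell) -> Optional[Cell]:
    """Pick the nearest subarea that still has frontiers; None when explored."""
    counts = frontiers_by_subarea(grid, tile)
    if not counts:
        return None
    home = (robot[0] // tile, robot[1] // tile)
    # Nearest tile first (Manhattan distance in tiles); ties go to the tile
    # with more frontier cells.
    return min(
        counts,
        key=lambda t: (abs(t[0] - home[0]) + abs(t[1] - home[1]), -counts[t]),
    )


if __name__ == "__main__":
    demo_map = [
        [0, 0, -1, -1],
        [0, 0, -1, -1],
        [0, 0, 0, 100],
        [0, 0, 0, 100],
    ]
    print(frontiers_by_subarea(demo_map, tile=2))
    print(next_subarea(demo_map, tile=2, robot=(3, 0)))
```

Working per subarea means only tiles whose cells changed need to be rescanned after a map update, and preferring nearby tiles keeps the robot from crossing the room for a single unexplored cell.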

Avatar is also prepared to detect artefacts, in this case other Spyrosoft projects, ranging from small MCU-connected screens to large automotive IVIs. We've designed computer vision systems powered by machine learning algorithms to achieve this goal. All of the above is built as a distributed system that can be tested in a simulated environment such as Gazebo.

Cross-domain cooperation

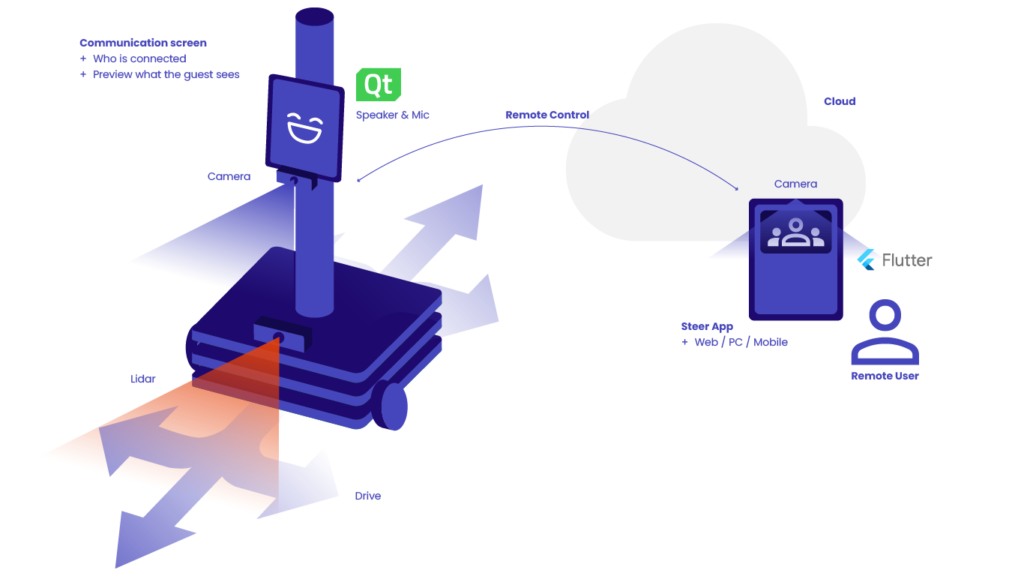

Avatar is an end-to-end project. It combines several technology domains:

- selection of hardware and components and building a robot,

- development of embedded software that includes recognition and mapping of the surroundings, audio and video streaming, and object detection with computer vision,

- design and implementation of an end-user application to control the robot and experience the remote environment,

- communication architecture between the robot and the application, with cloud services in the middle.

The greatest challenge was establishing cross-functional cooperation. At first, there was friction because it took a lot of work for specialists from different expertise fields to find common ground. The further we went into the initiative, the more their domains overlapped. After a while, the cooperation ran like a well-oiled machine, and Avatar was coming to life. We have blazed the trail, upskilled as individuals but also as a team, and now we feel more confident than ever in taking on extensive projects.

Marieta Węglicka,

CTO at Spyrosoft Synergy, Head of Dojo Vision Crew

At the company, we have cloud engineers, software developers, computer vision experts, and people familiar with hardware and robot building. But to build something that is stable in real time and performs well, we had to put a lot of emphasis on the spaces between domains and make sure there's enough shared understanding. Otherwise, we'd have only a kind of a solution: applications running in silos, inconvenient to merge, let alone optimise as a system.

This project required technological areas to cross over from day zero. Everyone involved, including cloud engineers and computer vision programmers, got hands-on with ROS, even though only embedded software developers use the platform on a daily basis. It also worked the other way around: embedded specialists learned about the cloud and introduced changes in the AWS services to see how they affected app performance.

Where can you meet Avatar?

The deadline for every Dojo initiative is set by a tech summit during which we present the results of our R&D work. Avatar is our first project on this scale and will play a starring role in our robotics debut. Like the robot, we are currently scanning the market to identify events where we can find common ground with other industry enthusiasts.

Right now, we can tell you that Avatar will participate in the Pittsburgh Robotics Network summit this autumn.

See you there!

Build your autonomous mobile robot with us

Are you looking for a company to which you can entrust your AMR project? Here you can find more about the scope of our professional robotics services and how we bring ideas to life.
