Not every product needs AI – here’s how to spot the ones that do

AI is everywhere – but not every product or workflow needs it. Despite the constant hype, adoption often feels slower than expected. Maybe you haven’t found a strong use case for your organisation yet? Or perhaps you’ve tried to implement AI, only to see the initiative fail.

This article offers a strategic lens for finding real AI opportunities. Rather than providing a checklist of use cases, it shares frameworks and ways of thinking to help you cut through the noise, prioritise wisely, and build lasting momentum.

The AI paradox

Leaders face a clear challenge: how do you tell the difference between AI opportunities that genuinely deliver value – and those that waste time and money?

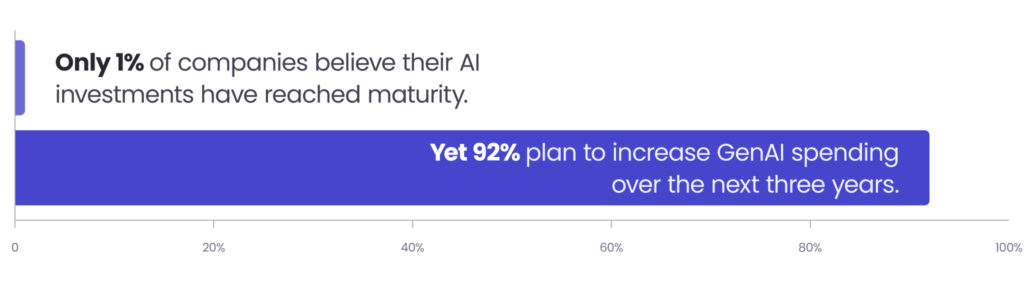

Early findings from MIT NANDA's GenAI Divide: State of AI in Business 2025 report suggest that 95% of enterprise GenAI pilots show no measurable P&L impact, while only 5% deliver material value. The evidence is early, but the trend is hard to ignore.

The problem usually isn’t faulty models or regulation. More often, it’s the wrong approach. You might think, “That’s just one study” – but other research points in the same direction, including McKinsey’s Superagency in the Workplace report.

The purpose of this guide

So how do we close this gap? A good start is identifying where AI can genuinely add value (inside products or workflows) without turning everything upside down.

This article won’t give you a ready-made list of AI use cases – that’s impossible. The right opportunities depend on your industry, team structure, risk appetite, and even individual creativity.

Instead, we offer a mental framework for spotting AI opportunities. You’ll find practical hints for systematically exploring where AI might bring the most benefit – and, just as importantly, how to avoid common traps.

One last note: this isn’t a step-by-step implementation guide. Building full-scale AI solutions is a separate challenge. The focus here is on how to think about AI opportunities in the first place.

Why Large Language Models changed the game

AI has many branches, but in recent years generative AI models – particularly Large Language Models (LLMs) – have captured the most attention. They’ve proven to be both versatile and valuable. Understanding their strengths helps clarify where they’re most useful.

What makes LLMs different

- Pre-trained knowledge. LLMs come trained on vast, diverse datasets. Out of the box, they already “know” quite a bit about different business domains.

- Flexibility across industries. The same model can write a policy summary one moment, then generate code the next. From healthcare through finance to customer support, the same foundation adapts to multiple contexts.

- Natural language interaction. Because you can communicate with LLMs in plain language, AI-powered features are easier to build and more intuitive for users. Adoption also accelerates as interfaces shift from typing to speech, opening entirely new possibilities.

- Fast evolution. Just a few years ago, these models were dismissed as “just next-token generators”. Today, they’re becoming agentic systems – able to reason, use external tools, and operate with increasing autonomy.

In short: think of an LLM as a highly capable generalist you can “hire”. Given the right context, it can meaningfully contribute to solving real business problems. That’s why so many people are excited, and with good reason.

Limitations and current uses of LLMs

Of course, excitement should be balanced with realism. GenAI is promising, but far from perfect. Some of the key limitations include:

- Hallucinations. Without proper grounding, models can generate plausible but false information.

- Stochastic outputs. The same input doesn’t always produce the same output. That can be an advantage for creative tasks and a challenge when you need reproducible results.

- Agentic risks. As models gain autonomy, they can occasionally disobey instructions or behave unpredictably, especially with limited human oversight.

- Limited holistic studies. Despite the buzz, research into long-term impacts and real-world adoption patterns is still emerging.

- Dynamic environment. Improvements arrive quickly, but so do new risks and constraints.

What do we know about adoption today?

Recent data from Anthropic’s Economic Index, based on millions of anonymised Claude interactions, paints a clearer picture.

Firstly: AI use today is concentrated in software development and technical writing.

Secondly: usage leans more toward augmentation (57%) – where AI enhances human capabilities – than full automation (43%).

And finally: deep integration remains rare. Only 4% of jobs use AI for at least 75% of tasks. Moderate adoption is more common, with 36% of jobs using AI for at least 25% of tasks.

The takeaway? AI is already useful, but mostly as a collaborator, not yet a full replacement.

Two strategic lenses for AI opportunities

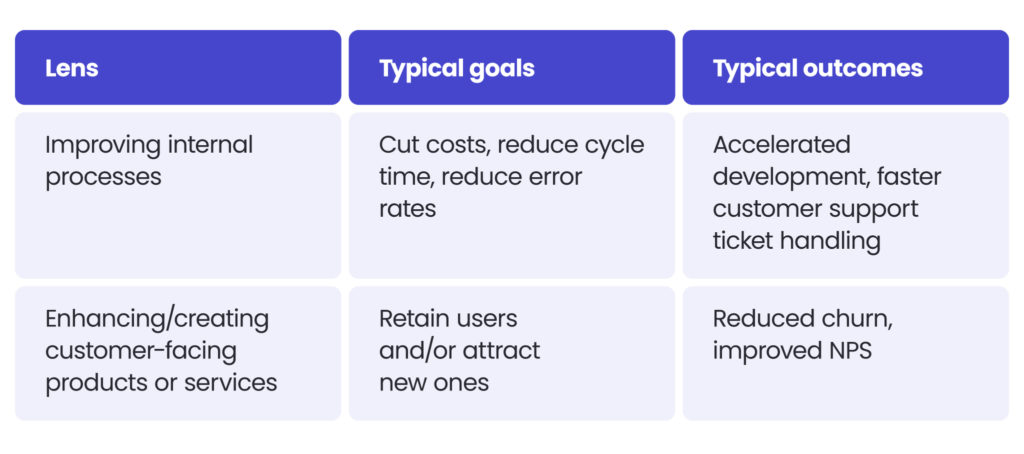

When it comes to practical applications, AI typically adds value in two core categories: internal workflows and customer-facing products or services.

These lenses often overlap. For example, a customer support process is both an internal workflow and a customer-facing service.

The key is to focus on solving real problems, not adding AI for its own sake. For anything customer-facing, be especially mindful of risk and compliance.

The AI opportunity radar

According to BCG, while more than 75% of leaders and managers use GenAI several times a week, usage among frontline employees has stalled at 51%.

That gap highlights a critical truth: simply layering AI on top of existing workflows is not enough. The real value lies in redesigning workflows with AI in mind from the start.

Here are the fundamentals for spotting the highest-impact opportunities:

- Start with value, not technology.

- Anchor everything in a clear business problem.

- Identify and size the pain points or friction.

- Prioritise carefully: avoid solving problems that don’t matter just because the technology is new.

Only once this foundation is in place should you map GenAI’s capabilities to potential solutions.

Value vs. Readiness Matrix: a practical filter

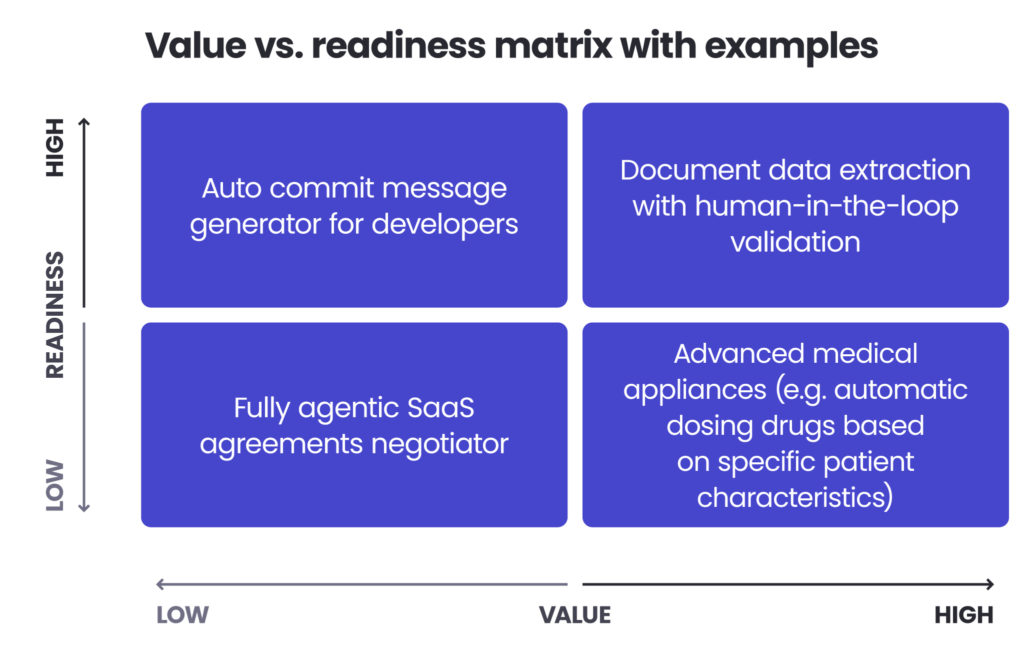

Once you understand AI capabilities and possible use cases, the next step is to rank opportunities by two factors:

- Value = measurable business impact (revenue, cost savings, quality improvements). Consider both the magnitude and frequency of the problem.

- Readiness = your organisation’s ability to implement and sustain the solution, based on technical feasibility, risk and compliance fit, data availability and quality, skills, and adoption appetite.

Think of this as a value vs. readiness matrix – similar to the familiar value vs. effort model, but tailored for AI.
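
One way to make the matrix concrete is to score each opportunity and sort the results. The sketch below is a minimal illustration, not a prescribed method: the 1 to 5 scale, the threshold of 3, the scores given to each example, and every quadrant label other than "quick win" are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    value: int      # assumed scale: 1 (marginal impact) to 5 (major impact)
    readiness: int  # assumed scale: 1 (not ready) to 5 (ready today)


def quadrant(opp: Opportunity, threshold: int = 3) -> str:
    """Place an opportunity in one of four quadrants (labels are hypothetical)."""
    high_value = opp.value >= threshold
    high_readiness = opp.readiness >= threshold
    if high_value and high_readiness:
        return "quick win"
    if high_readiness:
        return "low-risk learning"
    if high_value:
        return "build readiness first"
    return "deprioritise"


def rank(opps: list[Opportunity], threshold: int = 3) -> list[Opportunity]:
    """Ready opportunities first, then by value, then by readiness."""
    return sorted(
        opps,
        key=lambda o: (o.readiness >= threshold, o.value, o.readiness),
        reverse=True,
    )


# Illustrative scores only; real scores should come from your own assessment.
OPPORTUNITIES = [
    Opportunity("Auto commit messages", value=2, readiness=5),
    Opportunity("Customer-facing assistant", value=5, readiness=2),
    Opportunity("Document data extraction", value=5, readiness=4),
]

if __name__ == "__main__":
    for opp in rank(OPPORTUNITIES):
        print(f"{opp.name}: {quadrant(opp)}")
```

Sorting on readiness before value reflects the advice to start where the organisation can actually deliver. A team that wants to weight the two factors differently would change only the sort key.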

Insight: Readiness isn’t just about regulatory approval. According to Kearney & Futurum, nearly two-thirds of CEOs cite disconnected or low-quality data as the main barrier preventing AI from scaling beyond pilot phases. Robust data readiness is often the decisive factor.

Quick wins and strategy

How do you apply this framework in practice? Let’s look at two examples:

High-value, high-readiness: Document data extraction.

Value: Manual data entry (e.g. invoices, insurance claims) is painful and time-consuming. AI can extract, ground, and visually present the data automatically, which translates into large efficiency gains.

Readiness: Technology is already accurate, compliance manageable, and historical documents provide excellent evaluation datasets. With a human-in-the-loop setup, experts oversee the process while gaining speed.

High-readiness, modest-value: Auto commit messages.

Value: Developers save a bit of time with AI-generated commit messages – but the impact is marginal.

Readiness: Technically simple, low risk, and data readily available.

The difference illustrates why both value and readiness matter. Both initiatives are worth doing, but for different reasons: the first delivers clear ROI, while the second builds internal experience at low risk.

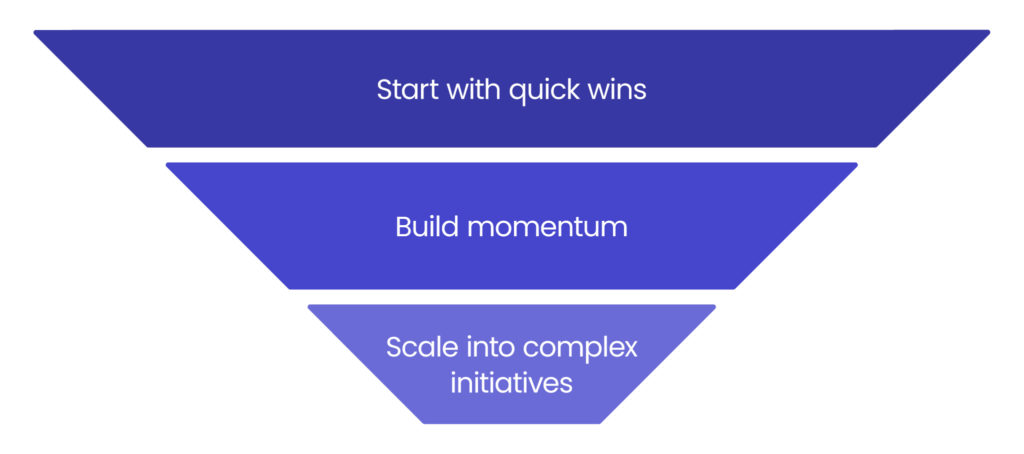

The best strategy?

- Start with high-readiness and high/moderate-value opportunities. These “quick wins” (such as document processing or internal knowledge surfacing) build experience, boost organisational confidence, and deliver meaningful benefits early.

- Use momentum to scale into higher-value, more complex initiatives. This includes core process redesigns or customer-facing AI features.

The key is to build AI capability incrementally and not bet everything on one ambitious, risky project.

Common pitfalls

Even promising AI initiatives can lose momentum or fail. Here are the most common mistakes, and how to avoid them.

1. Treating AI as the “Holy Grail”

What happens:

- Chasing buzzwords without validating real business problems.

- Diving into complex builds before running low-risk initiatives and gaining experience.

- Treating AI as magic that will solve ALL problems.

- Launching too many initiatives at once, so that none of them delivers real impact.

Warning signs:

- “We want to build something with AI!”

- Too many simultaneous proof-of-concepts.

- Overpromising and unrealistic expectations.

How to avoid:

- Apply the value vs. readiness framework rigorously.

- Dedicate resources to high-value, high-readiness opportunities.

- Define clear success criteria upfront.

- Keep solutions simple: avoid overengineering and unnecessary frameworks, especially early on.

2. Overlooking evaluations and governance

What happens:

- Unexpected model behaviour (e.g. hallucinations) damages trust.

- Deployments stumble on compliance, accuracy, or privacy issues.

- Stakeholder confidence erodes due to unmanaged risks.

Warning signs:

- “We’ll deal with compliance later.”

- Introducing AI into broken processes, making them worse.

- No clear accountability for AI governance.

How to avoid:

- Define evaluation sets early in the process.

- Incorporate human-in-the-loop verification or fallback mechanisms, especially for critical processes/features.

- Engage compliance and legal teams from the start.
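
To show what an early evaluation set can look like, here is a minimal sketch for the document extraction example. Everything in it is hypothetical: `toy_extract` stands in for a real extraction system, the two sample documents are invented, and the 0.9 release threshold is an assumed value rather than a recommendation.

```python
def toy_extract(text: str) -> dict[str, str]:
    """Hypothetical stand-in for an AI extractor: reads 'key: value' lines."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:  # only lines that contain the separator are parsed
            fields[key.strip()] = value.strip()
    return fields


def field_accuracy(predicted: dict[str, str], expected: dict[str, str]) -> float:
    """Share of expected fields that were extracted with the exact value."""
    if not expected:
        return 1.0
    correct = sum(1 for key, value in expected.items() if predicted.get(key) == value)
    return correct / len(expected)


def evaluate(extract, eval_set):
    """Return mean accuracy and the indexes of documents needing human review."""
    scores = [field_accuracy(extract(doc), expected) for doc, expected in eval_set]
    needs_review = [i for i, score in enumerate(scores) if score < 1.0]
    return sum(scores) / len(scores), needs_review


# Invented examples; in practice these would be historical documents
# paired with values that experts have already verified.
EVAL_SET = [
    ("invoice_no: A-17\ntotal: 120.50", {"invoice_no": "A-17", "total": "120.50"}),
    ("invoice_no: B-02\ntotal 99.00", {"invoice_no": "B-02", "total": "99.00"}),
]

RELEASE_THRESHOLD = 0.9  # assumed success criterion, agreed before the build

if __name__ == "__main__":
    mean_accuracy, needs_review = evaluate(toy_extract, EVAL_SET)
    print(f"mean accuracy: {mean_accuracy:.2f}")
    print(f"documents for human review: {needs_review}")
    print(f"meets threshold: {mean_accuracy >= RELEASE_THRESHOLD}")
```

The second document omits a colon on purpose, so the toy extractor misses one field and the document is flagged for review. The point of the design is that the success criterion and the fallback path exist before any model is chosen.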

3. Ignoring the human factor

What happens:

- Employees resist AI tools, limiting potential benefits.

- Unauthorised use of AI tools emerges when official solutions aren’t available.

- Skills gaps slow adoption.

- Teams delay action, waiting for “future AI” to fix current problems.

Warning signs:

- Minimal or no employee training.

- No safe environment for employees to propose new AI opportunities.

- Employees feel threatened by technology they don’t understand.

How to avoid:

- Involve end users early in design and testing (AI doesn’t change this timeless best practice).

- Foster a bottom-up culture: celebrate early adopters and their experiments.

- Provide tailored training modules, covering both AI’s potential and its limitations.

4. Building in isolation from frontline workers

What happens:

- Central AI or R&D teams design solutions without involving the people who actually use the process.

- The ideas look strong on paper but fail in real-world settings.

- Months of effort are wasted when frontline realities make the solution unworkable.

Warning signs:

- “Improvements” are built entirely in labs or head offices.

- Feedback is collected late, only after pilots launch.

- Workers see new AI tools as imposed rather than supportive.

How to avoid:

- Involve frontline experts from the start.

- Test assumptions directly in real environments – on the shop floor, in customer support queues, or wherever the process happens.

- Treat frontline employees as co-designers: they know the friction points better than anyone.

- Build iteratively with their input to ensure adoption and impact.

Strategic takeaways and next steps

For executives and leaders, spotting real AI opportunities is less about the latest tools and more about disciplined thinking:

- Anchor AI in business value, not technology.

- Use readiness as a filter. Without the right data, governance, or adoption mindset, even promising ideas can stall.

- Balance quick wins and bold bets.

- Avoid common traps like overhyping AI, ignoring governance, or designing in isolation.

AI won’t fix everything, but when applied thoughtfully, it can improve productivity, open new opportunities, and reshape how work gets done. To truly succeed, you need to approach AI strategically: start small, build confidence, and scale deliberately.

This article gave you the strategic lens. Want to go deeper? Check out the next piece where we roll up our sleeves and look at the practical side: exploring friction points, AI “primitives”, and how your team can begin applying these ideas in day-to-day operations.
