API management: the missing piece in scaling AI revolution

As AI moves from pilot projects to enterprise-scale ecosystems, integration has become the real challenge. Traditional API management can’t handle the complexity of LLMs, token costs, and data privacy risks. Learn how the Agent Mesh transforms API management into intelligent infrastructure, enabling cost efficiency and scaling AI in enterprise ecosystems.

Intro: the challenge of scaling AI

Every organisation wants to harness the power of LLMs. But when it comes to scaling those successes across the enterprise, most hit the same wall. Each new model adds complexity, data risk, and unpredictable costs. The missing piece isn’t another AI model, but better infrastructure.

In the early days of digital transformation, APIs were the invisible framework that held systems together. Today, they’re becoming just as critical for artificial intelligence. Because the truth is simple: you can’t scale AI without intelligent API management. That means moving from gateways to interconnected, context-aware networks known as the Agent Mesh.

Why APIs are the new AI infrastructure

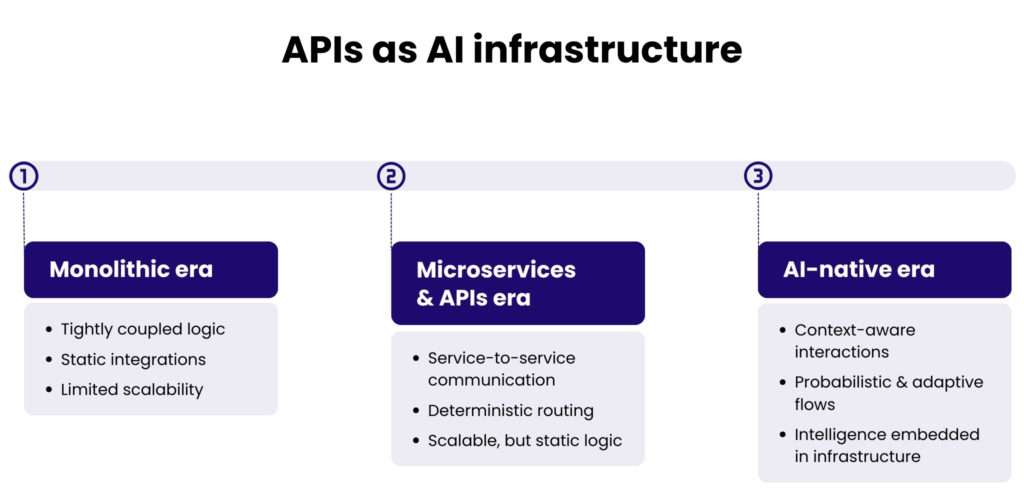

APIs have always been about connection. They made it possible for monolithic systems to talk, for microservices to collaborate, and for digital products to scale.

AI changes what “connection” means. Instead of static requests and predictable responses, AI systems exchange intent, probability, and reasoning. An LLM interprets data, adapts it, and sometimes even makes new decisions on its own. This kind of interaction creates new demands on infrastructure.

Without a new way to manage the complexity, organisations find themselves in chaos. They struggle with:

- Model orchestration complexity,

- Cost explosion from redundant calls,

- Privacy risks from unfiltered prompts,

- Lack of observability across multi-agent workflows.

That’s why the AI revolution needs a new kind of management layer – one that understands semantics, context, and purpose.

From API Gateway to Agent Mesh

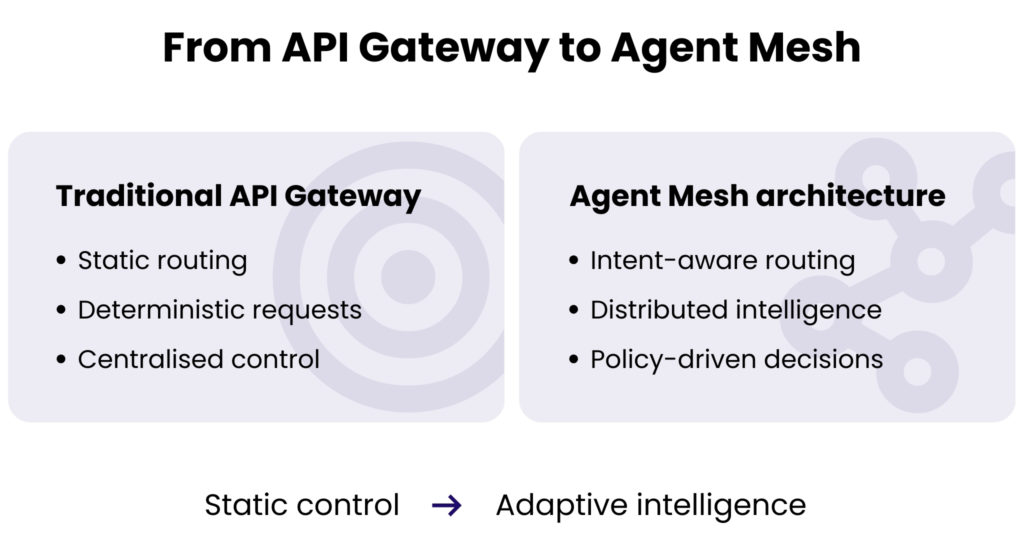

The API Gateway was once a simple solution. It offered centralised control and visibility across distributed systems. It was also built for a deterministic world where you knew exactly which endpoint you were calling and what you’d get back.

AI doesn’t live in that world anymore. In an LLM-driven environment, routes are dynamic, context changes constantly, and systems must interpret meaning, not syntax.

That’s where the Agent Mesh comes into the picture.

An Agent Mesh is a distributed, intelligent fabric connecting multiple Agent Gateways – each responsible not just for routing traffic, but for understanding the intent behind it. Together, they create an infrastructure capable of reasoning about data, policies, and performance.

In this mesh, APIs evolve into autonomous, context-aware services. They don’t wait for instructions but optimise and self-correct in real time. This is how AI ecosystems begin to scale.

Inside the Agent Mesh

In an Agent Mesh, intelligence flows (between models, gateways, and services), guided by a layer of orchestration that’s constantly learning.

Each Agent Gateway contributes part of the system’s collective intelligence:

- Some handle semantic caching, storing meaning-level patterns to reduce redundancy,

- Others manage guardrails that monitor content safety and data privacy,

- Some specialise in token tracking, offering granular insights into cost and performance.

This distributed approach creates something entirely new: a self-optimising ecosystem. Workflows heal themselves, documentation updates automatically, and data protection happens in real time.

Real-life examples

You can see early examples of this already emerging:

- Kestra orchestration: Executes ML pipelines, tracks execution, and self-heals after errors.

- SpecGen: APIs that write and update their own documentation dynamically based on live usage data.

- BlackBird: Repo-to-spec automation – living documentation as a service.

Clearly, AI is moving from being used by APIs to being built into them.

Functional foundations for scaling AI

What makes the Agent Mesh more than a concept are its core functional pillars – each addressing a real limitation of AI infrastructure today.

1. Intelligent routing

Dynamically selects the best model, dataset, or microservice depending on policy, performance, or real-time context.

2. Semantic caching

Recognises semantically similar queries and reuses previous results, reducing both latency and cost.

3. PII masking and redaction

Detects and anonymises personal data across prompts, using models like GLiNER for multilingual, zero-shot named entity recognition.

4. Token tracking

Provides deep visibility into token usage and cost, helping organisations understand how AI workloads scale and where optimisation matters.

5. Guardrails

Protect against prompt injection, hallucination, and sensitive data leakage, ensuring responsible AI use.

Each of these capabilities transforms traditional governance into an architecture that sees, reasons, and reacts. This is how API management evolves into intelligence management.

Securing and optimising the AI ecosystem

Security no longer ends at the endpoint but extends into the semantics of what the system is processing. A traditional API Gateway can enforce authentication or rate limits. An Agent Mesh can detect when a model is asked for something it shouldn’t reveal, or when sensitive data slips into a prompt.

With integrated guardrails and PII detection, the system understands context, not just content. It can flag risks, redact private details, or even adjust responses based on organisational policies.

GLiNER – a multilingual, zero-shot Named Entity Recognition model, detects PII in context, even without labeled data. It recognises names, organisations, addresses, and sensitive entities in multiple languages, ensuring compliance and user trust.

At the same time, the mesh continuously optimises performance. Intelligent routing ensures that workloads are distributed efficiently between providers and models. The result is a system that’s more secure, faster, leaner, and more sustainable.

Ecosystem alignment & future outlook

The rise of the Agent Mesh aligns with a broader shift across enterprise AI architecture. Concepts like MLOps, data observability, and hybrid-cloud orchestration are all converging toward one goal: making intelligence operational at scale.

Explore our High Tech services

Learn moreThe Agent Mesh acts as the connective layer that ties these domains together, ensuring that insights move seamlessly from models to applications, and from data pipelines to decision engines.

Looking ahead, this evolution points toward self-governing API ecosystems – systems that can negotiate, optimise, and secure themselves autonomously. It’s a glimpse of an AI-native service fabric, where intelligence is no longer an add-on to infrastructure but an integral part of it.

Conclusion

As enterprises scale their use of AI, effective API management becomes essential for maintaining control and security. Traditional gateways are no longer enough. The focus must shift from routing calls to orchestrating meaning.

The Agent Mesh represents this next step: an architecture where APIs not only move data but interpret and protect it – where governance is dynamic, and systems learn from every interaction.

By embedding context-awareness and security directly into the infrastructure, businesses can finally scale AI projects responsibly and efficiently.

Spyrosoft helps organisations take that step. Get in touch using the form below to see how our API management expertise supports AI-ready, agent-driven architectures.

FAQ: API management and scaling enterprise AI

Most AI pilots succeed in isolation because they bypass enterprise realities: shared infrastructure, cost controls, compliance, and cross-team dependencies. Scaling fails when each new model or agent is integrated ad hoc. Without intelligent API management, complexity compounds faster than value.

No. While LLMs accelerate the need for an Agent Mesh, the architecture benefits any system with dynamic decision-making, multi-model strategies, or hybrid AI stacks (ML + rules + heuristics). Even predictive or classical ML systems gain from semantic observability and intelligent routing.

By abstracting intent from execution, the mesh allows organisations to swap models, providers, or tools without rewriting integrations. Policies and routing logic live at the orchestration layer, not inside applications, enabling real multi-vendor AI strategies.

No, it complements MLOps. While MLOps governs model training, deployment, and lifecycle, the Agent Mesh governs how intelligence is used in production. It connects models to real-world applications, policies, and users in a controlled, adaptive way.

If your organisation is facing:

– Rapid growth in AI use cases

– Rising LLM costs with unclear ROI

– Increasing compliance or data exposure risks

– Multiple AI vendors or models

…then the Agent Mesh becomes a scaling prerequisite.

About the author

contact us

Ready to explore what’s possible? Let’s innovate, together

our blog