Agent Gateway: how AI changes API management

API management is entering a new era. As AI agents reshape how systems interact, a new paradigm emerges – the Agent Gateway. Learn how intelligent orchestration, semantic caching, and AI-native governance are redefining digital connectivity for the AI age.

Intro: the dawn of intelligent connectivity

The evolution of digital systems has always been about connection: first systems, then services, now intelligence. APIs became the nervous system of digital enterprises – but AI is now the brain that requires a new type of interface.

Welcome to the Agent Gateway era, the next evolutionary leap in API management designed for an AI-native world.

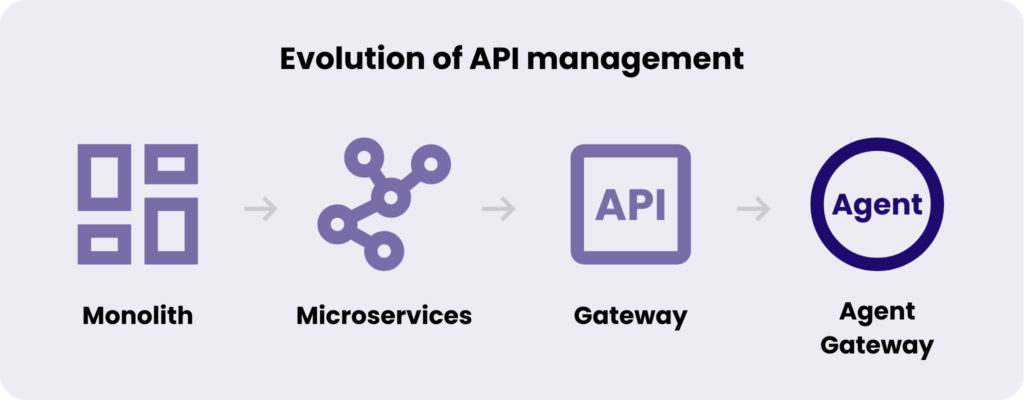

In this article, we explore how API management evolved from monoliths to microservices, and now toward intelligent, AI-driven ecosystems – where APIs don’t just connect systems but help them understand each other.

The evolution of API management

The early years: monoliths & simplicity

Early applications were built as monoliths. One codebase contained everything: product pages, pricing logic, order handling, customer records. That simplicity felt like freedom. While monoliths weren’t always quick to set up, once they were in place teams could iterate rapidly – until every new feature hit another wall. A tiny change in checkout code could require redeploying the entire application. Monoliths taught us the painful lesson that simplicity at the start can result in cascading failures at scale.

The microservices revolution

Then came microservices – a new way to break down applications into smaller, autonomous parts. Teams could ship in parallel, scale only what needed scaling, and adopt technology choices suited to each domain. It brought agility and flexibility but also introduced new problems – increased operational overhead, more moving parts, and far more communication between services. Suddenly, the network replaced a single process, and the architecture became distributed: powerful, but noisier.

The API gateway era

To tame this complexity, organisations adopted API gateways: the central nervous system for distributed systems. Gateways unified security, observability, and access management, enabling scalable growth. For a long time, the gateway was the best engineering trade-off we had. It reduced client-side complexity and restored control. It was the pragmatic glue between teams, clouds and customers.

But the world is changing again. The arrival of AI (especially large language models and agentic workflows) exposes the limits of gateway thinking. Traditional API management expects deterministic calls: a client knows the endpoint; the server returns a predictable schema. AI does not play by those rules. Agents make decisions and reason with context. The conventions we relied on (static routing, predefined contracts, and manual documentation) limit how adaptive systems can become.

Intelligent ecosystems

This is where the next phase begins. The Agent Gateway reframes the gateway role – instead of routing by URL, it routes by purpose. It understands intent: what the caller means, what data is sensitive, which model or service is optimal, and how to protect the context as it moves through the system.

Importantly, this doesn’t replace existing services or their APIs. What changes is the workflow layer above them: AI helps orchestrate when and how APIs are used, and turns static integrations into more adaptive, context-driven sequences.

Just as microservices broke the monolith, AI is now breaking static APIs – not by removing them, but by enabling more fluid, context-aware, self-optimising workflows on top of existing interfaces.

Why AI changes API management

APIs have always assumed predictability. You send a request, you get a response. Artificial intelligence, however, operates in probabilities, not certainties. Instead of following fixed routes, LLMs and AI agents explore multiple paths. They learn, adapt, and make decisions in real time. What used to be a straight line between a client and a server has become a dynamic conversation between intelligent systems.

Through mechanisms like ChatGPT plugins or OpenAI’s function calling, AI models can analyse intent, determine which API is relevant, and invoke it on their own. That’s the essence of intent-driven integration – an ecosystem where the system itself decides what to connect, when, and why. It’s a level of orchestration that traditional API gateways were never built to handle.
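As a rough illustration of the function-calling pattern described above, the sketch below registers two hypothetical API wrappers with a short description each, then dispatches a tool call of the kind a model might emit (a tool name plus JSON-encoded arguments). The tool names, the `tool` decorator, the `dispatch` helper, and the hard-coded model output are all assumptions made for illustration; no real LLM provider API is called.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical registry: tool name -> (description shown to the model, callable).
TOOLS: Dict[str, Tuple[str, Callable[..., Any]]] = {}

def tool(name: str, description: str):
    """Register a function so an agent can discover and invoke it by name."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = (description, fn)
        return fn
    return decorator

@tool("get_order_status", "Look up the status of an order by its ID.")
def get_order_status(order_id: str) -> dict:
    # Stand-in for a call to an existing order API.
    return {"order_id": order_id, "status": "shipped"}

@tool("get_price", "Return the current price of a product SKU.")
def get_price(sku: str) -> dict:
    # Stand-in for a call to an existing pricing API.
    return {"sku": sku, "price": 19.99}

def dispatch(tool_call: dict) -> Any:
    """Invoke the tool a model selected; reject anything not registered."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    _, fn = TOOLS[name]
    arguments = json.loads(tool_call["arguments"])
    return fn(**arguments)

# Simulated model output; in practice this would come from the LLM.
model_tool_call = {"name": "get_order_status", "arguments": '{"order_id": "A-1001"}'}
result = dispatch(model_tool_call)
print(result)
```

The existing APIs stay unchanged in this sketch; only the decision about which one to call moves from the client code to the model.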

This shift exposes a deeper limitation: traditional API management governs requests, not reasoning. As AI agents choose paths dynamically, orchestration itself becomes the architectural concern.

In this new world, the challenges multiply:

- Model orchestration: deciding in real time which LLM, service, or dataset to use for a specific task.

- Context transfer and semantic caching: passing not just data but meaning between models and services, while minimising duplicate or unnecessary calls.

- Privacy and PII management: automatically identifying, masking, and safeguarding sensitive information across dynamic AI workflows.

- Prompt-level observability: tracking, understanding, and controlling how prompts evolve (and what they expose) as they flow through systems.
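To make the privacy challenge in the list above more concrete, here is a minimal sketch of masking PII in a prompt before it leaves the gateway. The two regular expressions and the `mask_pii` helper are illustrative assumptions; real PII detection usually needs far broader coverage (names, addresses, identifiers) and is often model-assisted.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only: one for e-mail addresses, one for phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def mask_pii(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches with typed placeholders and count what was masked."""
    counts: Dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts

prompt = "Contact Jane at jane.doe@example.com or +48 123 456 789 about order 42."
masked, counts = mask_pii(prompt)
print(masked)
print(counts)
```

Returning the counts alongside the masked text gives the observability layer something to log without storing the sensitive values themselves.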

Each of these problems exposes the same truth: the old tools of API management were never meant for intelligent ecosystems.

The shift is architectural. To thrive in an AI-first landscape, enterprises must move from managing endpoints to managing intent flows: from supervising requests to supervising reasoning.

And that’s exactly where the Agent Gateway enters the picture: as the connective tissue that understands purpose and makes the unpredictable manageable again.

The Agent Gateway

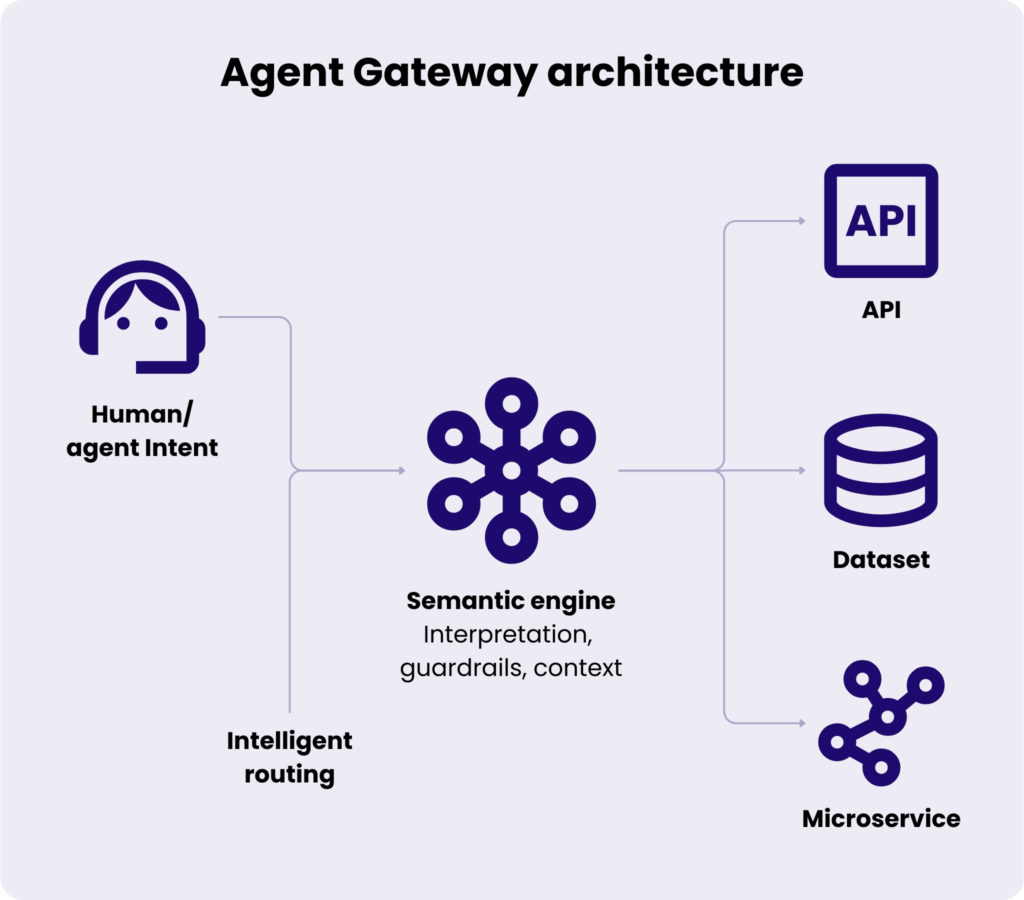

The Agent Gateway emerges in response to a simple but difficult reality: intelligent systems can’t be governed the same way deterministic ones are.

It’s an AI-native orchestration layer connecting humans, APIs, and intelligent agents. It doesn’t just pass data between endpoints, but interprets, evaluates, and safeguards it.

Instead of routing by endpoint, it routes by intent and context. It can decide, for instance, which model, dataset, or microservice best serves a given goal, based on real-time signals.
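Routing by intent rather than by endpoint could look roughly like the sketch below. It uses simple keyword overlap as a stand-in for real intent classification, which in practice would more likely rely on an embedding model or an LLM. The route names, keywords, and the `route_by_intent` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Route:
    name: str            # hypothetical backend: a model or an existing service
    keywords: frozenset  # words that signal this route's intent

ROUTES: List[Route] = [
    Route("billing-service", frozenset({"invoice", "refund", "payment"})),
    Route("code-model", frozenset({"function", "bug", "compile"})),
    Route("general-model", frozenset()),  # fallback when nothing matches
]

def route_by_intent(request_text: str) -> str:
    """Pick the route whose keywords overlap most with the request."""
    words = set(request_text.lower().split())
    best = max(ROUTES, key=lambda r: len(r.keywords & words))
    # If no keyword matched at all, fall back to the general-purpose route.
    if not best.keywords & words:
        return "general-model"
    return best.name

print(route_by_intent("Please issue a refund for my last invoice"))
print(route_by_intent("Why does this function fail to compile"))
print(route_by_intent("Tell me a joke"))
```

The caller never names a URL here; the gateway derives the target from what the request is about, and real-time signals such as latency or cost could be added to the scoring function.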

Functional pillars of the Agent Gateway

- Intelligent routing – automatically selects the most efficient model or service for each request.

- Semantic caching – stores responses and reuses them for semantically similar queries to improve speed and reduce compute costs.

- PII masking & redaction – detects and protects personally identifiable information in real time.

- Token tracking – monitors how many tokens each model call consumes, bringing visibility into performance and cost.

- Guardrails – ensures safe and compliant AI use by preventing data leakage or malicious prompts.
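Of the pillars above, semantic caching is perhaps the least familiar, so a small sketch may help. It returns a cached response when a new query is similar enough to a stored one. The bag-of-words `embed` function is a deliberately crude assumption standing in for a real embedding model, and the `SemanticCache` class and the 0.8 threshold are illustrative choices.

```python
import math
from collections import Counter
from typing import List, Optional, Tuple

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (not a real model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str) -> Optional[str]:
        """Return the response of the most similar stored query, if close enough."""
        q = embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

cache = SemanticCache(threshold=0.8)
cache.put("what is the refund policy", "Refunds are accepted within 30 days.")
hit = cache.get("what is the refund policy please")   # similar wording: served from cache
miss = cache.get("how do I reset my password")        # unrelated: returns None
print(hit, miss)
```

The threshold is the main design choice: set too low, the cache returns answers to questions that were never asked; set too high, it rarely saves a model call.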

Together, these features redefine API governance. What used to be about managing traffic now becomes AI observability and adaptive control – a key step toward intelligent infrastructure.

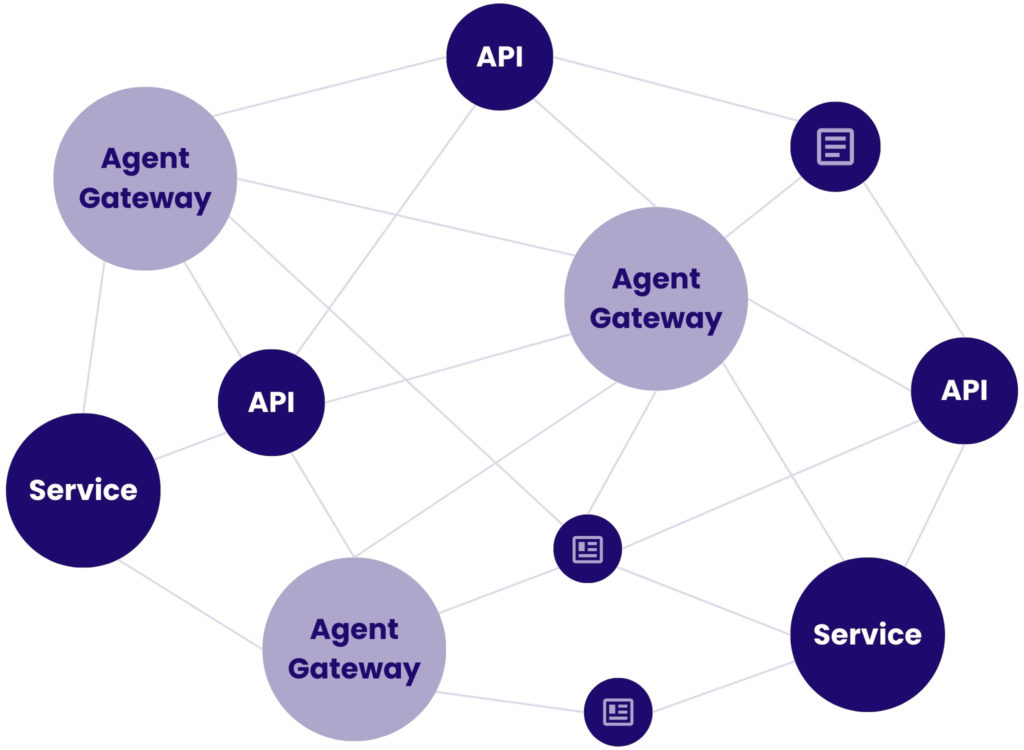

The Agent Mesh

When multiple Agent Gateways connect, they form an Agent Mesh: a web of intelligent, self-optimising services that communicate through meaning, not hard-coded logic.

In this ecosystem:

- APIs evolve into self-aware nodes that understand intent.

- Services collaborate, negotiate, and optimise routing in real time.

- AI orchestration tools (like Kestra) execute ML pipelines, track executions, and self-heal when failures occur.

- Projects like SpecGen and BlackBird demonstrate this evolution – APIs that can generate and update their own documentation based on real usage data.

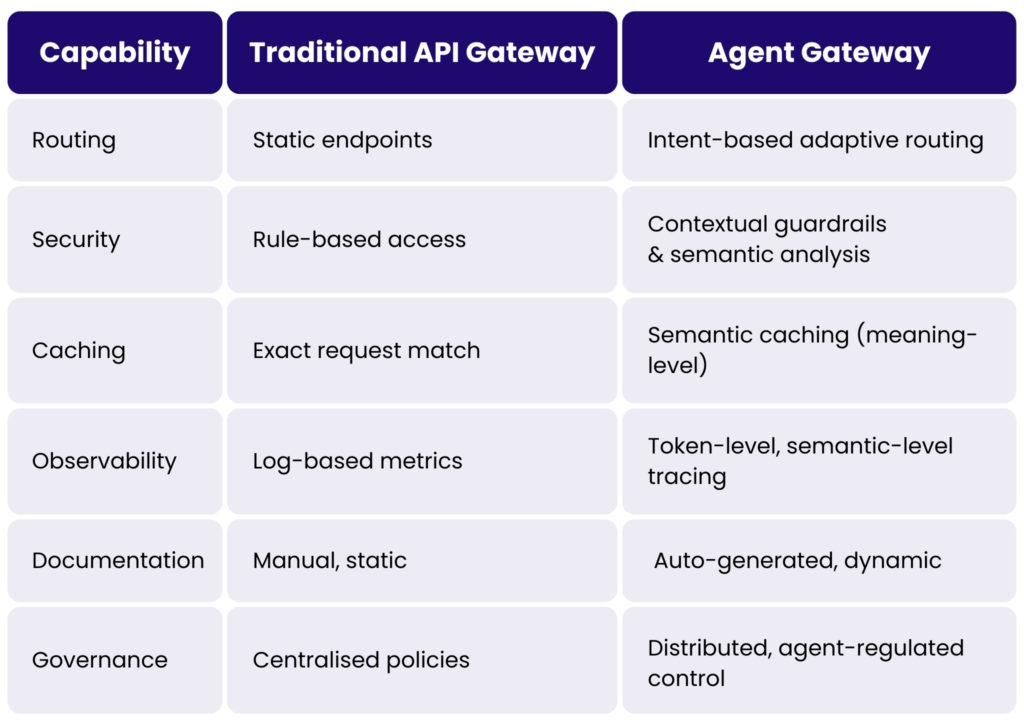

Comparison: traditional vs. agent-driven API management

The shift becomes most visible in how traditional gateways and agent-driven approaches differ in practice.

What it means for technology leaders

For CTOs and CIOs

For technology leaders, this shift is a fundamental rethinking of how digital ecosystems operate. The move toward AI-native API management demands new skills, new architectures, and a new philosophy of control.

Traditional API management was about structure and predictability. But in an agentic environment, control becomes orchestration. CTOs and CIOs must now oversee systems that reason, adapt, and make autonomous decisions.

From a governance perspective, the Agent Gateway also adds a critical layer of security and visibility. Instead of scattered safeguards, sensitive data handling and compliance rules can be enforced consistently across AI workflows. Detailed logging makes it possible to see what happens beneath the surface – which models are invoked, how tokens are used, and how decisions evolve over time.
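The token visibility mentioned above can be sketched as a small per-model aggregator. The `TokenTracker` class, the model names, and the prices per 1,000 tokens are hypothetical; real pricing varies by provider and usually differs between prompt and completion tokens.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict

@dataclass
class Usage:
    calls: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0

class TokenTracker:
    """Aggregate token usage per model so cost and load become visible."""

    def __init__(self, price_per_1k_tokens: Dict[str, float]):
        # Hypothetical flat prices per 1,000 tokens, keyed by model name.
        self.prices = price_per_1k_tokens
        self.usage = defaultdict(Usage)

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        u = self.usage[model]
        u.calls += 1
        u.prompt_tokens += prompt_tokens
        u.completion_tokens += completion_tokens

    def cost(self, model: str) -> float:
        u = self.usage[model]
        total = u.prompt_tokens + u.completion_tokens
        return total / 1000 * self.prices[model]

tracker = TokenTracker({"small-model": 0.5, "large-model": 5.0})
tracker.record("small-model", 1200, 300)
tracker.record("small-model", 500, 0)
tracker.record("large-model", 2000, 1000)
print(tracker.usage["small-model"], tracker.cost("small-model"))
print(tracker.usage["large-model"], tracker.cost("large-model"))
```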

Observability, too, takes on a new meaning. It’s no longer enough to know what was called – leaders must understand why. Modern observability tracks prompt usage, model drift, and end-to-end decision flows, offering insight into how agents choose, reason, and evolve over time.

The emergence of new roles

New roles are already emerging. AI Orchestration Engineers will design workflows that blend APIs and models. AgentOps specialists will maintain fleets of intelligent agents – monitoring, optimising, and securing them. Some organisations will even introduce an AI Agent Orchestrator, a role that oversees the performance and collaboration of autonomous systems across the enterprise.

At the same time, AI Ethics and Governance Officers will become essential. They will be responsible for maintaining transparency, trust, and compliance in agentic decision-making.

For Heads of Product and Innovation

For product and innovation leaders, the Agent Gateway opens an entirely new canvas for experimentation.

Where traditional integration models slowed teams down with dependencies and documentation, AI-native connectivity accelerates innovation. Products can now tap into intelligent ecosystems that adapt in real time.

The Agent Gateway creates a foundation where new AI-driven capabilities can be deployed incrementally. Instead of replacing existing systems, teams can layer intelligence on top of what already works.

API management: the path forward

For many enterprises, the transformation will be gradual. Re-architecting everything at once isn’t realistic. The smarter approach is to layer Agent Gateway capabilities on top of existing infrastructure, enabling AI-native behaviour step by step.

That’s where Spyrosoft acts as a trusted engineering partner. We help organisations bridge from static gateways to dynamic, intelligent ecosystems, designing a roadmap that introduces semantic monitoring, automated routing, and AI observability – without disrupting core operations.

Our goal is simple: to make the journey from APIs to agents strategic and sustainable.

Conclusion

Static API management is no longer sufficient on its own. What comes next is dynamic, intelligent collaboration – systems that exchange understanding.

As AI agents multiply across enterprises, APIs are evolving from technical endpoints into living components of an intelligent mesh. The early signs are already here: autonomous agents calling APIs, models orchestrating workflows, systems learning from their own interactions.

To lead in this new landscape, organisations have to treat API management as an AI-first discipline. That means replacing hand-written documentation with self-describing interfaces and fixed routing with intent-driven orchestration.

The Agent Gateway stands at the centre of this transformation. It bridges the world of human intent, traditional software, and machine intelligence – translating purpose into action. Step by step, it enables companies to evolve toward architectures that are not only connected but also cognitive.

In the age of AI, success doesn’t belong to those who build the loudest systems – it belongs to those who build the ones that listen. Contact us via the form below to learn how we respond to the needs of AI agents in our API management projects.

Sources: We’ve drawn on industry research and expert analyses throughout this overview, including: Imaginet.com, Business-reporter.com, Apideck.com, Portkey.ai, Gravitee, Medium.com, CIO. These insights underline that the Agent Gateway is not science fiction, but the next logical step in API management for an AI world.

FAQ: AI-native API management

The Agent Gateway is best understood as an architectural pattern rather than a single off-the-shelf product. Different vendors may implement parts of it (routing, guardrails, observability, orchestration), but its real value lies in how these capabilities are composed. In practice, organisations may build it using a combination of existing gateways, AI orchestration tools, LLM providers, and custom logic.

Adopting an Agent Gateway does not require replacing existing APIs. One of the core strengths of the approach is that it layers intelligence on top of them. Your current services remain intact. The gateway operates at the orchestration level, deciding when, how, and why APIs are invoked.

Agent Gateways are designed with observability and guardrails precisely because AI systems are probabilistic. When a suboptimal decision occurs, the gateway provides traceability: which intent was inferred, which model or service was chosen, and why. This makes failures debuggable, auditable, and improvable.
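One way to capture that traceability is a structured record per routing decision, written as a single log line. The `DecisionTrace` fields and the sample values below are assumptions chosen to mirror the three questions above (which intent, which target, and why); they are not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class DecisionTrace:
    request_id: str
    inferred_intent: str   # what the gateway believed the caller wanted
    chosen_target: str     # the model or service that was invoked
    reason: str            # why that target was preferred
    candidates: List[str] = field(default_factory=list)  # alternatives considered

    def to_log_line(self) -> str:
        """Serialise to one JSON line so traces can be searched and audited."""
        return json.dumps(asdict(self), sort_keys=True)

trace = DecisionTrace(
    request_id="req-001",
    inferred_intent="refund_request",
    chosen_target="billing-service",
    reason="highest keyword overlap",
    candidates=["billing-service", "general-model"],
)
line = trace.to_log_line()
print(line)
```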

Instead of scattering compliance rules across services, the Agent Gateway centralises policy enforcement at the reasoning layer. This makes it easier to enforce GDPR, data residency, or sector-specific regulations consistently (even when AI agents dynamically compose workflows across multiple systems and vendors).
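Centralised enforcement can be pictured as one function that runs every policy before any model or service is invoked. The sketch below is a simplified assumption: the `CallContext` fields, the two rules, and the region names are illustrative only and are not legal guidance on GDPR or any other regulation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class CallContext:
    target: str          # hypothetical model or service about to be invoked
    target_region: str   # where that target processes data
    contains_pii: bool   # result of an upstream PII detection step
    pii_masked: bool     # whether masking has already been applied

# A policy returns None when satisfied, or a human-readable violation.
Policy = Callable[[CallContext], Optional[str]]

def residency_policy(ctx: CallContext) -> Optional[str]:
    # Illustrative rule: requests containing PII may only go to 'eu' targets.
    if ctx.contains_pii and ctx.target_region != "eu":
        return f"{ctx.target}: PII must stay in region 'eu'"
    return None

def masking_policy(ctx: CallContext) -> Optional[str]:
    if ctx.contains_pii and not ctx.pii_masked:
        return f"{ctx.target}: PII must be masked before the call"
    return None

POLICIES: List[Policy] = [residency_policy, masking_policy]

def check(ctx: CallContext) -> List[str]:
    """Run every policy in one place and collect all violations."""
    return [v for v in (policy(ctx) for policy in POLICIES) if v is not None]

allowed = check(CallContext("eu-model", "eu", contains_pii=True, pii_masked=True))
blocked = check(CallContext("us-model", "us", contains_pii=True, pii_masked=False))
print(allowed)
print(blocked)
```

Because agents compose workflows dynamically, the check runs per call rather than per service, so a new target added tomorrow is covered by the same rules without any change to that service.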

The most effective path is incremental adoption:

- Introduce semantic observability and token tracking.

- Add intent-based routing for selected use cases.

- Layer in guardrails and PII protection.

- Gradually expand orchestration across domains.

This is the approach Spyrosoft recommends: evolving toward AI-native connectivity without destabilising existing platforms.
