Transforming ROS 2 development with rtest

Imagine your team has just delivered a key feature in a commercial robotics system. You know the codebase is in bad shape. As a developer, you feel it every day. But leadership is focused on delivering features, not quality. So you keep moving fast, stacking up technical debt. The system grows more complex. CI pipelines start to flake. Everyone knows they’re unreliable, but no one addresses it — it’s easier to ignore than to fix. Eventually, it breaks down. Pipelines fail more often than they pass. No one can push safely. Deployment becomes a gamble. Then, it hits. A critical hotfix needed yesterday. And the whole delivery system is too fragile to respond.

This issue kept showing up across robotics projects — especially the ones built on ROS 2. What should have been straightforward development turned into a daily battle with flaky, unpredictable tests.

We had hundreds of tests, but no real confidence in them. Every code change triggered multiple CI runs, eating up hours of developer time. Frustration set in. Engineers weren’t coding — they were waiting. And worse, they were waiting on results they couldn’t trust.

It wasn’t just annoying. It was slowing everything down. As the project grew, so did the chaos. Developers spent more time debugging the tests than building the product. Releases felt like rolling the dice — a failed test might mean a real issue, or it might be nothing at all. Either way, it chipped away at trust in the test suite. Everyone got more cautious. Progress stalled.

One of our clients decided to break the cycle. No more band-aids or one-off fixes. They needed a real solution — a better foundation for testing in ROS 2.

Understanding the ROS 2 testing challenge

To fix the problem, we first needed to understand it. The root cause wasn’t our test code. It was the nature of ROS 2 itself. Built for distributed, modular robotics systems, ROS 2 is incredibly powerful, but its architecture makes unit testing complex by design.

At the core is an asynchronous communication model. Messages pass between nodes through multiple layers, all the way down to middleware implementations like DDS. This flexibility is great for real-world applications, but it introduces unpredictability during test execution. Message timing, race conditions, and system state can all vary from one test run to the next.

In short: ROS 2 wasn’t built for isolated unit testing out of the box. That’s what we set out to change.

ROS 2’s architecture, while powerful for distributed robotics, introduces a perfect storm of variables that make consistent test results difficult to achieve.

Timing is one of the biggest culprits. System load and CPU scheduling can vary between test runs, leading to different outcomes even with the same code. Hardware differences between local environments and CI pipelines can change how processes behave. Add to that the nondeterministic nature of middleware communications and multithreaded operations, and you get race conditions and timing-dependent failures that appear randomly and disappear just as quickly.

Service discovery adds another layer of complexity.

ROS 2 nodes rely on DDS to find each other through timing-sensitive handshakes, QoS negotiations, and broadcast messages. In test environments, this process can behave differently depending on system load, network conditions, or even the sequence in which nodes launch. A test might pass when the system is idle but fail under stress, not because of a bug, but because node discovery took slightly too long.

It’s worth adding that issues like service discovery and race conditions can probably be addressed — one way or another. But solving them usually means extra effort, boilerplate code, and no guarantee that the fix will scale across all scenarios.

Sure, Fast DDS offers static discovery to get around some of these problems. But do we really want to dive into that level of system tuning just to run unit tests?

We could switch to Zenoh, or even build our own test middleware. But the fact that we’re even considering these kinds of workarounds just reinforces the core issue: we don’t need more patchwork. We need a systematic solution.

Message delivery timing is also unpredictable. A publisher might send a message, but there’s no guarantee a subscriber will receive it within a fixed window. Traditional workarounds like inserting arbitrary delays or polling are fragile and tend to increase test complexity and runtime without improving reliability.

The result? A testing process that can’t be trusted.

Code that works perfectly in development may fail in CI not because it’s broken, but because the infrastructure doesn’t provide the determinism needed to validate it.
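The fixed-delay workaround described above can be reduced to a small, ROS-free sketch. Everything in it is hypothetical: `receivedWithinWindow`, `deliveryLatency` and `waitWindow` are illustrative names, and a plain thread stands in for the middleware. It only shows why "publish, sleep, then check" depends on timing rather than on the correctness of the code under test.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical stand-in for a "publish, sleep, then check" test.
// deliveryLatency models the middleware delay; waitWindow is the fixed sleep
// chosen by the test author. Returns true if the message arrived in time.
bool receivedWithinWindow(std::chrono::milliseconds deliveryLatency,
                          std::chrono::milliseconds waitWindow)
{
  std::atomic<bool> received{false};

  // Simulated asynchronous delivery on another thread.
  std::thread middleware([&received, deliveryLatency] {
    std::this_thread::sleep_for(deliveryLatency);
    received.store(true);
  });

  // The fragile part: an arbitrary delay, then a single check.
  std::this_thread::sleep_for(waitWindow);
  const bool seen = received.load();

  middleware.join();
  return seen;
}
```

The same code under test gives different answers depending only on the two durations. A window that is generous on a developer machine can be too short on a loaded CI runner, and enlarging it makes every test run slower.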

A new approach to ROS 2 testing: Introducing rtest

After facing the same testing issues across multiple robotics projects, we knew the community didn’t just need better workarounds. It needed a better foundation. So, we built one.

Rtest is a purpose-built framework that brings predictability, efficiency and reliability to ROS 2 unit testing, without requiring changes to your production code.

Targeted interception at the rclcpp level

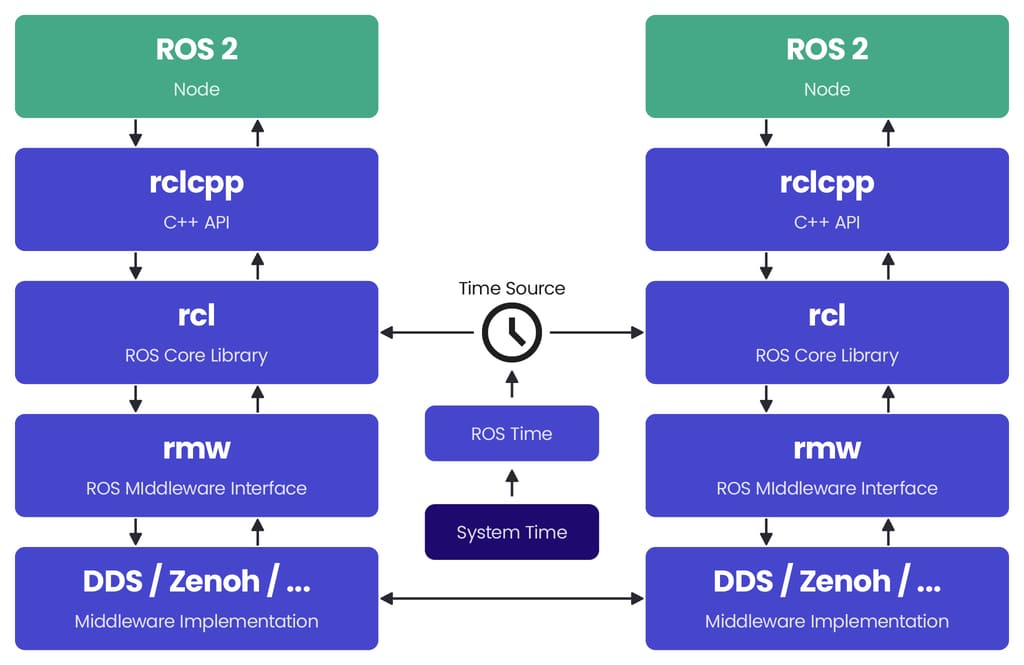

The key idea behind rtest is knowing exactly where to step in during ROS 2 communication. Instead of trying to control the entire middleware system, rtest intercepts communication at the rclcpp level. That’s the C++ client library that sits between your code and the lower-level ROS 2 layers. The diagram in our documentation illustrates the typical flow of ROS 2 communication, from your nodes down through rclcpp, rcl, rmw and finally to the communication layer implementation like DDS or Zenoh.

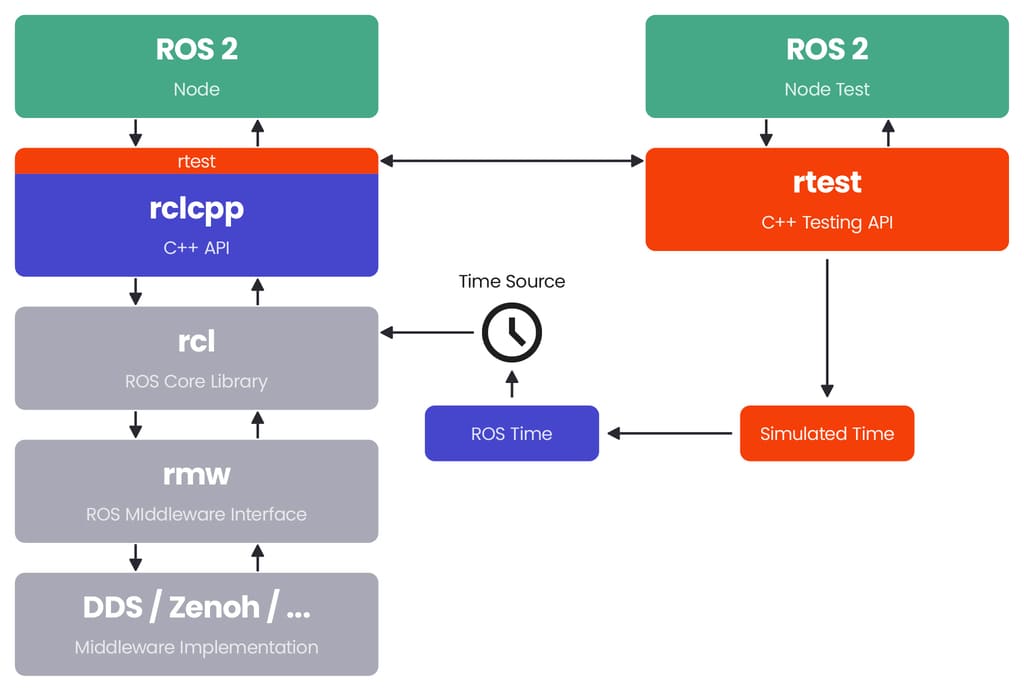

Mocking the rclcpp layer without modifying your code

The beauty of rtest’s approach is that it creates mock implementations at the rclcpp layer, effectively isolating your tests from all the unpredictable elements below. Your node code remains completely unchanged: it still creates publishers, subscribers, timers and services exactly as it would in production. But instead of these components relying on the full ROS 2 stack with all its timing complexities, they connect to rtest’s controlled mock implementations. By intercepting at the rclcpp level, we maintain full API compatibility while gaining complete control over the communication flow and the timing of message delivery.

Rtest comes as a complete library that includes a full framework for mocking all the important ROS 2 components:

• Subscribers

• Publishers

• Services

• Timers

• Clock

Everything you need is packaged together, so you don’t have to worry about the internal implementation details.

Substitution of ROS 2 APIs

When you include rtest in your test build, it uses compile-time substitution to redirect rclcpp’s component creation functions to rtest.

Your code continues to call the same create_publisher(), create_subscription() and create_service() methods, but they now return mock-enabled objects instead of standard ROS 2 components. Your production code remains completely unchanged: it still calls the same APIs and follows the same patterns.
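The general idea of compile-time substitution can be illustrated without ROS at all. The sketch below is not how rtest is implemented; it assumes a hypothetical `USE_TEST_DOUBLES` flag and hypothetical `Publisher` and `GreeterNode` classes, and only shows that the same "production" class can be compiled against a recording test double when the test build provides a different definition of the same type name.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical flag; a real build would define it only for the test target.
#define USE_TEST_DOUBLES 1

#if USE_TEST_DOUBLES
// Test double: records messages instead of sending them anywhere.
class Publisher
{
public:
  void publish(const std::string & msg) { sent.push_back(msg); }
  std::vector<std::string> sent;
};
#else
// Placeholder for the production type that would hand messages to the middleware.
class Publisher
{
public:
  void publish(const std::string &) { /* middleware call would go here */ }
};
#endif

// "Production" code: identical in both builds, unaware of which Publisher it gets.
class GreeterNode
{
public:
  GreeterNode() : publisher_(std::make_shared<Publisher>()) {}
  void greet() { publisher_->publish("hello"); }
  std::shared_ptr<Publisher> publisher() const { return publisher_; }

private:
  std::shared_ptr<Publisher> publisher_;
};
```

In this sketch the test reaches the double through a `publisher()` accessor purely to keep the example short. With rtest, the node needs no such accessor, because components are looked up through finder functions instead.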

Isolated testing with full control

Instead of routing through the unpredictable middleware layers, the components your node creates connect directly to rtest’s controlled mock implementations.

Your tests execute in a single-threaded environment, which means you can trace through complex state changes without worrying about race conditions or timing dependent behaviours. You can examine your node’s internal state at any point and verify expected outcomes with standard debugging tools. This deterministic execution model eliminates an entire class of debugging challenges that plague traditional ROS 2 testing.
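To make the single-threaded, deterministic model more concrete, here is a minimal sketch of a manually driven clock with timers. `ManualClock`, `addTimer` and `advance` are hypothetical names chosen for illustration and are not rtest’s API. The point is that time moves only when the test advances it, and callbacks run on the calling thread.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical manually driven clock: time moves only when the test says so.
class ManualClock
{
public:
  using Duration = std::chrono::milliseconds;

  // Register a periodic callback, similar in spirit to a wall timer.
  void addTimer(Duration period, std::function<void()> callback)
  {
    assert(period.count() > 0);  // a zero period would never stop firing
    timers_.push_back({period, now_ + period, std::move(callback)});
  }

  // Advance time and run every callback that became due, on the calling thread.
  void advance(Duration delta)
  {
    now_ += delta;
    for (auto & timer : timers_) {
      while (timer.nextDue <= now_) {
        timer.callback();
        timer.nextDue += timer.period;
      }
    }
  }

private:
  struct Timer
  {
    Duration period;
    Duration nextDue;
    std::function<void()> callback;
  };

  Duration now_{0};
  std::vector<Timer> timers_;
};
```

A test built on such a clock can register a 100 ms timer, advance by 99 ms and check that nothing fired, then advance by 1 ms and check that the callback ran exactly once. No sleeping is involved, so the outcome does not depend on machine load.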

We are currently focusing our efforts on supporting the latest ROS 2 distributions. Full compatibility with ROS 2 Jazzy is already available, and we are actively working on extending support to ROS 2 Kilted Kaiju (by the time you’re reading this, it may already be ready). Older distributions will be added incrementally to ensure consistent API behaviour across versions, even as the underlying rclcpp layers evolve. Our goal is to make test upgrades as seamless as possible, allowing developers to move to newer ROS 2 versions without spending hours adapting their test code.

CMake integration with zero friction

Rtest integrates seamlessly with the ROS 2 build system through CMake utilities that automatically create test versions of your existing libraries. The key part is integrating with your build system at the CMakeLists level in the BUILD_TESTING section.

The test_tools_add_doubles function generates mock-enabled versions of your components without duplicating source code or requiring separate build configurations. The function analyses your existing target’s source files and creates a parallel test target that links against rtest’s mock implementations. Your original production target remains completely unchanged, so there’s no risk of test code accidentally affecting production builds.

The build system integration also handles dependency management automatically. When you specify AMENT_DEPENDENCIES for your test doubles, the system ensures that all necessary ROS 2 packages are properly linked with their mock-enabled versions. This eliminates the tedious manual work of managing test-specific dependencies and reduces the chance of configuration errors that could lead to subtle test failures.

That means you can retrofit existing projects with comprehensive testing capabilities without restructuring your build configuration. Your production builds remain unchanged, while test builds automatically include the necessary mock implementations.

Writing tests with rtest

Once you set that up following our examples, you can write your own tests using the framework. Here’s a simple case from our examples that shows how easy it is to test a ROS 2 publisher for your node:

```cpp
TEST_F(PubSubTest, PublishIfSubscriptionCountNonZeroTest)
{
  /// Create and find a publisher instance
  auto node = std::make_shared<test_composition::Publisher>(opts);
  auto publisher = rtest::findPublisher<std_msgs::msg::String>(node, "/test_topic");

  /// Set subscription count to 1 to activate the successful publish logic
  publisher->setSubscriptionCount(1UL);

  /// Prepare the expected message that should be published
  auto expectedMsg = std_msgs::msg::String{};
  expectedMsg.set__data("if_subscribers_listening");

  /// Set up expectation that the Node will publish a message when the subscription count is 1
  EXPECT_CALL(*publisher, publish(expectedMsg)).Times(1);

  /// Trigger the publishing logic in the production node
  node->publishIfSubscribersListening();
}
```

This test shows the power of rtest in just a few lines. You create your node normally, then use rtest::findPublisher to interact with the publisher in tests. You can control things like subscription count, set up expectations for what messages should be published, and then test your node’s behavior directly.

Fast, predictable, and repeatable test execution

Traditional ROS 2 tests must account for message passing delays, service discovery times, and other middleware overhead.

Rtest tests execute orders of magnitude faster because they bypass all network communication and run in a controlled environment. No waiting for actual ROS communication, no timing issues, no randomness, just clean predictable testing that tells you exactly whether your code works as expected. Beyond speed, the reliability improvement fundamentally changes how teams approach testing. With traditional ROS 2 tests, developers often run tests multiple times to ensure they pass consistently, a practice that wastes time and erodes confidence.

With rtest, a test that passes once will pass every time under any conditions, allowing developers to trust their test results completely.

Uses familiar Google Test syntax

We support Google Test (gtest), so the testing syntax is what most C++ programmers already know. You don’t need to learn a completely new testing framework or convince your team to adopt unfamiliar tools. If you already know how to write EXPECT_CALL, ASSERT_TRUE, or TEST_F, you already know how to use rtest. This familiar approach removes one of the biggest barriers to adoption that many testing frameworks face.

Automatic component tracking via static registry

One of rtest’s most powerful features is its automatic component tracking through the Static Registry Pattern.

When your nodes create ROS components during initialisation, rtest automatically registers them in a centralised registry indexed by node name and topic or service name. This happens transparently without any configuration or setup code on your part. The registry implementation uses weak pointers to avoid creating circular dependencies or memory leaks. When components are destroyed, they’re automatically removed from the registry without requiring explicit cleanup code. This design ensures that the registry remains lightweight and doesn’t interfere with normal object lifecycle management.

During test execution, you can retrieve any component your node created using simple finder functions like findPublisher, findSubscription, or findService. The registry also provides introspection capabilities, allowing you to verify that your node created the expected components. You can check that a publisher was created for a specific topic, confirm service endpoints exist, or validate that timers were properly initialised.

The registry also supports more advanced scenarios, such as finding all components of a specific type created by a node, or iterating through all topics a node publishes to. This capability is particularly useful for integration tests that need to verify the complete interface of a complex node.
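The registry idea described in this section can be sketched in a few lines of standard C++. This is an illustration under assumptions, not rtest’s implementation: `MockPublisher` and `PublisherRegistry` are hypothetical types, and stale entries are dropped lazily on lookup here, whereas the text above describes removal when a component is destroyed.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical mock publisher type used only for this sketch.
struct MockPublisher
{
  std::string topic;
};

// Process-wide registry keyed by (node name, topic name).
class PublisherRegistry
{
public:
  static PublisherRegistry & instance()
  {
    static PublisherRegistry registry;
    return registry;
  }

  void add(const std::string & node, const std::string & topic,
           const std::shared_ptr<MockPublisher> & publisher)
  {
    entries_[{node, topic}] = publisher;  // stored as weak_ptr: no ownership taken
  }

  // Returns nullptr when nothing was registered or the publisher no longer exists.
  std::shared_ptr<MockPublisher> find(const std::string & node, const std::string & topic)
  {
    const auto it = entries_.find({node, topic});
    if (it == entries_.end()) {
      return nullptr;
    }
    auto publisher = it->second.lock();
    if (!publisher) {
      entries_.erase(it);  // lazily drop entries whose component was destroyed
    }
    return publisher;
  }

private:
  std::map<std::pair<std::string, std::string>, std::weak_ptr<MockPublisher>> entries_;
};
```

Because the registry holds only weak pointers, it never extends the lifetime of a component. Once the node releases its publisher, a lookup for the same key returns nullptr.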

More advanced example: service testing

ROS 2 services add another layer of complexity to testing due to their request-response nature and potential for network failures.

Rtest provides sophisticated mocking capabilities for both service providers and clients, allowing you to test various scenarios including service unavailability, timeout conditions, and different response patterns. For service clients, you can mock the underlying client behaviour to simulate network conditions, service failures or specific response patterns. For service providers you can directly inject requests and verify that your service logic produces the expected responses.

This level of control makes it possible to thoroughly test error handling, retry logic and edge cases that are difficult to reproduce in real network environments. If achieving high code coverage is a priority in your project, this approach is often the only reliable way to force certain testing scenarios that occur very rarely in typical runtime conditions.

Here’s an example of testing a service client with rtest:

```cpp
TEST_F(ServiceClientTest, WhenServiceCallSucceeds_ThenSetStateSucceeds)
{
  /// Create node
  auto node = std::make_shared<test_composition::ServiceClient>(opts);

  /// Retrieve the client created by the Node
  auto client = rtest::findServiceClient<std_srvs::srv::SetBool>(node, "/test_service");

  /// Check that the Node actually created the Client
  ASSERT_TRUE(client);

  /// Create successful response
  auto response = std::make_shared<std_srvs::srv::SetBool::Response>();
  response->success = true;
  response->message = "State updated successfully";

  /// Set up expectations
  EXPECT_CALL(*client, service_is_ready()).WillOnce(::testing::Return(true));

  /// Mock sending a service request asynchronously
  EXPECT_CALL(*client, async_send_request(::testing::_))
    .WillOnce([response](std::shared_ptr<std_srvs::srv::SetBool::Request>) {
      std::promise<std::shared_ptr<std_srvs::srv::SetBool::Response>> promise;
      promise.set_value(response);

      /// Return the simulated future response
      return rclcpp::ClientTypes<std_srvs::srv::SetBool>::FutureResponseAndId(
        promise.get_future(),
        1UL
      );
    });

  /// Call the method that triggers the service request and validate the behavior
  EXPECT_TRUE(node->setState(true));
  EXPECT_TRUE(node->getLastCallSuccess());
  EXPECT_EQ(node->getLastResponseMessage(), "State updated successfully");
}
```

This example shows how rtest enables precise control over service interactions, allowing you to test both successful and failure scenarios with complete predictability.
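The promise-based trick in the example above works because a future whose promise was already fulfilled returns immediately from get(). The standalone sketch below shows the same technique for both success and failure paths using only the standard library. `Response`, `readyResponse`, `failedResponse` and `callSucceeded` are hypothetical names for illustration and are not part of rtest or rclcpp.

```cpp
#include <cassert>
#include <exception>
#include <future>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical response type standing in for a generated service response.
struct Response
{
  bool success;
  std::string message;
};

// Future that already holds a response, so get() returns without waiting.
std::future<std::shared_ptr<Response>> readyResponse(bool success, std::string message)
{
  std::promise<std::shared_ptr<Response>> promise;
  promise.set_value(std::make_shared<Response>(Response{success, std::move(message)}));
  return promise.get_future();
}

// Future that already holds an error, to exercise the failure path.
std::future<std::shared_ptr<Response>> failedResponse(const std::string & reason)
{
  std::promise<std::shared_ptr<Response>> promise;
  promise.set_exception(std::make_exception_ptr(std::runtime_error(reason)));
  return promise.get_future();
}

// Hypothetical client-side handling: errors and rejections both count as failure.
bool callSucceeded(std::future<std::shared_ptr<Response>> future)
{
  try {
    return future.get()->success;
  } catch (const std::exception &) {
    return false;
  }
}
```

A mocked client could return either kind of future from its expectation, which lets a test drive rejection and error handling as deterministically as the success case.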

Benefits of rtest in your project

When you integrate rtest in your project, several transformations happen immediately.

Tests become truly deterministic: a test that passes once will pass every time, and a test that fails will fail every time, so real bugs are no longer hidden behind flaky results. This reliability creates a foundation of trust that enables faster, more confident development.

Developers no longer need to mentally account for test infrastructure unreliability when diagnosing failures. When a test fails it’s because of the code being tested, not because of timing issues or environmental factors. This clarity dramatically improves debugging efficiency and developer satisfaction.

The combination of faster test execution and reliable results enables more frequent testing, leading to earlier detection of issues and higher overall code quality. The framework also makes it practical to achieve high code coverage targets as edge cases and error conditions become easily testable.

Community and future

Rtest was developed as a collaboration between Spyrosoft and Beam.

We owe special thanks to Sławomir Cielepak, whose vision and technical expertise were instrumental in creating this solution. We’re also grateful to Guy Burroughes and Gary Cross for their invaluable support throughout the development process.

We invite you to experience the transformation for yourself.

Check out our GitHub repository and rtest documentation to start writing more reliable tests for your robotics applications. We welcome contributions from the community to extend and improve rtest.

Join us in making reliable testing a standard practice in the ROS 2 ecosystem!
