AI-powered quality assurance in production – post-delivery phase

Many teams assume that ‘delivery’ is the end goal. Once the release is approved and the system goes live, the quality assurance team steps aside. In truth, however, this is when quality faces its sternest test: real users, live data, unpredictable workloads and relentless change.

Modern systems evolve ceaselessly. Cloud-native architectures, frequent releases, infrastructure adjustments, configuration changes and third-party dependencies mean that production environments are constantly changing. In this landscape, it is impossible to guarantee quality through pre-release testing alone. Rather, it demands continuous monitoring, validation and refinement post-deployment.

Post-delivery quality assurance redefines the discipline, shifting the focus from identifying defects before release to preventing regressions, detecting anomalies early and maintaining stability over time. By integrating QA with DevOps, observability and incident management, it transforms quality into an ongoing pursuit rather than a one-off milestone. This is where AI can be transformative. By analysing large amounts of production data, test results and usage patterns, AI enables QA teams to work more efficiently: prioritising high-impact issues, identifying risks earlier and improving quality without a proportional increase in effort.

In this article, we will examine our approach to post-delivery quality assurance and the practices that underpin the long-term reliability of systems. We will also explore the ways in which AI can equip teams to sustain excellence long after launch.

Embedding quality into the post-release lifecycle

Post-delivery quality assurance is an ongoing process that focuses on maintaining system quality in live production conditions. Once a system is live, its quality must be validated based on real users, real data and real operational constraints rather than assumptions made during development.

Our approach positions QA as a long-term partner throughout the product lifecycle, working closely with the development and DevOps teams. The key objectives of post-delivery QA include:

- ensuring the functional stability of critical business workflows,

- maintaining non-functional quality, such as performance, reliability and scalability,

- preventing regressions introduced by frequent releases, configuration changes or infrastructure updates,

- validating incident fixes to eliminate root causes and reduce recurrence.

We implement a production-aware QA model which uses observability data from live environments, such as logs, metrics, traces and usage patterns. This allows QA teams to identify potential quality issues early on, based on actual system behaviour. They can also pinpoint discrepancies between expected and actual usage, and refine test coverage and priorities continuously.
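Observability data can show where test coverage and real usage diverge. The minimal Python sketch below compares how often each user journey appears in production with how many automated tests cover it. The journey names, event counts and the `min_share` threshold are hypothetical. In practice, the counts would come from whatever logging or analytics pipeline is already in place.

```python
from collections import Counter

def coverage_gaps(production_events, tests_per_journey, min_share=0.05):
    """Return journeys that carry at least `min_share` of production traffic
    but have no automated tests, most-used first."""
    counts = Counter(production_events)
    total = sum(counts.values())
    gaps = []
    for journey, n in counts.most_common():
        share = n / total
        if share >= min_share and tests_per_journey.get(journey, 0) == 0:
            gaps.append((journey, round(share, 3)))
    return gaps

# Hypothetical sample: journeys observed in production logs and current test counts
events = ["checkout"] * 50 + ["search"] * 30 + ["export_pdf"] * 15 + ["admin_audit"] * 5
tests = {"checkout": 42, "search": 12, "admin_audit": 3}

print(coverage_gaps(events, tests))
```

Here `export_pdf` accounts for 15% of observed traffic but has no tests, so it is the journey flagged for new coverage.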

Post-delivery QA is integrated into delivery and operational workflows. Regression testing and quality checks are carried out continuously and prioritised using a risk-based approach, focusing on components that have the greatest business impact and the highest probability of failure.
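A common way to express risk-based prioritisation is to score each component as business impact multiplied by probability of failure, then test the highest scores first. The sketch below makes two assumptions of our own, neither prescribed by the approach above:

- business impact is rated on a 1 to 5 scale;
- failure probability is estimated from hypothetical historical run counts.

```python
from dataclasses import dataclass

@dataclass
class Component:
    name: str
    business_impact: int   # assumed scale: 1 (low) to 5 (critical)
    recent_runs: int       # test runs in the observation window
    recent_failures: int   # failed runs in the same window

    def failure_probability(self) -> float:
        # Laplace smoothing so components with little history are not scored as 0
        return (self.recent_failures + 1) / (self.recent_runs + 2)

    def risk_score(self) -> float:
        # Risk = business impact x probability of failure
        return self.business_impact * self.failure_probability()

def prioritise(components):
    """Order components by descending risk score."""
    return sorted(components, key=lambda c: c.risk_score(), reverse=True)

components = [
    Component("payments", business_impact=5, recent_runs=98, recent_failures=8),
    Component("reporting", business_impact=2, recent_runs=48, recent_failures=10),
    Component("login", business_impact=5, recent_runs=198, recent_failures=1),
]
for c in prioritise(components):
    print(f"{c.name}: {c.risk_score():.3f}")
```

Note that `reporting` ranks above `login` despite its lower impact, because it fails far more often.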

In this model, quality is not a one-time release activity but an iterative, measurable process that promotes system stability, consistent operations and long-term business value.

Key practices that enable quality in production

Production monitoring & quality observability

In post-delivery QA, monitoring goes beyond just infrastructure health and uptime. The focus is on end-to-end quality observability, covering functional and non-functional aspects of the system under real operating conditions.

QA teams continuously analyse:

- functional signals, such as error rates, failed transactions and broken user journeys,

- performance indicators, including response times, throughput, latency distribution and resource utilisation,

- user behaviour patterns, which reveal how features are actually used and where friction occurs.

These signals allow QA teams to identify emerging issues before they escalate into incidents. Importantly, quality metrics go beyond basic availability. Quality KPIs include transaction success rates, degradation thresholds, error recurrence and performance consistency under load. This broader view enables teams to measure what truly impacts user experience and business outcomes, rather than just whether the system is running.
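Quality KPIs of this kind can be derived from ordinary request records. The sketch below computes two of them over a window and compares each with a degradation threshold:

- a transaction success rate;
- a nearest-rank 95th-percentile latency.

The record format and the thresholds (99% success, 800 ms p95) are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Request:
    ok: bool            # did the transaction complete successfully?
    latency_ms: float   # end-to-end response time

def percentile(values, pct):
    """Nearest-rank percentile, pct in the range 0-100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def quality_kpis(requests, min_success=0.99, max_p95_ms=800.0):
    """Compute two illustrative KPIs and list any degradation thresholds breached."""
    success_rate = sum(r.ok for r in requests) / len(requests)
    p95_ms = percentile([r.latency_ms for r in requests], 95)
    breaches = []
    if success_rate < min_success:
        breaches.append("success_rate")
    if p95_ms > max_p95_ms:
        breaches.append("p95_latency")
    return {"success_rate": success_rate, "p95_ms": p95_ms, "breaches": breaches}

# Hypothetical window: 100 requests, 2 failures, 6 slow responses
window = ([Request(True, 200.0)] * 92
          + [Request(False, 200.0)] * 2
          + [Request(True, 1200.0)] * 6)
print(quality_kpis(window))
```

The system here is "up" the whole time, yet both KPIs breach their thresholds. This is the gap between availability and quality that the paragraph above describes.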

Regression prevention

As systems evolve through frequent releases, configuration changes and infrastructure updates, the risk of regression becomes one of the primary threats to stability. Post-delivery QA focuses on continuous regression prevention rather than periodic regression testing.

This includes:

- executing regression tests automatically after deployments, hotfixes and environment changes;

- prioritising test coverage for business-critical workflows and high-risk components;

- adjusting regression scope dynamically based on change impact and historical failure data.
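Adjusting regression scope by change impact can start as simply as mapping components to the tests that exercise them. In this sketch, the mapping, the component names and the "always run" set for business-critical workflows are all hypothetical. Real pipelines often derive such a mapping from coverage data or build dependency graphs.

```python
def select_regression_tests(changed, test_map, critical, recently_failed):
    """Build a regression scope from change impact and history.

    changed         -- components touched by the deployment or config change
    test_map        -- component -> set of tests exercising it (assumed to exist)
    critical        -- tests for business-critical workflows, always run
    recently_failed -- tests that failed in recent runs, re-run as a precaution
    """
    selected = set(critical) | set(recently_failed)
    all_tests = set().union(*test_map.values()) | selected
    for component in changed:
        if component not in test_map:
            # Unknown impact: fall back to the full suite rather than guess
            return sorted(all_tests)
        selected |= test_map[component]
    return sorted(selected)

test_map = {
    "cart": {"test_add_to_cart", "test_cart_totals"},
    "pricing": {"test_cart_totals", "test_discount_rules"},
    "profile": {"test_update_email"},
}
scope = select_regression_tests(
    changed=["pricing"],
    test_map=test_map,
    critical={"test_checkout_happy_path"},
    recently_failed={"test_update_email"},
)
print(scope)
```

Falling back to the full suite for unmapped components is a deliberately conservative choice. It trades speed for safety whenever the impact of a change cannot be determined.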

By embedding regression checks into delivery pipelines, QA teams can help to reduce risk during incremental releases and emergency fixes. This approach enables organisations to act swiftly while maintaining confidence that existing functionality remains intact.

Incident validation & root cause analysis

Resolving an incident does not end with the deployment of a fix. Post-delivery QA is critical in validating that issues have been resolved and that similar failures are unlikely to recur.

Key responsibilities include:

- verifying fixes in production-like conditions;

- confirming that symptoms and root causes have both been addressed;

- ensuring that no regressions were introduced during incident resolution.
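Part of this validation can be automated by comparing a post-fix monitoring window with a pre-incident baseline. The sketch below makes three checks:

- whether the incident's error signature is gone;
- whether new error types have appeared;
- whether the overall error rate is back within a tolerance of the baseline.

The error signatures, window sizes and 20% tolerance are assumptions. Code like this provides evidence about symptoms and regressions only. Confirming the root cause still needs human analysis.

```python
from dataclasses import dataclass, field

@dataclass
class Window:
    """Traffic and errors observed over one monitoring window."""
    requests: int
    errors_by_signature: dict = field(default_factory=dict)

    def error_rate(self) -> float:
        return sum(self.errors_by_signature.values()) / self.requests

def validate_fix(baseline, post_fix, incident_signature, tolerance=1.2):
    """Compare a post-fix window with a pre-incident baseline.
    Returns a list of findings; an empty list means no evidence the fix failed."""
    findings = []
    # Symptom check: the error that defined the incident should be gone
    if post_fix.errors_by_signature.get(incident_signature, 0) > 0:
        findings.append("incident signature still present")
    # Regression check: error types not seen before the incident
    new = set(post_fix.errors_by_signature) - set(baseline.errors_by_signature) - {incident_signature}
    if new:
        findings.append(f"new error signatures: {sorted(new)}")
    # Overall health: error rate within tolerance of the baseline
    if post_fix.error_rate() > baseline.error_rate() * tolerance:
        findings.append("overall error rate above baseline")
    return findings

# Hypothetical monitoring windows
baseline = Window(100_000, {"ValidationError": 40, "TimeoutError": 10})
healthy = Window(100_000, {"ValidationError": 38, "TimeoutError": 9})
regressed = Window(100_000, {"PaymentGatewayTimeout": 3, "ValidationError": 40, "KeyError": 200})
print(validate_fix(baseline, healthy, "PaymentGatewayTimeout"))
print(validate_fix(baseline, regressed, "PaymentGatewayTimeout"))
```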

QA teams also contribute to root cause analysis by correlating incident data with test coverage, recent changes and production signals. Lessons learned from incidents are fed back into test scenarios, monitoring rules and quality metrics. This learning loop transforms production issues into long-term quality improvements rather than repeated operational problems.
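Correlating an incident with recent changes often begins with a simple question: what was deployed or reconfigured shortly before the symptoms started? The sketch below lists changes within a look-back window before the incident began, newest first. The change records, timestamps and two-hour window are hypothetical. A match marks a candidate for investigation, not a proven cause.

```python
from datetime import datetime, timedelta

def suspect_changes(changes, incident_start, lookback=timedelta(hours=2)):
    """Return changes applied within `lookback` before the incident, newest first."""
    window_start = incident_start - lookback
    candidates = [c for c in changes if window_start <= c["at"] <= incident_start]
    return sorted(candidates, key=lambda c: c["at"], reverse=True)

# Hypothetical change log
changes = [
    {"id": "deploy-api-1.8.2", "at": datetime(2024, 5, 6, 9, 15)},
    {"id": "config-cache-ttl", "at": datetime(2024, 5, 6, 13, 40)},
    {"id": "deploy-web-3.1.0", "at": datetime(2024, 5, 6, 14, 5)},
]
incident = datetime(2024, 5, 6, 14, 30)
for c in suspect_changes(changes, incident):
    print(c["id"], c["at"].isoformat())
```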

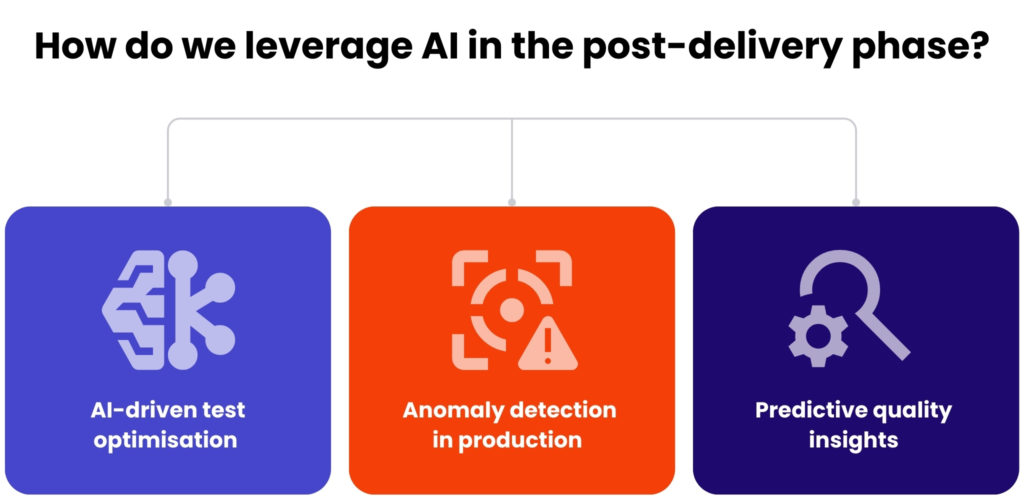

Applying AI to scale post-delivery QA

Post-delivery quality assurance generates large volumes of data, including test results, deployment logs, production metrics and user behaviour signals. At this scale, manual analysis and static QA strategies quickly become ineffective. AI enables QA teams to process this data effectively, prioritise their efforts and respond to risks more quickly without increasing operational overheads.

Rather than replacing established QA practices, we treat AI as a facilitator, enhancing decision-making, focus and scalability across post-delivery quality activities.

AI-driven test optimisation

In post-delivery environments, it is rarely practical to run full regression suites after every change. Systems evolve continuously, so QA efforts should focus on areas of real risk rather than static coverage. AI can support intelligent test optimisation by linking test execution to the impact of changes and actual production usage.

By analysing code changes, historical test results and usage patterns, AI can prioritise the most relevant tests and reduce the number of low-value executions. As the system evolves, regression suites adapt, enabling teams to shorten feedback cycles, maintain confidence in releases and protect critical functionality without slowing delivery.
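The sketch below shows the shape of such prioritisation. Each test is scored on three signals, then the highest-scoring tests are picked greedily within a time budget:

- overlap with the changed files;
- historical failure rate;
- production usage of the flow it covers.

The weights are hand-tuned placeholders standing in for a trained model. The test names, durations and budget are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class RegressionCase:
    name: str
    covers: frozenset       # files the test exercises
    failure_rate: float     # historical share of failed runs, 0..1
    usage_weight: float     # share of production traffic on the covered flow, 0..1
    duration_s: float

def score(case, changed):
    # Placeholder weights; a learned model would replace this function (assumption)
    overlap = len(case.covers & changed) / max(1, len(case.covers))
    return 0.5 * overlap + 0.3 * case.failure_rate + 0.2 * case.usage_weight

def plan(cases, changed, budget_s):
    """Greedily pick the highest-scoring tests that fit within the time budget."""
    chosen, used = [], 0.0
    for case in sorted(cases, key=lambda c: score(c, changed), reverse=True):
        if used + case.duration_s <= budget_s:
            chosen.append(case.name)
            used += case.duration_s
    return chosen

changed = frozenset({"pricing.py"})
cases = [
    RegressionCase("test_discount_rules", frozenset({"pricing.py"}), 0.10, 0.30, 40),
    RegressionCase("test_checkout_flow", frozenset({"cart.py", "pricing.py"}), 0.05, 0.60, 120),
    RegressionCase("test_profile_edit", frozenset({"profile.py"}), 0.02, 0.05, 30),
    RegressionCase("test_legacy_export", frozenset({"export.py"}), 0.40, 0.01, 90),
]
print(plan(cases, changed, budget_s=180))
```

A greedy pass is simple and predictable. It can skip a longer, higher-ranked test and still fit a shorter, lower-ranked one when budget remains.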

Anomaly detection in production

Traditional monitoring relies on predefined thresholds and known failure patterns, which can be limiting in complex systems. AI-based anomaly detection learns normal system behaviour and identifies deviations that may signal emerging quality issues.

By continuously analysing production logs, metrics and traces, AI can detect early signs of degradation before they affect users. At the same time, it filters out operational noise, enabling QA and operations teams to respond more quickly and proactively to potential problems.
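To illustrate the principle of learning normal behaviour and flagging deviations, here is a rolling z-score detector. It is deliberately simple and is not the method used by any particular AI product. Production-grade detectors typically model seasonality and correlate several signals. The window size, threshold and sample latency series are assumptions.

```python
import statistics
from collections import deque

def detect_anomalies(values, window=20, threshold=3.0, min_std=1e-9):
    """Flag indices whose value deviates from the rolling baseline of the
    previous `window` accepted points by more than `threshold` standard deviations."""
    history = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = max(statistics.pstdev(history), min_std)  # avoid division by zero
            if abs(v - mean) / std > threshold:
                anomalies.append(i)
                continue  # keep anomalous points out of the learned baseline
        history.append(v)
    return anomalies

# Hypothetical latency samples (ms) with one spike at index 25
series = [100, 102, 98, 101, 99] * 6
series[25] = 180
print(detect_anomalies(series))
```

Excluding flagged points from the baseline stops a single spike from inflating the learned variance. The trade-off is that a genuine, lasting shift keeps being flagged until the baseline is reset.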

Predictive quality insights

AI also enables predictive quality analysis by identifying patterns in historical test and production data. This allows teams to anticipate where failures are most likely to occur after changes.

Predictive insights help QA teams focus testing and maintenance efforts on high-risk components before issues reach production. This shift from reactive to proactive quality management reduces operational risk, improves system stability, and lowers long-term maintenance costs.
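One common way to turn historical data into a failure likelihood is logistic regression. The sketch below trains one with plain gradient descent on hypothetical release records, using two features:

- lines changed, in hundreds;
- defects found in the previous 90 days.

It then ranks upcoming changes by predicted risk. The features, labels and hyperparameters are illustrative only. A real model would need richer data and proper validation.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, x):
    """Estimated probability that a change to this component leads to a defect."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + w[-1]   # last weight is the bias
    return sigmoid(z)

def train_logistic(X, y, lr=0.3, epochs=3000):
    """Fit logistic-regression weights by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * (n_features + 1)
    for _ in range(epochs):
        grad = [0.0] * (n_features + 1)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi      # d(log-loss)/dz for one sample
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w

# Hypothetical history: [lines changed in hundreds, defects in previous 90 days]
X = [[0.2, 0], [0.5, 0], [1.0, 1], [3.0, 2], [4.0, 3], [0.3, 1], [2.5, 2], [0.1, 0]]
y = [0, 0, 0, 1, 1, 0, 1, 0]   # 1 = production defect after the release

w = train_logistic(X, y)
upcoming = {"billing": [3.5, 2], "search": [0.2, 0], "profile": [1.2, 1]}
ranked = sorted(upcoming, key=lambda c: predict(w, upcoming[c]), reverse=True)
for name in ranked:
    print(f"{name}: {predict(w, upcoming[name]):.2f}")
```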

Combining AI capabilities with human judgement

Beyond optimising testing and detecting anomalies, AI also supports test maintenance and knowledge management in post-delivery QA. It can automatically identify unreliable or obsolete tests, suggest test updates following code changes and map test coverage to critical business processes. AI can also streamline documentation, keep QA knowledge bases up to date and accelerate onboarding for new team members. This helps quality practices remain consistent and scalable as systems evolve.
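A common signal for identifying unreliable tests is inconsistent outcomes on identical code. The rule-based sketch below flags a test as flaky if it both passed and failed on the same commit. It is a plain heuristic, not a machine-learning model, but it is the kind of input an AI-assisted tool might build on. The run records and commit identifiers are hypothetical.

```python
from collections import defaultdict

def find_flaky_tests(runs):
    """runs: iterable of (test_name, commit_sha, passed) tuples.
    A test is flagged if it both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})

# Hypothetical CI history
runs = [
    ("test_login", "a1b2c3", True),
    ("test_login", "a1b2c3", False),   # same commit, different outcome
    ("test_search", "a1b2c3", False),
    ("test_search", "d4e5f6", True),   # different commits: not evidence of flakiness
    ("test_export", "d4e5f6", True),
]
print(find_flaky_tests(runs))
```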

However, AI is a tool, not a replacement for human expertise. QA engineers remain essential for interpreting AI insights, defining test strategy, assessing risks and evaluating business impact. They exercise judgement in exploratory and edge-case testing, making decisions that balance technical feasibility, user experience and operational priorities.

By combining the speed and data-driven capabilities of AI with human insight and contextual understanding, teams can achieve smarter, more resilient post-delivery QA, where quality is continuously maintained, scaled and improved.

Over to you

Post-delivery QA is a continuous process that ensures software remains reliable and high-performing long after its release. By combining production-aware practices with AI-driven test optimisation, anomaly detection and predictive insights, as well as human expertise, teams can prevent regressions and respond proactively to issues. This approach reduces operational risk, improves system stability and enables organisations to scale quality efficiently. Integrating AI into post-delivery QA enables teams to work smarter, focusing on critical workflows and user experience.

Get in touch with our experts to find out how AI-enhanced post-delivery QA could benefit your business.

Why does quality assurance continue after go-live?

The go-live stage marks the beginning of real-world validation, not the end of quality assurance. Production environments introduce real users, live data, unpredictable workloads and constant changes. Post-delivery QA helps to ensure ongoing stability and prevent regressions, while maintaining performance as the system evolves.

How does AI support post-delivery QA?

AI analyses large volumes of production data, test results and usage patterns in order to identify risks and prioritise areas of high impact. It facilitates intelligent test optimisation, anomaly detection and predictive analysis. This allows QA teams to respond more quickly, focus on the most important issues and scale their quality efforts without adding to their overheads.

Does AI replace QA engineers?

No, AI enhances QA; it does not replace human expertise. It is up to QA engineers to interpret AI-driven insights, assess business impact and make strategic decisions. Combining data-driven automation with human judgement creates a more resilient and proactive quality model.

What are the business benefits of AI-powered QA?

AI-powered QA reduces operational risk by identifying anomalies at an early stage and preventing regressions. It improves system stability and performance through continuous monitoring and adaptive testing. Over time, it can reduce maintenance costs while enabling faster and safer releases.
