Optimising the QA delivery phase: How AI enhances speed, accuracy & collaboration

Drawing on our experience of leading QA teams on multiple software delivery projects, we’ve seen the delivery phase evolve from a simple handover stage to a critical part of the development lifecycle that defines the success of a software release. Modern delivery models embed quality into every step of the process, allowing software to reach users faster without compromising stability or performance.

In this article, we’ll explore how AI can be effectively integrated into the QA delivery phase, from optimising test coverage and streamlining monitoring processes to improving team collaboration, and examine how these practices are helping organisations deliver higher-quality software more quickly.

Delivery as the core of quality execution

During delivery, several QA processes and practices become absolutely essential to maintain product quality while keeping pace with rapid development:

- Continuous validation of requirements – ensures that the product meets both documented specifications and the evolving business context, preventing fast-moving changes from distorting intended functionality.

- Robust regression testing routine – supported by test automation to quickly detect unintended side effects from frequent code updates.

- Stable and well-defined test environments – prevents environment drift, configuration inconsistencies, or deployment errors from masking real defects or generating false alarms.

- Close collaboration between QA, developers, and DevOps – facilitates immediate clarification, quick triage, and early defect detection before issues escalate further down the pipeline.

- Risk-based testing approach – focuses QA efforts on areas that bring the highest value when time is limited during delivery.

Applied together, these practices form a foundational set for effective QA during delivery, providing high quality without slowing down development.
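The risk-based approach can be illustrated with a minimal sketch that ranks modules by a weighted score combining recent code churn with historical defect counts. The `Module` fields, the equal weights and the max-normalisation are illustrative assumptions, not a prescribed formula; an AI-assisted tool may learn such weightings from data instead of fixing them by hand.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    lines_changed: int  # churn in the current change set (hypothetical metric)
    past_defects: int   # defects historically attributed to this module


def prioritise(modules, churn_weight=0.5, defect_weight=0.5):
    """Return modules sorted by a normalised risk score, highest risk first."""
    # Normalise each signal by its maximum; "or 1" avoids division by zero.
    max_churn = max((m.lines_changed for m in modules), default=0) or 1
    max_defects = max((m.past_defects for m in modules), default=0) or 1

    def score(m):
        return (churn_weight * m.lines_changed / max_churn
                + defect_weight * m.past_defects / max_defects)

    return sorted(modules, key=score, reverse=True)
```

With modules `Module("tariffs", 400, 2)`, `Module("validation", 120, 9)` and `Module("reporting", 10, 1)`, the heavily changed but rarely defective `tariffs` module ranks just below `validation`, whose defect history outweighs its smaller change set.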

How do we leverage artificial intelligence in the QA delivery phase?

One of our recent projects involved developing an energy audit estimation tool designed to quickly provide approximate assessments of a building’s annual energy demand. Traditionally, a full audit requires a specialist to carry out an on-site analysis of construction details such as wall types, thermal bridges, window parameters and insulation layers. In contrast, the estimation tool enables users to input just a few key attributes: the building’s construction year, dates of window or door replacements, insulation information, and several high-level characteristics. The system then calculates an estimated energy demand with an accuracy of a few percentage points. What would take an auditor hours or days can be completed by the system in under 3 minutes.

As such assessments can be integrated with tools used by banks and financial institutions for mortgage decisions, renovation loans or certification requirements, quality and reliability were essential.

AI played a significant role in accelerating the QA and development work on this project. The API behind the tool processes between 60 and 80 input fields, many of which have complex conditional dependencies – entering one value may require another, omitting one may disable subsequent options, and validation rules are non-linear. In practice, manually preparing the correct combinations of inputs for testing was time-consuming and error-prone. However, using AI tools, the team was able to automatically generate valid input datasets, create automation scripts, and run structured test scenarios that covered these dependencies.
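The kind of conditional dependency described above can be made concrete with a minimal sketch. The field names, value sets and rules below are hypothetical stand-ins, not the project’s actual API. The sketch enumerates every combination of a few fields and keeps only those that satisfy the dependency rules, which yields a small, deterministic reference set against which AI-generated datasets could be checked.

```python
from itertools import product

# Hypothetical, heavily simplified subset of the estimation API's inputs.
FIELD_VALUES = {
    "construction_year": [1950, 1985, 2010],
    "windows_replaced": [True, False],
    "window_replacement_year": [None, 1990, 2015],
    "insulation_type": ["none", "mineral_wool", "eps"],
    "insulation_thickness_cm": [None, 10, 20],
}


def is_valid(case):
    """Return True if a candidate input satisfies the (hypothetical) dependency rules."""
    replacement_year = case["window_replacement_year"]
    # Rule 1: a replacement year is required if and only if windows were replaced.
    if case["windows_replaced"] != (replacement_year is not None):
        return False
    # Rule 2: windows cannot be replaced before the building was constructed.
    if replacement_year is not None and replacement_year < case["construction_year"]:
        return False
    # Rule 3: "none" disables the thickness field; any other type requires it.
    if (case["insulation_type"] == "none") != (case["insulation_thickness_cm"] is None):
        return False
    return True


def generate_valid_cases(field_values):
    """Enumerate the Cartesian product of field values, yielding only valid combinations."""
    names = list(field_values)
    for values in product(*(field_values[n] for n in names)):
        case = dict(zip(names, values))
        if is_valid(case):
            yield case
```

Exhaustive enumeration grows multiplicatively with each field: these five fields already produce 162 raw combinations, of which 40 are valid. With 60 to 80 fields it is only practical for small subsets, which is where sampling, pairwise techniques or AI-assisted generation become necessary.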

The project demonstrates how AI can accelerate testing and implementation during the delivery phase, especially in systems with extensive validation logic, while careful oversight is still required to ensure accuracy, consistency, and compliance with business rules.

What are the biggest challenges when implementing AI in QA at the delivery stage?

Data & code security

When developers or testers send code, configurations, logs or realistic test data to AI platforms, it is often unclear where that data is stored, how it is processed and what long-term risks it carries. Even with assurances from vendors, clients in regulated sectors such as finance, insurance, and public services may remain cautious. Clear guidelines, secure AI tooling and responsible AI use are essential for maintaining trust. Without these, AI capabilities may be limited or even unusable due to confidentiality concerns.
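As one example of a practical guideline, sensitive values can be masked before logs or test data are shared with an external AI tool. The minimal sketch below assumes three simplified, illustrative patterns (e-mail addresses, IBAN-like account numbers and key/value secrets). Regex-based masking will miss data it has no pattern for, so a real redaction policy would need to be agreed with the organisation’s security and compliance teams.

```python
import re

# Illustrative patterns only; a real policy would cover far more data types.
REDACTION_RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<EMAIL>"),
    (re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"), "<IBAN>"),
    # Keep the key name (group 1) but mask the value that follows it.
    (re.compile(r"(?i)(api[_-]?key|token|password)\s*[:=]\s*\S+"), r"\1=<SECRET>"),
]


def redact(text):
    """Replace sensitive substrings before text leaves the organisation's boundary."""
    for pattern, replacement in REDACTION_RULES:
        text = pattern.sub(replacement, text)
    return text
```

For example, `redact("user jan.kowalski@example.com failed, password=hunter2")` returns `"user <EMAIL> failed, password=<SECRET>"`.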

The quality of AI-generated outputs

Although AI can accelerate test creation, analysis and automation, the quality of the output is not guaranteed. It may produce data containing errors or unsupported fields, misinterpret APIs or alter business logic subtly. Therefore, every AI-generated artefact must be carefully validated, particularly when working to tight deadlines, as teams may be tempted to rely too heavily on the convenience of AI.
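A lightweight contract check can catch the most mechanical of these problems, such as unsupported fields, missing required fields or values of the wrong type, before a human reviewer looks at the business logic. The schema, field names and required set below are hypothetical; in practice they would come from the API’s own specification.

```python
# Hypothetical contract for a subset of the API; field names are illustrative.
SCHEMA = {
    "construction_year": int,
    "windows_replaced": bool,
    "window_replacement_year": int,
    "insulation_type": str,
}
REQUIRED = {"construction_year", "windows_replaced", "insulation_type"}


def review_payload(payload):
    """Return a list of human-readable problems found in an AI-generated payload."""
    problems = []
    for field in sorted(set(payload) - set(SCHEMA)):
        problems.append(f"unsupported field: {field}")
    # Treat explicit nulls as absent when checking required fields.
    present = {k for k, v in payload.items() if v is not None}
    for field in sorted(REQUIRED - present):
        problems.append(f"missing required field: {field}")
    for field, expected in SCHEMA.items():
        value = payload.get(field)
        if value is None:
            continue  # absent or null values were handled by the required-field check
        # bool is a subclass of int in Python, so reject True/False for int fields.
        wrong_bool = expected is int and isinstance(value, bool)
        if wrong_bool or not isinstance(value, expected):
            problems.append(f"{field}: expected {expected.__name__}, got {type(value).__name__}")
    return problems
```

A check like this only confirms the shape of an artefact; whether the values respect business rules still needs the kind of validation described above.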

Shift from implementation to validation

Artificial intelligence shifts the focus of QA from creating tests to validating artefacts. Verification can be as time-consuming as manual creation, especially when AI introduces ambiguities. QA specialists must review AI-generated data, scripts and scenarios to ensure they are accurate and aligned with business rules. While this shift changes workflows and the required skills, it does not necessarily reduce the workload.

Risks associated with quality during the delivery

The delivery phase is one of the most critical moments in the entire software lifecycle. It’s the point where functionality is finalised, environments shift rapidly and the pressure to provide stable, production-ready increments is at its highest. What we’re seeing today is a blend of traditional delivery risks and a new layer of challenges introduced by AI-augmented development, all of which require far more rigorous oversight and validation.

Krzysztof Wezowski, Principal Java Consultant

Risk of requirement misinterpretation

One of the most common risks during the delivery phase is misalignment between the client’s expectations and the development team’s final delivery. This misalignment can be caused by ambiguous or incomplete requirements, changes introduced late in the cycle, assumptions made by developers or testers, or a lack of ongoing validation with stakeholders. Even if a feature is implemented correctly from a technical standpoint, it may fail to deliver the intended business value if the underlying context is not fully understood.

Although Agile practices such as frequent client touchpoints, iterative reviews and continuous clarification help to reduce this risk, they cannot eliminate it entirely, especially during the delivery phase when changes happen quickly and there is a lot of time pressure. In this environment, QA plays a crucial role as an additional layer of business validation, ensuring that the final product aligns not only with the written documentation but also with the real expectations and needs of end users and the business.

Performance and scalability risks

Performance issues are another significant risk during the delivery phase. A feature may function properly in isolated tests yet malfunction under real load, degrade when interacting with other services, or behave unpredictably as data volumes increase. These risks often emerge late due to deprioritisation of performance testing, differences between test and production environments, and last-minute architectural and configuration changes.

AI-driven quality engineering can significantly improve control over these risks. Machine learning models can analyse historical test results, production telemetry, and usage patterns to identify components that are most likely to become bottlenecks under load. AI can also support intelligent test generation by simulating realistic traffic patterns and data growth scenarios, which are difficult to reproduce manually. By continuously analysing performance metrics and detecting anomalies early on, AI enables QA teams to validate not only functional correctness but also system responsiveness, stability, and scalability throughout the delivery cycle, thereby reducing the risk of late surprises or production incidents.
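The idea of detecting performance anomalies early can be illustrated with a rolling z-score over latency samples. This is a deliberately simple statistical stand-in rather than a machine learning model, and the window size and threshold are assumptions that would need tuning for each system.

```python
from statistics import mean, stdev


def find_latency_anomalies(samples_ms, window=20, threshold=3.0):
    """Return indices of samples that deviate strongly from the preceding window."""
    if window < 2:
        raise ValueError("window must contain at least two samples")
    anomalies = []
    for i in range(window, len(samples_ms)):
        history = samples_ms[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if samples_ms[i] != mu:
                anomalies.append(i)
            continue
        z = (samples_ms[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies
```

For a series that alternates between 100 ms and 102 ms with a single 180 ms spike at index 30, only index 30 is flagged; the spike widens the window’s standard deviation afterwards, so the normal samples that follow it are not reported.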

Deployment and environment quality risks

Many defects that occur during the delivery stage do not originate from the code itself, but from the deployment process and the condition of the environments. Issues such as deploying the wrong version, applying incorrect or outdated configurations, or experiencing environment drift can cause the system to behave differently to how it was validated by QA earlier. Partial deployments, inconsistent infrastructure or mismatched dependencies can further complicate testing and lead to unpredictable system behaviour. These problems often remain hidden until the post-deployment state is verified by QA. Validating the quality of deployments therefore becomes a critical task in the delivery phase, ensuring that the environment aligns with expectations and that the product functions reliably in its intended context.
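Post-deployment verification can start with a plain comparison between what was meant to be deployed and what the environment actually reports. In the sketch below, the manifest keys are hypothetical, and how the observed values are collected (for example from a version or health endpoint) is assumed and left out.

```python
MISSING = "<missing>"  # marker for keys present on only one side


def find_drift(expected, observed):
    """Return {key: (expected, observed)} for every setting that differs."""
    drift = {}
    for key in sorted(set(expected) | set(observed)):
        want = expected.get(key, MISSING)
        got = observed.get(key, MISSING)
        if want != got:
            drift[key] = (want, got)
    return drift
```

Comparing `{"service_version": "2.4.1", "db_schema_version": 37}` with an environment reporting `{"service_version": "2.4.0", "db_schema_version": 37, "feature_flag.beta": True}` flags both the wrong version and the unexpected feature flag.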

AI-related quality risks

The growing use of AI in development and testing introduces new risks during the delivery phase. AI-generated artefacts, such as test data, payloads or even code, may appear correct at first, yet contain subtle logical inconsistencies or incorrect assumptions. The models behind them can invent fields, misinterpret schemas or apply business rules inconsistently, and such issues are difficult to detect through standard functional checks. Defects of this kind often surface only after several testing cycles or when multiple components interact.

At the same time, AI can be leveraged to control these risks more effectively when applied in a structured way. Automated validation mechanisms supported by AI can cross-check generated artefacts against schemas, contracts and historical patterns, highlighting anomalies and inconsistencies early on. AI-assisted review and traceability tools can also identify instances where generated outputs deviate from expected business rules or system behaviour. When combined with clear governance, human oversight and defined acceptance criteria, these approaches enable teams to benefit from AI-driven acceleration while maintaining predictability, quality and control throughout the delivery phase.
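Cross-checking generated artefacts against historical patterns can begin with something as simple as a range check: a generated numeric value that falls outside everything seen in historical data is worth a second look. The field names and history below are hypothetical, and a range check is a crude stand-in for the AI-assisted anomaly detection described above; it will not catch values that are plausible in isolation but break a business rule.

```python
def flag_out_of_range(generated, history):
    """Flag numeric fields whose generated value lies outside the historically observed range."""
    flags = []
    for field, value in generated.items():
        observed = history.get(field)
        # Skip fields without history and non-numeric values (bool is excluded explicitly).
        if not observed or isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        low, high = min(observed), max(observed)
        if not low <= value <= high:
            flags.append(f"{field}={value} outside historical range [{low}, {high}]")
    return flags
```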

Wrapping up

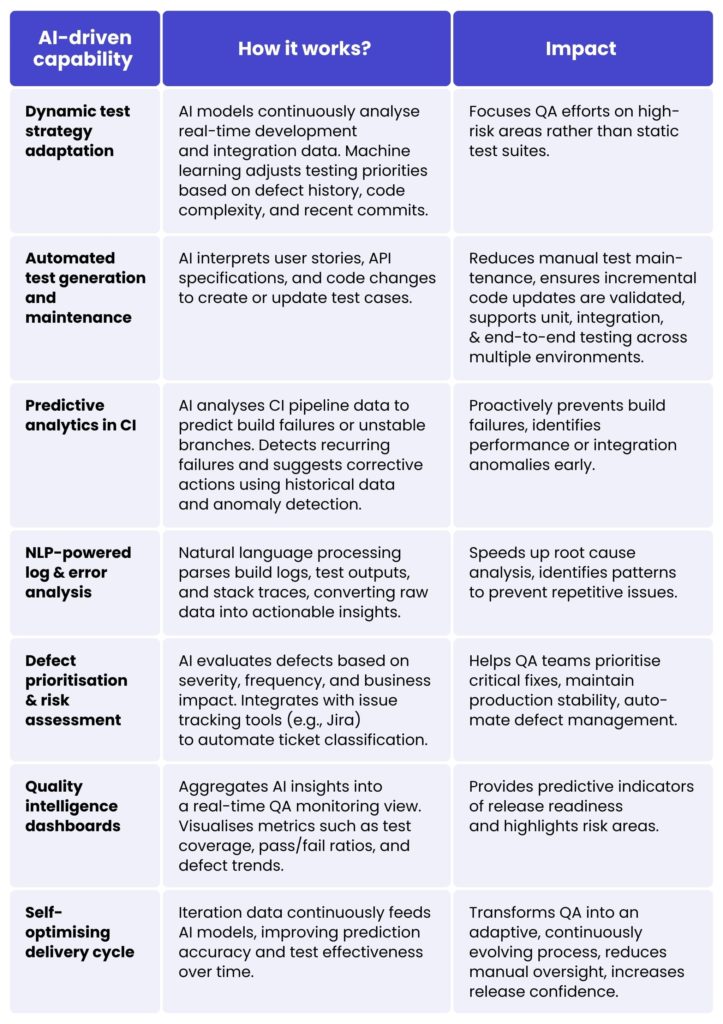

As software systems become more complex and release cycles accelerate, organisations must strike a balance between speed and uncompromising quality. Artificial intelligence offers powerful opportunities to streamline QA activities, such as test generation and maintenance, log analysis, failure prediction and cross-team collaboration support. However, AI is not a replacement for expertise. When paired with rigorous validation and well-structured delivery processes, it enables teams to release software faster while making it significantly more resilient.

Ready to optimise your QA delivery with AI?

If you’re looking to enhance your delivery processes, improve test efficiency or build a more resilient software pipeline, our specialists can help. We combine deep QA expertise with hands-on experience implementing AI-augmented delivery models across various industries.

Get in touch with our Managed Services experts to explore how we can support your organisation in building faster, safer and smarter delivery workflows.

AI can accelerate repetitive tasks such as test generation, log analysis and regression testing, allowing teams to focus on more complex areas. It can also adjust testing priorities automatically, steering effort towards the areas of highest risk by analysing code changes and historical defect patterns. The result is faster validation cycles and quicker release readiness.

While AI is a powerful enabler, it is not a replacement for QA expertise. Although AI can automate large parts of the testing and analysis process, the results still require careful human validation to avoid incorrect assumptions or logical inconsistencies. The best results are achieved by combining AI tools with the oversight of experienced specialists, who ensure accuracy and compliance with business rules.

Although AI-generated artefacts may appear correct at first, they can contain subtle errors, unsupported fields or misinterpreted logic. Without proper governance, these errors can introduce new defects, particularly in complex systems with conditional dependencies. Therefore, ensuring rigorous validation and clear quality guidelines is essential to prevent AI from compromising release stability.

AI tools can provide real-time insights into code quality, continuous integration pipeline trends and defect patterns, so that all teams work from the same up-to-date information. This reduces the time spent on manual triage and speeds up communication about potential issues. As a result, cross-team alignment improves and defects are resolved earlier in the delivery cycle.
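One concrete insight of this kind is spotting flaky tests in CI history: a test that both passes and fails on the same commit is a likely flakiness candidate rather than a genuine regression, and flagging it early saves triage time. The sketch assumes CI results exported as `(commit, test, passed)` tuples, which is a hypothetical format rather than that of any particular CI system.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)  # (commit, test) -> set of observed pass/fail results
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(passed)
    return sorted({test for (_commit, test), seen in outcomes.items() if len(seen) == 2})
```

A test that fails consistently on one commit and passes on a later one is not reported, since that pattern is more consistent with a real defect being fixed.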

AI is particularly effective in systems where the number of input combinations, business rules or dependencies is too large to test manually. It can automatically generate valid datasets, create automation scripts and help identify issues that traditional methods might overlook. The result is broader test coverage, fewer errors and faster progression through the delivery phase.
