Multimodal HMI solutions: combining touch and voice with AI

Multimodal HMI describes a system that allows users to interact through more than one input or output channel, typically touch and voice. Instead of limiting control to physical buttons or touchscreens, multimodal interfaces enable speech, gesture and touch to work together, letting people communicate in the way that feels most natural at any given moment.

Touch remains unmatched for precision and direct manipulation, while voice provides freedom and accessibility. When combined, they balance each other’s limitations and create interactions that are intuitive, personalised and safer, particularly in contexts where attention and hands are already occupied.

In vehicles, this combination enhances both usability and safety. A driver can say, “Navigate to the nearest charger” or “Set temperature to 22 degrees” while keeping their eyes on the road, then make fine adjustments by touch when stopped. This flexible, adaptive interaction defines a new standard in user experience.

Digital assistants such as Alexa, Siri and Google Assistant have trained users to expect natural voice interaction, and that expectation now extends to vehicles and industrial systems.

For us at Spyrosoft Synergy, multimodal HMI represents the logical next step in creating interfaces that truly adapt to human needs rather than forcing users to adapt to technology.

Why AI and LLMs are changing how we interact

Voice interfaces have existed for decades, but until recently they were rule-based and rigid.

They recognised only predefined commands and failed when phrasing changed. Artificial intelligence has completely altered that landscape.

Modern systems powered by Large Language Models (LLMs) can interpret intent, context and emotion. They understand variations in phrasing, even slang or incomplete sentences. When a driver says “I’m cold,” the assistant doesn’t need explicit numbers; it simply increases the cabin temperature. If the driver then adds “Only for me,” it applies the change to the driver’s zone only.
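The “Only for me” follow-up works because the assistant remembers its last action and refines it instead of starting over. The sketch below is a minimal illustration of that idea, not a production dialogue engine: the `ClimateAction` record, the zone names, the +2 degree step and the keyword matching (standing in for the LLM's interpretation) are all hypothetical assumptions.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class ClimateAction:
    # Hypothetical action record: which zones change and by how much.
    zones: tuple
    delta_c: float

ALL_ZONES = ("driver", "passenger", "rear")

class DialogueSession:
    """Keeps the last executed action so a follow-up can refine it."""

    def __init__(self) -> None:
        self.last_action: Optional[ClimateAction] = None

    def handle(self, utterance: str, speaker_zone: str) -> ClimateAction:
        text = utterance.lower().strip(" .!")
        if "cold" in text:
            # Assumption: "I'm cold" means +2 degrees in every zone.
            action = ClimateAction(zones=ALL_ZONES, delta_c=2.0)
        elif text == "only for me" and self.last_action is not None:
            # Follow-up: keep the previous change, narrow it to the speaker's zone.
            action = replace(self.last_action, zones=(speaker_zone,))
        else:
            raise ValueError(f"Not understood: {utterance!r}")
        self.last_action = action
        return action
```

In a real system the orchestrator would then re-apply the narrowed action, restoring the other zones.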

This flexibility means users no longer need to memorise command syntax. They can talk as they would to another person. LLMs maintain dialogue context across turns, enabling follow-up questions and compound requests. For example, “Find a good sushi restaurant on the way home and book a table for two” triggers a sequence of tasks: searching, filtering, reserving and setting navigation, all from one spoken request.
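Once the model has broken the sushi request into steps, something still has to run them in order and pass each result to the next. A minimal sketch, assuming the LLM has already returned the plan as a list of step names; the step functions, the restaurant data and the context keys are hypothetical placeholders rather than any real service API.

```python
from typing import Callable, Dict, List

Context = Dict[str, object]

# Hypothetical steps; real ones would call search, booking and navigation services.
def search(ctx: Context) -> None:
    ctx["candidates"] = [
        {"name": "Sushi A", "rating": 4.6, "on_route": True},
        {"name": "Sushi B", "rating": 4.8, "on_route": False},
        {"name": "Sushi C", "rating": 4.2, "on_route": True},
    ]

def filter_results(ctx: Context) -> None:
    # Keep places on the way home, then pick the best rated.
    on_route = [r for r in ctx["candidates"] if r["on_route"]]
    ctx["choice"] = max(on_route, key=lambda r: r["rating"])

def reserve(ctx: Context) -> None:
    ctx["booking"] = f"{ctx['choice']['name']} for {ctx['party_size']}"

def set_navigation(ctx: Context) -> None:
    ctx["destination"] = ctx["choice"]["name"]

STEPS: Dict[str, Callable[[Context], None]] = {
    "search": search, "filter": filter_results,
    "reserve": reserve, "navigate": set_navigation,
}

def run_plan(plan: List[str], ctx: Context) -> Context:
    # Execute steps in order; each step reads and extends the shared context.
    for step in plan:
        STEPS[step](ctx)
    return ctx

result = run_plan(["search", "filter", "reserve", "navigate"], {"party_size": 2})
print(result["booking"])  # Sushi A for 2
```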

Automakers are already adopting such systems.

Mercedes-Benz, for instance, has integrated ChatGPT into its in-car assistant to handle more conversational prompts, understand open-ended questions and provide detailed answers. The result is a new kind of human–machine partnership: one that listens, interprets and assists proactively rather than passively executing commands.

We use similar natural-language processing technologies in our AI and machine learning projects to build assistants that understand nuance and adapt over time, bringing truly intelligent interfaces into the driver’s seat.

Building the architecture: how touch and voice work together

Behind every intuitive multimodal experience is a layered architecture that orchestrates both input modes seamlessly.

The system starts with input and trigger detection. A wake word (such as “Hey BMW”) or a push-to-talk button signals the start of speech input, while the touch layer registers taps and gestures. Both channels feed into a central interaction manager that coordinates timing and context.
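One way to picture the interaction manager is a single time-ordered queue that both channels write into, so later stages see touch and voice events in the order they happened. This is a sketch under stated assumptions: the `InteractionManager` class, its method names and the event fields are hypothetical, and speech is simply ignored until a wake word or push-to-talk press opens a voice session.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List

@dataclass(order=True)
class InputEvent:
    timestamp_ms: int
    seq: int                                # tie-breaker for equal timestamps
    channel: str = field(compare=False)     # "voice" or "touch"
    payload: str = field(compare=False)

class InteractionManager:
    """Hypothetical coordinator that merges both channels into one ordered stream."""

    def __init__(self) -> None:
        self._queue: List[InputEvent] = []
        self._seq = itertools.count()
        self.listening = False

    def on_wake_word(self, t: int) -> None:
        # Also used for a push-to-talk press.
        self.listening = True
        self._push(t, "voice", "<start-of-speech>")

    def on_touch(self, t: int, gesture: str) -> None:
        self._push(t, "touch", gesture)

    def on_speech(self, t: int, text: str) -> None:
        # Speech only counts after a wake word or push-to-talk.
        if self.listening:
            self._push(t, "voice", text)

    def _push(self, t: int, channel: str, payload: str) -> None:
        heapq.heappush(self._queue, InputEvent(t, next(self._seq), channel, payload))

    def drain(self) -> List[InputEvent]:
        # Pop events oldest first, regardless of arrival order.
        out = []
        while self._queue:
            out.append(heapq.heappop(self._queue))
        return out
```

A heap keeps events ordered even when a slower channel delivers them late, which is common when speech recognition finishes after a touch that happened later.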

The speech-to-text (STT) engine transcribes spoken words into text with minimal latency. High-accuracy cloud models are often combined with smaller on-device engines that handle frequent commands offline.

Next comes Natural Language Understanding (NLU) or direct LLM processing, which extracts the user’s intent and relevant parameters. For example, “Set the cabin to a comfortable temperature and play jazz” produces two intents: adjusting climate and starting music playback.
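To show what the NLU stage hands downstream, here is a rule-based stand-in that turns the example sentence into two structured intents. A production system would use a trained NLU model or an LLM. The keyword rules, the intent and slot names, and the assumption that “comfortable” means 22 degrees are all illustrative.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Intent:
    name: str
    slots: Dict[str, str] = field(default_factory=dict)

# Assumption: the temperature used when the user says "comfortable".
COMFORT_TEMPERATURE_C = "22"

def extract_intents(utterance: str) -> List[Intent]:
    intents: List[Intent] = []
    # Split compound requests on commas and the word "and".
    for clause in re.split(r",|\band\b", utterance.lower()):
        clause = clause.strip()
        if "temperature" in clause or "cabin" in clause:
            m = re.search(r"(\d+(?:\.\d+)?)\s*degrees", clause)
            value = m.group(1) if m else COMFORT_TEMPERATURE_C
            intents.append(Intent("set_climate", {"temperature_c": value}))
        elif clause.startswith("play"):
            genre = clause[len("play"):].strip()
            intents.append(Intent("play_music", {"genre": genre}))
    return intents
```

For “Set the cabin to a comfortable temperature and play jazz” this yields a `set_climate` intent with `temperature_c` set to `"22"` and a `play_music` intent with `genre` set to `"jazz"`.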

The dialogue manager then uses session context to decide the best response, asking for clarification if needed. The orchestrator maps intents to specific system actions through APIs that interface with vehicle functions. Finally, the feedback layer confirms success through speech, visual animations or tactile feedback.
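The dialogue manager and orchestrator can be sketched as one decision step: merge the intent with session context, ask a clarifying question if a required parameter is still missing, and otherwise call the mapped vehicle function and return text for the feedback layer. The vehicle functions, the required-slot table and the question wording are hypothetical assumptions.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical vehicle-function APIs.
def set_temperature(zone: str, temperature_c: str) -> str:
    return f"{zone.capitalize()} temperature set to {temperature_c} degrees."

def start_playback(genre: str) -> str:
    return f"Playing {genre}."

# Intent name -> (API function, required slots).
ACTIONS: Dict[str, Tuple[Callable[..., str], List[str]]] = {
    "set_climate": (set_temperature, ["zone", "temperature_c"]),
    "play_music": (start_playback, ["genre"]),
}

CLARIFY = {
    "zone": "Which zone should I change?",
    "temperature_c": "What temperature would you like?",
    "genre": "What would you like to hear?",
}

def handle_intent(name: str, slots: Dict[str, str], session: Dict[str, str]) -> str:
    """Returns the text the feedback layer speaks and shows."""
    if name not in ACTIONS:
        return "Sorry, I can't do that yet."
    func, required = ACTIONS[name]
    # Fill gaps from session context before asking the user.
    merged = {**session, **slots}
    missing = [s for s in required if s not in merged]
    if missing:
        return CLARIFY[missing[0]]
    return func(**{s: merged[s] for s in required})
```

With an empty session, a bare “Set temperature to 22” produces “Which zone should I change?”; once the session knows the speaker is the driver, the same intent executes.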

A well-designed architecture uses a hybrid approach: fast on-device processing for routine actions and cloud intelligence for complex reasoning. That way, commands like “Turn on the wipers” work instantly even without connectivity, while broader queries, like “What’s the weather like along my route?”, benefit from cloud data.
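The hybrid split reduces to a routing decision per request. A minimal sketch, assuming a hypothetical fixed set of intents that the on-device model handles; a real system might also route on model confidence or request size.

```python
# Assumption: intents simple enough for the on-device model.
ON_DEVICE_INTENTS = {"wipers_on", "wipers_off", "set_climate", "volume_up"}

def route(intent: str, online: bool) -> str:
    """Decide which engine handles a request."""
    if intent in ON_DEVICE_INTENTS:
        return "on_device"         # fast path that works without connectivity
    if online:
        return "cloud"             # complex reasoning or live data
    return "offline_fallback"      # explain that this needs a connection
```

“Turn on the wipers” maps to `route("wipers_on", online=False)` and still runs locally, while a weather-along-route query goes to the cloud only when a connection is available.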

Managing context for smarter interaction

The most human aspect of any AI interface is its ability to understand context.

Context allows the system to interpret ambiguous language, remember recent exchanges and adapt responses to the situation.

There are several key layers of context:

- Session context keeps track of what has been said during the current conversation.

- User context remembers individual preferences such as seat position, climate settings and music style.

- Vehicle context includes state data – whether the car is in motion, which seats are occupied, or what mode it’s in.

- Environmental context accounts for external factors such as location, weather and time of day.

Together, they enable behaviour that feels natural. If the passenger says “I’m freezing,” the system increases the temperature on their side, activates the seat heater and replies, “I’ve raised the passenger temperature to 24 degrees and turned on your seat heater.”
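The four context layers and the “I’m freezing” example can be sketched together. This assumes the speaker’s seat is already known (for example from a microphone array), that the user profile stores a preferred warm-up step, and that the seat heater is added only when it is below 10 degrees outside. All three rules and every name below are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class SessionContext:          # what has been said in this conversation
    turns: List[str] = field(default_factory=list)

@dataclass
class UserContext:             # stored preferences of the speaker
    warm_up_step_c: float = 2.0

@dataclass
class VehicleContext:          # live vehicle state
    occupied_seats: List[str]
    zone_temps_c: Dict[str, float]
    seat_heaters: Dict[str, bool]

@dataclass
class EnvironmentContext:      # external conditions
    outside_temp_c: float

def handle_cold_complaint(utterance: str, seat: str, session: SessionContext,
                          user: UserContext, vehicle: VehicleContext,
                          env: EnvironmentContext) -> str:
    session.turns.append(utterance)
    if seat not in vehicle.occupied_seats:
        return "I couldn't tell which seat you're in."
    # Only the speaker's zone changes; the step comes from their profile.
    vehicle.zone_temps_c[seat] += user.warm_up_step_c
    reply = f"I've raised the {seat} temperature to {vehicle.zone_temps_c[seat]:g} degrees"
    # Illustrative rule: add the seat heater when it is cold outside.
    if env.outside_temp_c < 10:
        vehicle.seat_heaters[seat] = True
        reply += " and turned on your seat heater"
    return reply + "."
```

Starting from 22 degrees in both front zones on a 3-degree day, a passenger saying “I’m freezing” gets the reply from the text, and the driver’s zone is left untouched.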

Managing context requires careful balance. Retaining too much information can cause confusion or privacy risks, while too little breaks continuity. Best practice is to maintain short-term memory for dialogue and store long-term preferences locally in a secure, encrypted form.
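The short-term side of that balance can be sketched as an in-memory buffer bounded in both size and age, so old turns drop out on their own. The six-turn limit and two-minute expiry are illustrative assumptions, and the encrypted long-term preference store is not shown (it would typically rely on the platform's secure storage).

```python
import time
from collections import deque
from typing import Callable, Deque, List, Tuple

class ShortTermMemory:
    """Recent dialogue turns only: bounded in count and age, held in memory."""

    def __init__(self, max_turns: int = 6, ttl_s: float = 120.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._turns: Deque[Tuple[float, str]] = deque(maxlen=max_turns)
        self._ttl = ttl_s
        self._clock = clock

    def add(self, text: str) -> None:
        self._turns.append((self._clock(), text))

    def recent(self) -> List[str]:
        now = self._clock()
        # Drop turns older than the time-to-live.
        while self._turns and now - self._turns[0][0] > self._ttl:
            self._turns.popleft()
        return [text for _, text in self._turns]

    def clear(self) -> None:
        # For example, when the driver changes or the trip ends.
        self._turns.clear()
```

The injectable `clock` makes the expiry testable without waiting in real time.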

Performance and optimisation – why speed matters

No matter how sophisticated the AI, performance defines user satisfaction. In interactive systems, delays of more than two seconds feel slow and break the user’s train of thought.

To meet these expectations, engineers optimise every stage of the voice pipeline. Wake-word detection must respond in under 200 milliseconds. Speech-to-text conversion should finish within half a second. The overall round-trip, from command to confirmation, should stay below one and a half seconds for simple actions.
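The targets above can be written as a latency budget and checked for every interaction in test runs or telemetry. The stage names are hypothetical; the limits are the figures from the text, and the check flags any stage that exceeds its budget.

```python
from typing import Dict, List

# Budgets from the text, in milliseconds.
BUDGETS_MS: Dict[str, float] = {
    "wake_word": 200,
    "speech_to_text": 500,
    "round_trip": 1500,
}

def check_latency(measured_ms: Dict[str, float]) -> List[str]:
    """Return one message per stage that exceeded its budget."""
    return [
        f"{stage}: {measured_ms[stage]:.0f} ms > {limit:.0f} ms"
        for stage, limit in BUDGETS_MS.items()
        if stage in measured_ms and measured_ms[stage] > limit
    ]
```

For a run measured at 150 ms, 620 ms and 1400 ms, only the speech-to-text stage is reported.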

This is achieved through on-device inference for core commands, model optimisation (distillation, quantisation and pruning) to reduce compute load, and dynamic prioritisation that temporarily reallocates resources from background tasks to voice processing.
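Of the optimisation techniques listed, quantisation is the easiest to show in a few lines. The sketch below applies symmetric per-tensor int8 quantisation to a plain list of weights, so each weight is stored as an 8-bit integer plus one shared scale. Real deployments use the quantisation tooling of their inference framework; this only illustrates the arithmetic.

```python
from typing import List, Tuple

def quantise_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantisation: each weight is approximated as q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantise(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]
```

Each reconstructed weight is within half a quantisation step of the original, while storage drops from 32 bits to 8 bits per weight.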

Equally important is perceived responsiveness. Even when an action takes longer, immediate feedback such as a listening tone or short acknowledgement (“Working on that…”) reassures users that the system is active.
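Perceived responsiveness can be built into the response path itself: wait briefly for the real answer and speak an acknowledgement only if it is not ready in time. A sketch using `asyncio`, assuming a hypothetical `speak` callback and a 300 ms acknowledgement threshold; `asyncio.shield` keeps the underlying task running when the short wait times out.

```python
import asyncio
from typing import Awaitable, Callable, List

async def respond_with_ack(action: Awaitable[str], speak: Callable[[str], None],
                           ack_after_s: float = 0.3) -> str:
    """Speak a short acknowledgement if the real answer is slow."""
    task = asyncio.ensure_future(action)
    try:
        # shield() stops the timeout from cancelling the underlying task.
        result = await asyncio.wait_for(asyncio.shield(task), timeout=ack_after_s)
    except asyncio.TimeoutError:
        speak("Working on that…")
        result = await task
    speak(result)
    return result

async def slow_lookup() -> str:
    # Stand-in for a cloud query that takes half a second.
    await asyncio.sleep(0.5)
    return "Light rain along your route."

spoken: List[str] = []
asyncio.run(respond_with_ack(slow_lookup(), spoken.append))
print(spoken)  # ['Working on that…', 'Light rain along your route.']
```

Fast local actions finish inside the threshold and get only their confirmation, so users don't hear a redundant “Working on that…”.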

After deployment, continuous monitoring ensures performance doesn’t degrade. Engineers track metrics such as intent accuracy, latency and error recovery rates. These insights feed back into retraining cycles, forming an ongoing improvement loop, a process similar to how we manage model optimisation in our AI deployments.
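The three metrics named above can be computed from an interaction log. A sketch assuming a hypothetical log format in which the true intent comes from annotation or user correction, and “recovered” means the user still completed the task after an error; latency is reported as a nearest-rank 95th percentile.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Interaction:
    predicted_intent: str
    true_intent: str      # from annotation or user correction
    latency_ms: float
    had_error: bool       # misrecognition or failed action
    recovered: bool       # task still completed afterwards

def summarise(log: List[Interaction]) -> Dict[str, float]:
    if not log:
        raise ValueError("empty log")
    n = len(log)
    correct = sum(i.predicted_intent == i.true_intent for i in log)
    latencies = sorted(i.latency_ms for i in log)
    # Nearest-rank 95th percentile.
    p95 = latencies[max(0, math.ceil(0.95 * n) - 1)]
    errors = [i for i in log if i.had_error]
    recovery = sum(i.recovered for i in errors) / len(errors) if errors else 1.0
    return {"intent_accuracy": correct / n, "p95_latency_ms": p95,
            "error_recovery_rate": recovery}
```

Tracking these per software release makes a regression visible before it shows up in user complaints.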

Integration of speech and language models

Integrating voice and language models within the HMI pipeline ensures that speech flows smoothly into action. The chain runs from wake-word detection to speech transcription, language understanding, intent mapping and API execution. Each link must use well-defined interfaces so individual modules can evolve independently.
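Well-defined interfaces between the links can be expressed as structural types, so any wake-word engine, recogniser or parser that matches the interface can be swapped in. A sketch using `typing.Protocol`; the interface and method names are assumptions, and the `Fake…` classes are trivial stand-ins used only to show the wiring.

```python
from typing import Dict, Protocol

class WakeWordDetector(Protocol):
    def detected(self, audio: bytes) -> bool: ...

class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...

class IntentParser(Protocol):
    def parse(self, text: str) -> Dict[str, str]: ...

class ActionExecutor(Protocol):
    def execute(self, intent: Dict[str, str]) -> str: ...

def run_pipeline(audio: bytes, wake: WakeWordDetector, stt: SpeechToText,
                 nlu: IntentParser, actions: ActionExecutor) -> str:
    # Each stage sees only the interface, never a concrete engine.
    if not wake.detected(audio):
        return ""
    return actions.execute(nlu.parse(stt.transcribe(audio)))

# Stand-in implementations for illustration only.
class FakeWake:
    def detected(self, audio: bytes) -> bool:
        return audio.startswith(b"hey ")

class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio[len(b"hey "):].decode()

class FakeNLU:
    def parse(self, text: str) -> Dict[str, str]:
        return {"intent": "set_climate", "temperature_c": text.split()[-1]}

class FakeActions:
    def execute(self, intent: Dict[str, str]) -> str:
        return f"Temperature set to {intent['temperature_c']} degrees."
```

Because the classes match structurally, replacing `FakeSTT` with an on-device or cloud recogniser does not require changes to the other stages.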

Testing under realistic driving conditions is critical. Cars are challenging acoustic environments: engine vibration, wind and passenger chatter can interfere with recognition. Engineers must tune microphone arrays, apply noise suppression and test across a range of accents and languages.

Equally important is synchronisation between modalities. When the user says “Increase temperature,” the visual UI should update instantly, showing confirmation. If an instruction is unsafe or not permitted, the assistant should explain why rather than fail silently. Transparent, human-like communication builds trust and encourages continued use.
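Keeping modalities in sync is easiest when voice and touch change the same state object, and the display subscribes to it instead of to either input channel. A sketch with a hypothetical `ClimateState` class; the rule that video playback is refused while the car is moving is an illustrative example of a blocked action that is explained rather than silently ignored.

```python
from typing import Callable, Dict, List

class ClimateState:
    """Single source of truth shared by the voice and touch front ends."""

    def __init__(self, temperature_c: float) -> None:
        self.temperature_c = temperature_c
        self._listeners: List[Callable[[float], None]] = []

    def subscribe(self, listener: Callable[[float], None]) -> None:
        self._listeners.append(listener)

    def set_temperature(self, value: float) -> None:
        self.temperature_c = value
        # The UI redraws no matter which channel made the change.
        for notify in self._listeners:
            notify(value)

def voice_command(state: ClimateState, command: str, vehicle: Dict[str, bool]) -> str:
    if command == "increase temperature":
        state.set_temperature(state.temperature_c + 1)
        return f"Temperature is now {state.temperature_c:g} degrees."
    if command == "play video":
        if vehicle["moving"]:
            # Explain the refusal instead of failing silently.
            return "I can't play video while the car is moving."
        return "Playing video."
    return "Sorry, I didn't catch that."
```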

Benefits of multimodal interaction

For users

Multimodal interfaces deliver freedom of choice. Drivers can control the car hands-free when attention is critical, or use touch when precision matters. This duality makes interaction both safer and more engaging.

Accessibility improves too. Voice helps users with limited mobility or visual impairments, while touch supports those who prefer not to speak or are in noisy environments. The system adapts to the person and the context, not the other way around.

Personalisation further enhances comfort. The assistant can recognise who is driving, recall saved profiles, or suggest routes and music based on routine patterns. Continuous updates add new features, ensuring the experience keeps evolving throughout the vehicle’s lifetime.

For manufacturers

For OEMs, multimodal HMI is both a differentiator and a strategic asset. It strengthens brand perception as forward-thinking and customer-centric, while providing tangible benefits such as improved user satisfaction and reduced support demand.

Usage data, when collected ethically and with consent, offers powerful insight into how people use in-car systems. It helps identify underused features, refine interfaces and design future functions more efficiently.

At the same time, advanced HMIs open up new business opportunities – from voice-activated services and infotainment subscriptions to seamless integration with connected ecosystems.

Privacy, security and compliance

Because voice assistants continuously listen for activation cues, privacy is paramount. Users must know when data is collected, what is stored and how it is protected.

A responsible system processes as much as possible on-device and sends only the minimum data required to the cloud, always over encrypted channels. Personal identifiers are removed or replaced with tokens so that data reaching the cloud cannot be linked directly to an individual.
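Tokenisation can be done with a keyed hash: the same identifier always maps to the same token, so the cloud can group requests from one vehicle, but it cannot recover the identifier without the key. A sketch using Python’s standard `hmac` module, assuming the key never leaves the vehicle and using the VIN as the example identifier; note that this is pseudonymisation, not full anonymisation.

```python
import hashlib
import hmac
import re
from typing import Dict

# VINs are 17 characters and never contain I, O or Q.
VIN_PATTERN = re.compile(r"\b[A-HJ-NPR-Z0-9]{17}\b")

def tokenise(value: str, key: bytes) -> str:
    """Keyed, one-way token (HMAC-SHA256) for a personal identifier."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]

def prepare_cloud_payload(transcript: str, vin: str, key: bytes) -> Dict[str, str]:
    # Send only what the cloud needs: scrubbed text plus a pseudonymous vehicle token.
    return {"text": VIN_PATTERN.sub("[VIN]", transcript), "vehicle": tokenise(vin, key)}
```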

Visual indicators, such as microphone icons or status lights, should make it clear when the system is listening. Sensitive actions like purchases, account access or factory resets require explicit confirmation.
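Explicit confirmation can be enforced by holding sensitive actions in a pending state until the user answers. A sketch with a hypothetical `ConfirmationGate` class; the action names and accepted answers are assumptions, and anything other than an explicit “yes” or “confirm” cancels.

```python
from typing import Optional

SENSITIVE_ACTIONS = {"purchase", "account_access", "factory_reset"}

class ConfirmationGate:
    """Holds sensitive actions until the user explicitly confirms them."""

    def __init__(self) -> None:
        self.pending: Optional[str] = None

    def request(self, action: str) -> str:
        label = action.replace("_", " ")
        if action in SENSITIVE_ACTIONS:
            self.pending = action
            return f"Please confirm: {label}?"
        return f"Done: {label}."

    def reply(self, answer: str) -> str:
        action, self.pending = self.pending, None
        if action is None:
            return "There is nothing to confirm."
        if answer.strip().lower() in {"yes", "confirm"}:
            return f"Done: {action.replace('_', ' ')}."
        # Anything other than an explicit yes cancels.
        return "Cancelled."
```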

Compliance with data-protection frameworks such as GDPR is mandatory, but true trust comes from transparency. Allowing users to view or delete their data at any time demonstrates respect for privacy and reinforces long-term loyalty.

Avoiding common pitfalls

Even well-intentioned projects can fail if critical details are overlooked. Relying entirely on cloud connectivity leads to downtime in areas with poor coverage. Ignoring diverse accents or real-world noise results in recognition failures. And collecting personal data without clear consent risks damaging user trust and incurring regulatory penalties.

Successful systems anticipate these issues. They maintain offline fallback capabilities, provide consistent feedback and handle misunderstandings gracefully. When uncertain, it’s better to ask “Did you mean…?” than to execute the wrong action.
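The “ask rather than guess” rule usually comes down to confidence thresholds on the recogniser’s best hypothesis. A sketch assuming the NLU returns (intent, confidence) pairs; the 0.8 and 0.4 thresholds are illustrative and would be tuned on real data. Below the lower threshold, the reply points the user back to touch input.

```python
from typing import List, Tuple

def decide(candidates: List[Tuple[str, float]], execute_at: float = 0.8,
           ask_at: float = 0.4) -> str:
    """candidates: (intent, confidence) pairs from the recogniser or NLU."""
    if not candidates:
        return "Sorry, I didn't catch that."
    intent, confidence = max(candidates, key=lambda c: c[1])
    if confidence >= execute_at:
        return f"EXECUTE {intent}"
    if confidence >= ask_at:
        # Medium confidence: confirm instead of guessing.
        return f"Did you mean {intent.replace('_', ' ')}?"
    return "Sorry, I didn't catch that. You can also use the touchscreen."
```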

Equally, users should always have the option to revert to touch input. Voice should enhance usability, not replace established controls.

Case study: Wavey

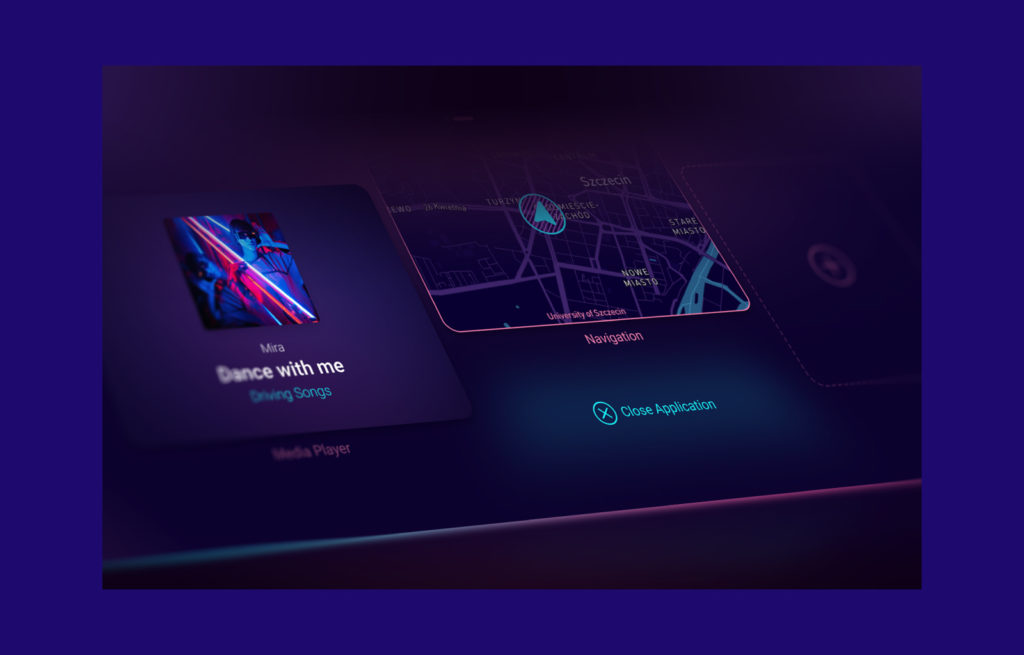

Our Wavey prototype demonstrates how voice and touch can coexist in a single automotive environment. Built on a Qt-based microHMI platform, it integrates AI-driven voice control with responsive touch interfaces for climate, navigation and media.

Users can issue commands such as “I’m too hot” or “Play some jazz,” and the system responds instantly while updating the display. Early user tests showed faster task completion and higher satisfaction compared with touch-only interfaces. Participants described the assistant as “natural”, “human-like” and “less distracting”.

The proof of concept also highlighted valuable lessons. Cabin noise and strong accents required acoustic tuning and additional training data. Cloud latency prompted the integration of a hybrid model with local fallback. And subtle UI feedback, like visual confirmations and brief spoken acknowledgements, proved crucial for user confidence.

Wavey validated the potential of multimodal HMI: intuitive, efficient and adaptable. It also reinforced the importance of multidisciplinary collaboration between AI engineers, UX designers and automotive specialists, a hallmark of our development approach.

Where we go next

Multimodal HMI is redefining how people interact with technology. By merging touch and voice, supported by AI, it delivers experiences that are not only convenient but also human-centred and safe.

Touch provides precision and visual control; voice adds speed and natural conversation. Together, they make complex systems simpler to use. For developers, the path forward is clear: start with a few high-impact scenarios, pilot them in real-world conditions, monitor results, and expand step by step.

Equally important is transparency around privacy, continuous optimisation and an iterative design process that keeps the user at the centre. When these elements come together, technology becomes invisible, a silent partner that listens, understands and acts intuitively.

Multimodal interaction isn’t just the future of vehicle UX. It’s already here, and it’s setting the pace for how people will engage with intelligent systems across industries.
