MCP vs A2A vs LangChain Agent Protocol: How they shape agent interoperability

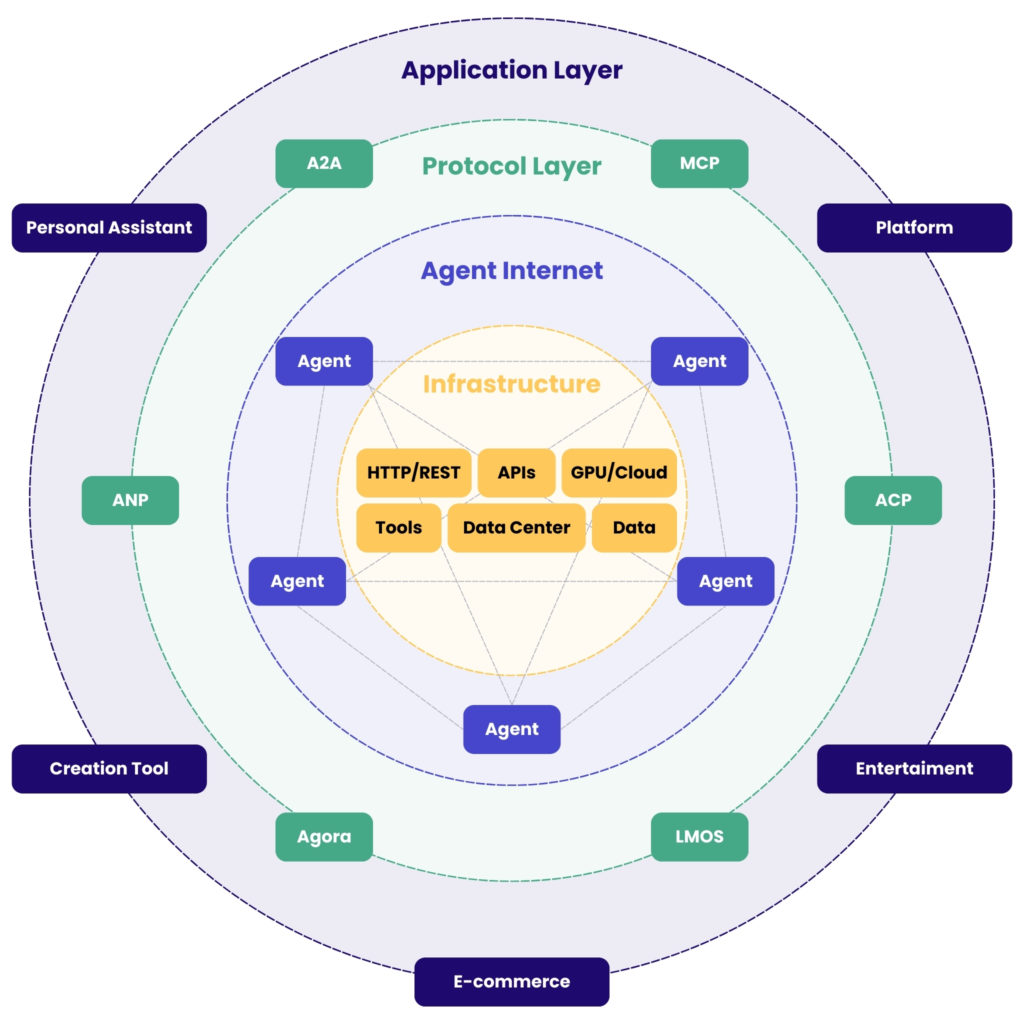

AI agents are rapidly evolving from isolated tools into active participants in enterprise workflows. As organisations deploy more agents – each specialised in tasks like analysis, routing, compliance, or communication – the need for interoperability becomes critical.

Three open protocols currently stand out in this landscape:

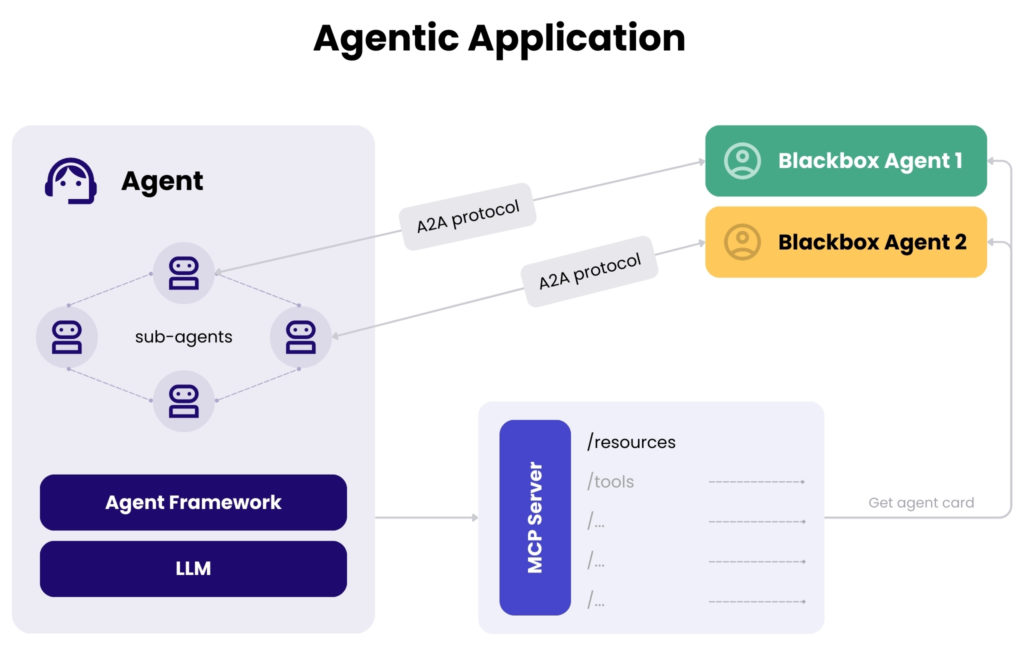

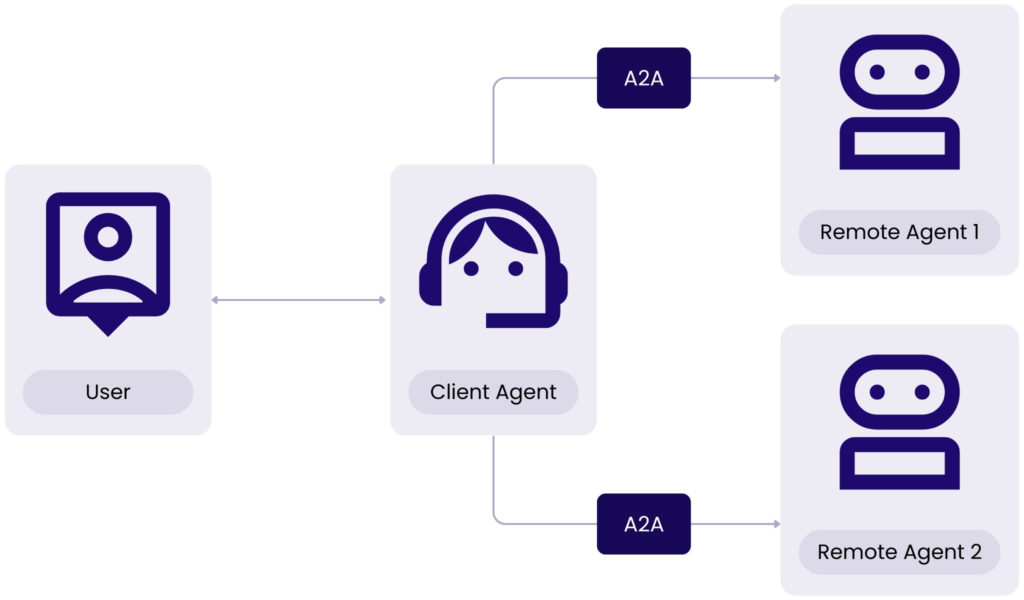

- Google Agent2Agent (A2A): A2A allows AI agents to communicate, delegate tasks, and collaborate directly with each other across systems.

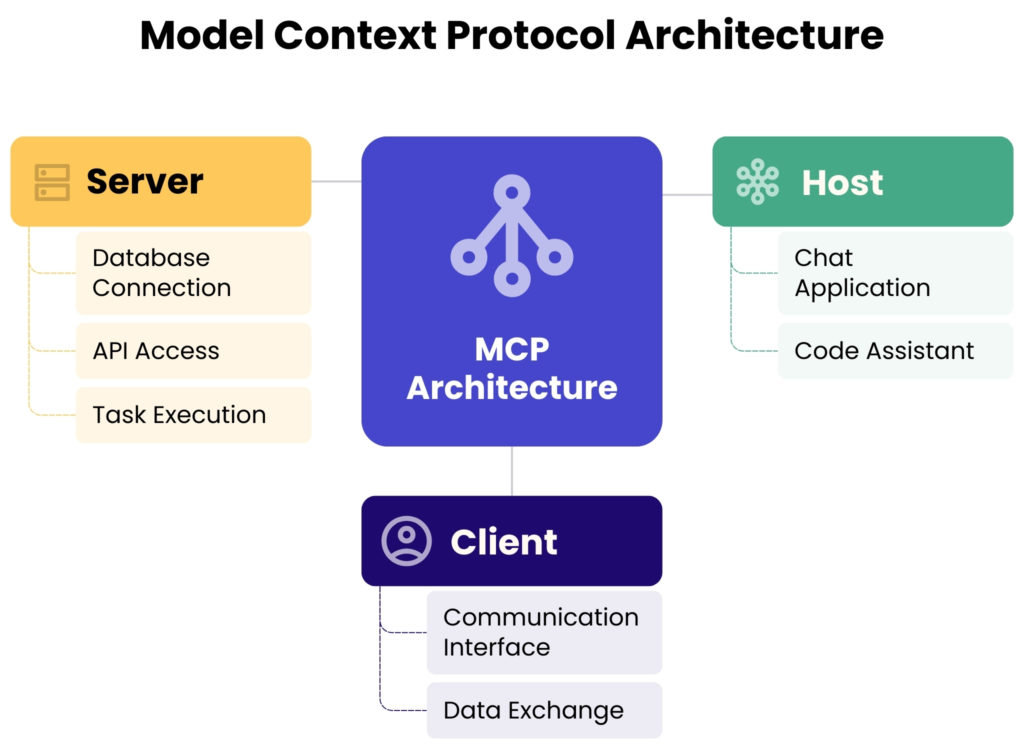

- Anthropic Model Context Protocol (MCP): MCP standardises how AI agents access external tools, applications, and enterprise data.

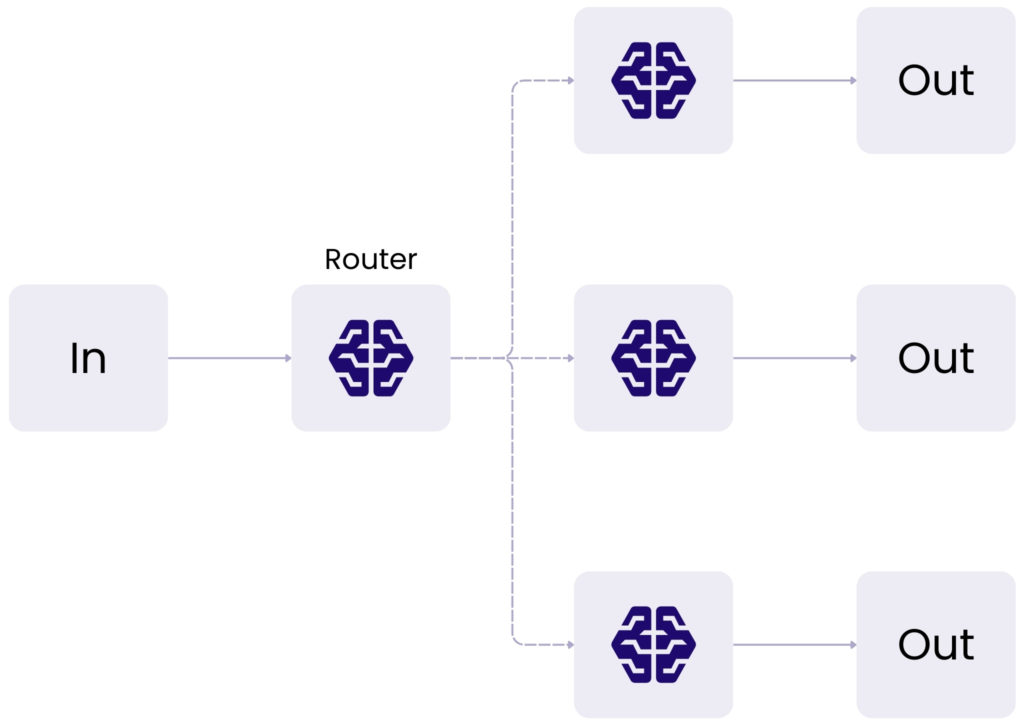

- LangChain Agent Protocol: LangChain’s Agent Protocol defines a unified REST API for deploying and invoking AI agents as services.

Why each protocol exists

Why A2A exists: Agent-to-agent collaboration

As companies move toward multi-agent systems, agents must be able to talk to one another, share information, and pass work along. A2A provides the communication fabric that makes this possible.

It uses standard web technologies such as HTTP and JSON-RPC to enable task delegation, capability discovery, and real-time status updates, so agents can coordinate without custom integration work for every pairing.

Think of it as a messaging layer for AI agents.
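To make the "HTTP and JSON-RPC" point concrete, here is a minimal sketch of how a delegation message might be wrapped in a JSON-RPC 2.0 envelope. The helper `build_a2a_request`, the `message/send` method name, and the exact field names inside `params` are assumptions for illustration; check them against the A2A specification version you target.

```python
import json
import uuid


def build_a2a_request(method: str, text: str, request_id: int = 1) -> dict:
    """Wrap a plain-text message in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": method,  # assumed method name; verify against the A2A spec
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                # Field names below are illustrative assumptions.
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }


request = build_a2a_request("message/send", "Summarise Q3 pipeline risks")
payload = json.dumps(request)  # the body a client would POST over HTTP
```

The envelope itself (`jsonrpc`, `id`, `method`, `params`) is plain JSON-RPC 2.0, which is why any HTTP client can act as an A2A caller once the message schema is agreed.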

Why MCP exists: Agent-to-data integration

Large language models do not naturally have access to enterprise systems.

MCP solves this by acting as a universal adapter, allowing an agent to pull data, read files, access databases, or execute actions using a consistent schema.

With MCP, any tool can expose its functionality through a simple JSON-RPC interface, and any agent can use that interface without bespoke integration code. Because every tool is described and called in the same way, access can be reviewed and controlled in one place rather than per integration.

Think of MCP as giving agents eyes and hands inside your organisation.
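The idea of a tool exposed through a JSON-RPC interface can be sketched as a tiny in-process dispatcher. This is not the official MCP SDK: the registry, the `lookup_customer` tool, and the CRM record are hypothetical, and a real server also handles initialisation and a transport. The `tools/list` and `tools/call` method names and the result shapes follow the MCP specification as we understand it.

```python
from typing import Callable

# Hypothetical in-memory registry: tool name -> (description, input schema, handler).
TOOLS: dict = {}

# Made-up CRM record used only for this illustration.
FAKE_CRM = {"C-001": "Acme Ltd, tier: gold"}


def register_tool(name: str, description: str, schema: dict,
                  handler: Callable[..., str]) -> None:
    TOOLS[name] = (description, schema, handler)


def error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Dispatch a JSON-RPC request to the matching tool operation."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": d, "inputSchema": s}
                 for n, (d, s, _) in TOOLS.items()]
        result = {"tools": tools}
    elif method == "tools/call":
        if params.get("name") not in TOOLS:
            return error(request_id, -32602, "Unknown tool")
        handler = TOOLS[params["name"]][2]
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return error(request_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


register_tool(
    "lookup_customer",
    "Return a one-line summary for a customer ID.",
    {"type": "object",
     "properties": {"customer_id": {"type": "string"}},
     "required": ["customer_id"]},
    lambda customer_id: FAKE_CRM.get(customer_id, "not found"),
)

response = handle_request({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"customer_id": "C-001"}},
})
```

The agent never needs to know how `lookup_customer` is implemented; it discovers the tool through `tools/list` and calls it through the same envelope it uses for every other tool.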

Why Agent Protocol exists: Agent-as-a-service deployment

Enterprises need a predictable way to run and manage agents in production environments. LangChain’s Agent Protocol standardises how agents receive tasks, stream results, persist state, and expose metadata about their capabilities.

It also introduces concepts such as threads, runs, and memory that make multi-step interactions more robust. This protocol turns an agent into a reliable, API-driven service that can be invoked by any system: frontend, backend, or another agent.

Think of it as the operational wrapper that makes any agent “plug-and-play.”
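The relationship between threads and runs can be illustrated with a small in-memory model. This is a conceptual sketch, not the protocol's REST API: `AgentService`, `counting_agent`, and the status strings are hypothetical stand-ins, and a real deployment would expose these operations over HTTP endpoints.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Run:
    run_id: str
    input: dict
    status: str = "pending"          # illustrative status values
    output: Optional[dict] = None


@dataclass
class Thread:
    thread_id: str
    state: dict = field(default_factory=dict)  # persists across runs
    runs: list = field(default_factory=list)


class AgentService:
    """Hypothetical wrapper that runs an agent function inside threads."""

    def __init__(self, agent: Callable[[dict, dict], dict]) -> None:
        self.agent = agent
        self.threads: dict = {}

    def create_thread(self) -> Thread:
        thread = Thread(thread_id=str(uuid.uuid4()))
        self.threads[thread.thread_id] = thread
        return thread

    def create_run(self, thread_id: str, input: dict) -> Run:
        thread = self.threads[thread_id]
        run = Run(run_id=str(uuid.uuid4()), input=input)
        thread.runs.append(run)
        # The agent reads and updates the thread state on every run.
        run.output = self.agent(input, thread.state)
        run.status = "success"
        return run


def counting_agent(input: dict, state: dict) -> dict:
    """Toy agent: remembers how many turns the thread has seen."""
    state["turns"] = state.get("turns", 0) + 1
    return {"reply": f"turn {state['turns']}: {input['text']}"}


service = AgentService(counting_agent)
thread = service.create_thread()
first = service.create_run(thread.thread_id, {"text": "hello"})
second = service.create_run(thread.thread_id, {"text": "and again"})
```

The second run sees the state left by the first because both belong to the same thread, which is what makes multi-step interactions possible without the caller resending history.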

Core differences explained simply

| Protocol | Solves this problem | Best for | Simple analogy |
| --- | --- | --- | --- |
| A2A | Agents talking to other agents | Multi-agent collaboration workflows | Slack for agents |
| MCP | Agents accessing tools + data | Enterprise integrations | USB-C for external systems |
| Agent Protocol | Agents being deployed as services | API-driven agent access | API spec for agents |

When to use which (the rule of three)

A2A is the right choice when multiple agents need to collaborate or when tasks must move fluidly between them.

It supports role-based delegation, for example, when a planning agent engages a research agent or when a sales agent hands over work to a marketing agent.

MCP becomes essential when an agent must interact with internal systems such as CRMs, databases, cloud storage, or operational logs. Instead of building a custom integration for each system, teams expose each one through the same MCP interface, so any MCP-compatible agent can discover and call it in the same way.

Agent Protocol is ideal when agents must be invoked as services. Applications, orchestrators, and other agents can call them in a predictable, standardised manner using well-defined endpoints. This reduces operational complexity and makes production deployments more maintainable.

How they fit together in a real enterprise

A robust AI agent architecture will often use all three:

- MCP for tool and data access

- A2A for agent-to-agent task coordination

- Agent Protocol to expose each agent as a standardised service

A simple workflow example:

- User requests a report from an analytics agent.

- The analytics agent uses MCP to fetch up-to-date CRM and financial data.

- It uses A2A to delegate visualisation tasks to a chart-generation agent.

- Each agent is deployed via Agent Protocol, so the UI can call them consistently.

- Results are streamed back to the user.

This combination gives enterprises flexibility, reusability, and a future-proof architecture.
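The workflow above can be sketched end to end with stand-in functions for each protocol layer. Nothing here performs real network calls: `fetch_via_mcp`, `delegate_via_a2a`, `invoke_agent`, and all the data values are hypothetical placeholders that only show where each protocol sits in the flow.

```python
def fetch_via_mcp(source: str) -> dict:
    """Stand-in for an MCP tool call returning enterprise data (made-up values)."""
    data = {"crm": {"open_deals": 12}, "finance": {"revenue_q3": 1_250_000}}
    return data[source]


def delegate_via_a2a(agent_name: str, task: dict) -> dict:
    """Stand-in for handing a task to a peer agent over A2A."""
    series_names = ", ".join(sorted(task["series"]))
    return {"agent": agent_name, "artifact": f"chart({series_names})"}


def analytics_agent(request: str) -> dict:
    """Analytics agent: gathers data, delegates charting, assembles a report."""
    series = {**fetch_via_mcp("crm"), **fetch_via_mcp("finance")}
    chart = delegate_via_a2a("chart-generator", {"series": series})
    return {"request": request, "data": series, "chart": chart["artifact"]}


def invoke_agent(agent, payload: str):
    """Stand-in for the service endpoint; yields the result as a stream of events."""
    report = agent(payload)
    for key, value in report.items():
        yield {"event": key, "data": value}


events = list(invoke_agent(analytics_agent, "Q3 sales report"))
```

Each stand-in can be replaced independently: swapping the data source touches only the MCP layer, adding a new specialist agent touches only the A2A layer, and the caller keeps using the same service entry point.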

Case study: from Figma design to application skeleton

A real-world example of this architecture comes from using MCP to automate the transformation of UI designs into runnable code.

Through an MCP-enabled integration with Figma, an agent can access a project URL and automatically interpret screens, components, and visual styles. It reads the structure of the design, including color palettes, layout hierarchies, and reusable patterns, and then generates complete QML components. These include a singleton defining the project’s color scheme and individual files for each detected control.
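As an illustration of the code-generation step, the sketch below renders a QML colour singleton from an extracted palette. It is not the tool described here: `render_color_singleton` and the palette values are hypothetical, and a real pipeline would take the palette from the design data returned through MCP.

```python
def render_color_singleton(palette: dict) -> str:
    """Render a QML singleton exposing each palette entry as a colour property."""
    lines = ["pragma Singleton", "import QtQuick", "", "QtObject {"]
    for name, hex_value in sorted(palette.items()):
        lines.append(f'    readonly property color {name}: "{hex_value}"')
    lines.append("}")
    return "\n".join(lines) + "\n"


# Hypothetical palette, standing in for colours detected in the design.
palette = {"primary": "#1A73E8", "background": "#FFFFFF", "accent": "#FBBC04"}
qml = render_color_singleton(palette)
```

For the generated file to be usable as a singleton, it also has to be registered with the QML module, for example through a `singleton` entry in the module's `qmldir` file.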

The result is an end-to-end workflow where a graphical design becomes a ready-to-use application skeleton enriched with a suggested main QML file and configuration guidance. Thanks to MCP, the entire process can be executed through a single command, allowing multi-step tasks, such as design analysis, component extraction, and code generation, to unfold seamlessly.

This significantly accelerates UI development and reduces the manual work typically required to convert designs into functional code.

Summary: three complementary building blocks

A2A, MCP, and LangChain’s Agent Protocol each address a different part of the agent ecosystem. A2A ensures that agents can communicate and collaborate. MCP gives them access to tools and enterprise data. Agent Protocol provides a consistent API to deploy, manage, and interact with agents in production environments.

Rather than competing, these standards work best when combined. Together, they form a strong foundation for building scalable, interoperable, and future-proof AI systems that can operate reliably within enterprise constraints.
