Mastering the skies: unveiling the software core of drones

From filmmaking through deliveries to helping in emergencies, drones are becoming increasingly popular in day-to-day jobs and activities. The demand for specialised devices increases as the UAV (unmanned aerial vehicle) market grows rapidly and finds applications in more and more industries. As a result, an intuitive and user-friendly human-machine interface (HMI) is becoming increasingly important. Let’s look at how a drone’s software is designed to ensure smooth communication between the device and its operator. In this article, I will introduce the types of drone applications and the essential elements needed to create a functional drone system.

What is a human-machine interface in a drone?

A human-machine interface (HMI) is a system that enables interaction between a user and a device. In the context of drones, HMI plays a crucial role in bridging the gap between human operators and the complex systems that govern drone functionality.

The HMI translates the user’s control inputs into signals the drone’s flight controller can understand and act upon, while also presenting the drone’s status and sensor data to the user in an understandable way. The significance of HMIs in drones lies in their ability to enhance user experience, improve control precision, and contribute to overall operational safety and efficiency. To make this possible, the drone must carry all the essential onboard components, and a reliable communication link must exist between the drone and the HMI.

Types of drones, their purpose and use in various industries

Let’s start with a short overview of drone applications in different industries.

• Consumer drones – mostly small devices used for video recording, photography or hobby flying.

• Commercial drones – medium-sized drones with enhanced features used for filmmaking, property surveying (real estate), crop monitoring (agriculture), and others.

• Industrial drones – robust, specialised devices used for infrastructure inspection (bridges, pipelines), environmental monitoring, or construction site surveying.

• Military drones – ranging from small reconnaissance UAVs to large, armed drones used for surveillance or combat missions.

• Delivery drones – agile drones used for food or package delivery.

• Medical drones – designed for transporting medical supplies, used to rapidly deliver medicines and medical equipment to remote or inaccessible areas.

• Search and rescue drones – drones equipped with advanced sensors and cameras to locate missing persons or survey disaster-stricken areas.

It’s worth noting that there are numerous other applications for drones beyond those mentioned above. However, the general classification into different types of drones allows us to appreciate their versatility and the fact that they share common functionalities. These functionalities include route and waypoint planning, camera surveillance, and access to basic information such as altitude or speed.

Furthermore, more advanced features and applications are available only in specific drone models. For instance, some drones are equipped with thermal imaging, enabling observation in conditions of low or no visibility. Others may utilise echolocation or LiDAR for precise distance measurement and object identification.

In addition, some drone models allow for package delivery, which finds application in logistics. There are also drones capable of grasping objects, which can be useful, for example, in rescue operations.

If you want to explore the use of drones in business further, read the piece “Commercial drones: trends, challenges, solutions. An interview with Marieta Weglicka and Piotr Wierzba”.

An overview of essential drone system components

All of these drone types share similar basic functionalities, and the rest of this article describes how to implement them so that they work properly. The HMI must be easily configurable and able to handle different sensors and cameras. As for control, the drone should respond to user commands in real time but also offer an autopilot option.

Below, I have described the elements of building a basic drone system, with the possibility of further project development in any direction.

Ground control station (GCS)

In drone technology, a human-machine interface is often embodied in an application that functions as a ground control station (GCS). This application can be a mobile app or a system that runs on dedicated hardware, serving as the nerve centre for drone operations.

The GCS serves as a hub for planning flight routes and automating drone operations. It is essential for overseeing and managing the drone’s mission. Users can pre-program routes, set waypoints, and define mission parameters, allowing drones to execute tasks with precision. This level of automation enhances efficiency, particularly in industrial and professional settings.
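To make route planning more concrete, here is a minimal sketch of how a GCS might represent a pre-programmed route and estimate its length and flight time. The `Waypoint` class, the function names and the constant cruise speed are assumptions made for illustration; they do not come from QGroundControl or any other specific GCS.

```python
import math
from dataclasses import dataclass
from typing import Sequence

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius used by the haversine formula


@dataclass(frozen=True)
class Waypoint:
    """Hypothetical waypoint: position plus altitude above the take-off point."""
    lat_deg: float
    lon_deg: float
    alt_m: float


def ground_distance_m(a: Waypoint, b: Waypoint) -> float:
    """Great-circle (haversine) distance between two waypoints, ignoring altitude."""
    lat1, lat2 = math.radians(a.lat_deg), math.radians(b.lat_deg)
    dlat = lat2 - lat1
    dlon = math.radians(b.lon_deg - a.lon_deg)
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def route_length_m(route: Sequence[Waypoint]) -> float:
    """Sum of the leg lengths between consecutive waypoints."""
    return sum(ground_distance_m(a, b) for a, b in zip(route, route[1:]))


def estimated_flight_time_s(route: Sequence[Waypoint], cruise_speed_m_s: float) -> float:
    """Rough flight time, assuming a constant cruise speed along the whole route."""
    if cruise_speed_m_s <= 0:
        raise ValueError("cruise speed must be positive")
    return route_length_m(route) / cruise_speed_m_s
```

A real mission plan would also carry per-waypoint actions (take-off, loiter, land) and account for climbs, wind and battery limits; this sketch only covers the horizontal geometry.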

Of course, not everything can be planned and automated, so manual control remains a critical aspect of drone operations. The GCS must be seamlessly integrated with peripheral or integrated controllers, ensuring the operator can take manual control when needed. The controller itself can take various forms. It might be a standalone physical joystick or a control panel inside a mobile application, allowing users to pilot the drone using a touchscreen interface. It is also possible to control a drone through gestures or body movement, and this solution is popular mainly among POV drones. This diversity accommodates different user preferences and operational requirements.
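Whatever form the controller takes, its raw input has to be converted into values the drone understands. The sketch below assumes stick positions arrive as floats between -1 and 1 and scales them to the [-1000, 1000] range that the MAVLink `MANUAL_CONTROL` message uses for its `x`, `y`, `z` and `r` axes. The function names and the dead zone value are hypothetical.

```python
def apply_deadzone(value: float, deadzone: float = 0.05) -> float:
    """Ignore small stick movements around the centre and rescale the rest to [-1, 1]."""
    value = max(-1.0, min(1.0, value))  # clamp out-of-range input
    if abs(value) <= deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def to_axis(value: float) -> int:
    """Scale a stick position in [-1, 1] to an integer in [-1000, 1000]."""
    return round(apply_deadzone(value) * 1000)


def manual_control_axes(pitch: float, roll: float, thrust: float, yaw: float) -> dict:
    """Map stick positions to MANUAL_CONTROL-style axes (x: pitch, y: roll, z: thrust, r: yaw)."""
    return {
        "x": to_axis(pitch),
        "y": to_axis(roll),
        "z": to_axis(thrust),
        "r": to_axis(yaw),
    }
```

The dead zone keeps the drone from drifting when a stick does not return exactly to its centre. How the thrust axis is interpreted can vary between autopilots, so the mapping should be checked against the target firmware.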

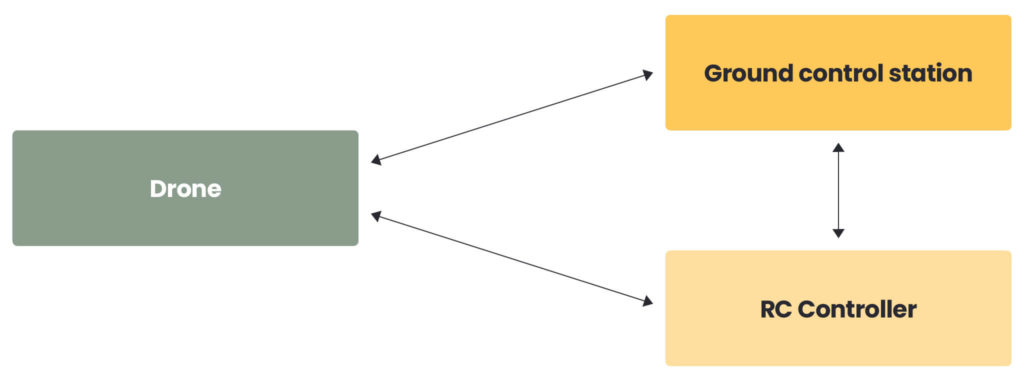

The diagram below shows a general architecture for the basic drone system that includes only the elements mentioned above. The diagram will be extended in the next parts of the article.

Depending on the requirements, the GCS can be made available on dedicated hardware designed solely for interfacing with the drone. However, a solution like this requires designing such a device, and the user must have continuous access to it to operate the drone. If the device is damaged, operating the drone becomes impossible.

Ground control station as an application

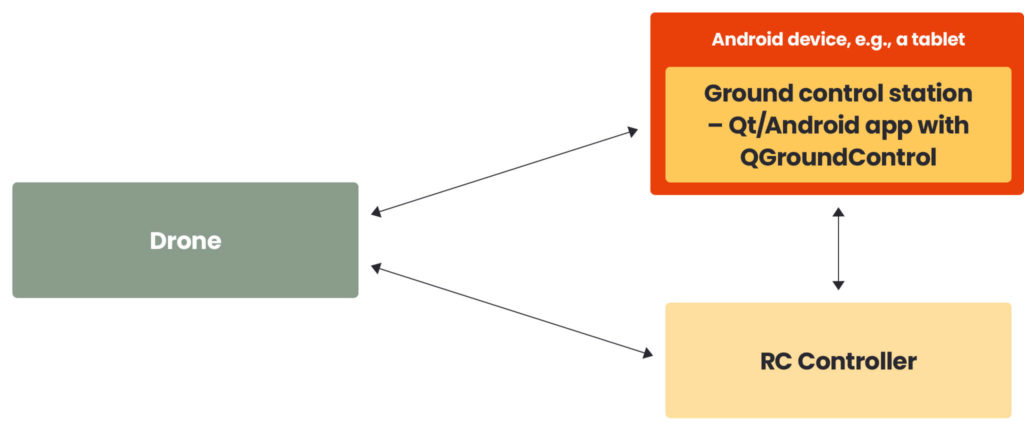

A good alternative is to implement a ground control station as an application on popular mobile operating systems such as Android or iOS. Devices running these systems are ubiquitous, and users can run the GCS on any supported hardware.

Ready-made solutions are already available in the market and can be modified to support more advanced use cases. An example is QGroundControl, developed in Qt. QGroundControl implements the most popular communication protocol, MAVLink, and supports autopilots like PX4.

In such a scenario, it seems reasonable to leverage an existing, well-documented solution that allows for customisation according to specific needs. In that case, the diagram would look like this:

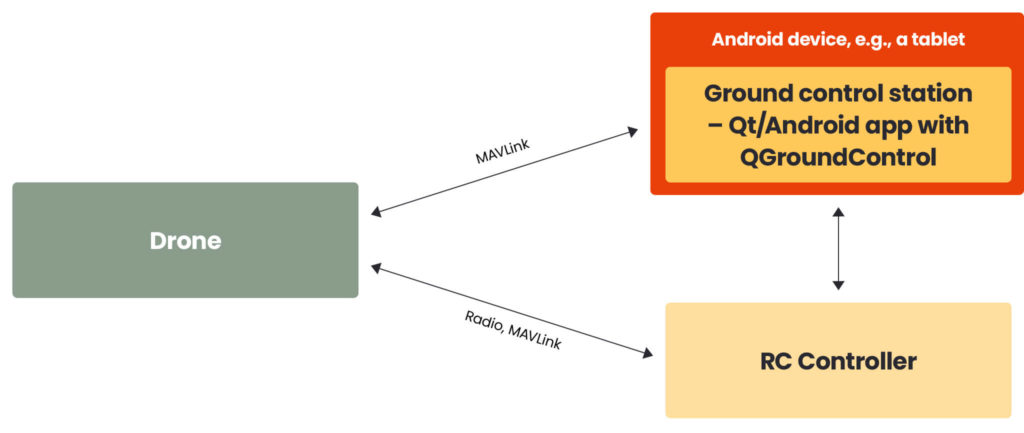

But some essential parts are still missing. We must ensure real-time communication between the GCS and the drone in both directions. This is where we introduce MAVLink.

MAVLink protocol

The MAVLink protocol provides two-way communication between the ground control station and the drone, as well as between the drone’s onboard components. It combines two design patterns for message handling: publish-subscribe, used for data streams such as telemetry, and point-to-point, used for configuration sub-protocols such as mission or parameter transfer. Messages are defined in XML files, and a standardised set is readily available. Users can also define a custom set of messages, referred to as a MAVLink dialect, but for basic use cases the standard set is typically sufficient. Both the UAV and the GCS must use the same protocol version and message definitions.
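To show what travels over the link, here is a minimal sketch that packs and unpacks a MAVLink 2 `HEARTBEAT` frame by hand, using only the Python standard library. It is for illustration only: a real project would normally use a generated MAVLink library. The helper names are my own, and message signing is left out.

```python
import struct

STX_V2 = 0xFD                  # start-of-frame marker for MAVLink 2
HEARTBEAT_ID = 0               # message id of HEARTBEAT in the common message set
HEARTBEAT_CRC_EXTRA = 50       # per-message seed derived from the XML definition
HEARTBEAT_FORMAT = "<IBBBBB"   # custom_mode, type, autopilot, base_mode, system_status, mavlink_version


def x25_crc(data: bytes, crc: int = 0xFFFF) -> int:
    """CRC-16 checksum used by MAVLink (X.25 / MCRF4XX variant)."""
    for byte in data:
        tmp = (byte ^ crc) & 0xFF
        tmp = (tmp ^ (tmp << 4)) & 0xFF
        crc = ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF
    return crc


def pack_frame(msg_id: int, payload: bytes, crc_extra: int,
               seq: int = 0, sys_id: int = 1, comp_id: int = 1) -> bytes:
    """Build an unsigned MAVLink 2 frame around an already serialised payload."""
    # MAVLink 2 drops trailing zero bytes of the payload but always keeps the first byte.
    trimmed = payload.rstrip(b"\x00") or payload[:1]
    header = struct.pack("<BBBBBB", len(trimmed), 0, 0, seq & 0xFF, sys_id, comp_id)
    header += msg_id.to_bytes(3, "little")
    crc = x25_crc(header + trimmed + bytes([crc_extra]))
    return bytes([STX_V2]) + header + trimmed + struct.pack("<H", crc)


def pack_heartbeat(vehicle_type: int, autopilot: int, base_mode: int,
                   custom_mode: int, system_status: int, seq: int = 0) -> bytes:
    """Serialise a HEARTBEAT message (protocol version field fixed to 3)."""
    payload = struct.pack(HEARTBEAT_FORMAT, custom_mode, vehicle_type, autopilot,
                          base_mode, system_status, 3)
    return pack_frame(HEARTBEAT_ID, payload, HEARTBEAT_CRC_EXTRA, seq=seq)


def unpack_heartbeat(frame: bytes) -> dict:
    """Validate an unsigned MAVLink 2 HEARTBEAT frame and return its fields."""
    if len(frame) < 12 or frame[0] != STX_V2:
        raise ValueError("not a MAVLink 2 frame")
    length = frame[1]
    if len(frame) != 12 + length:
        raise ValueError("unexpected frame length")
    (crc,) = struct.unpack("<H", frame[-2:])
    if x25_crc(frame[1:-2] + bytes([HEARTBEAT_CRC_EXTRA])) != crc:
        raise ValueError("checksum mismatch")
    if int.from_bytes(frame[7:10], "little") != HEARTBEAT_ID:
        raise ValueError("not a HEARTBEAT message")
    # Restore any trailing zero bytes that the sender truncated.
    payload = frame[10:10 + length].ljust(struct.calcsize(HEARTBEAT_FORMAT), b"\x00")
    custom_mode, vehicle_type, autopilot, base_mode, status, version = struct.unpack(
        HEARTBEAT_FORMAT, payload)
    return {"type": vehicle_type, "autopilot": autopilot, "base_mode": base_mode,
            "custom_mode": custom_mode, "system_status": status, "mavlink_version": version}
```

The `CRC_EXTRA` byte is what enforces matching message definitions on both ends: if the UAV and the GCS were built from different XML definitions of a message, the checksum fails and the frame is rejected.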

Of course, we must provide telemetry modules on both the drone and GCS to enable the communication link. Common telemetry hardware includes radio modules (e.g., RFD900x) or Wi-Fi modules.

Once the connection is established, the drone must respond to the messages it receives. At this point, we can cover some of the most important elements on board the drone.

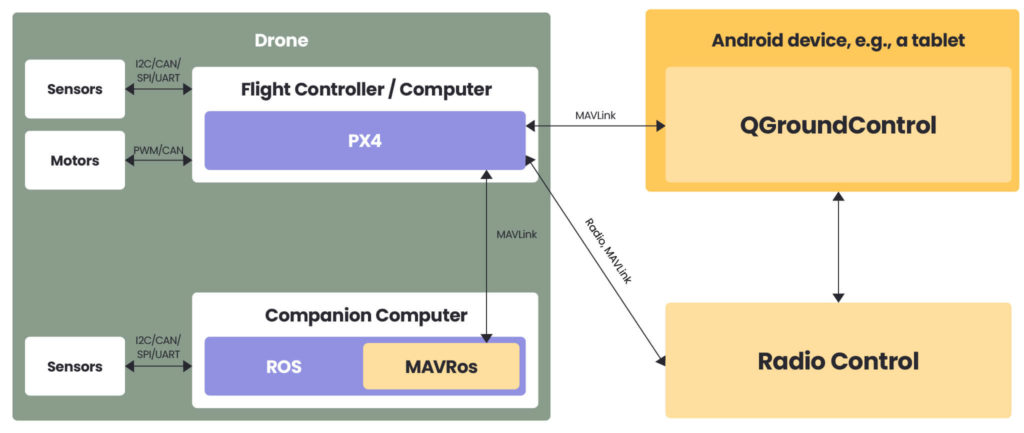

Flight controller and companion computer

A flight controller (FC) is like a drone’s brain. It serves as the central computing unit responsible for managing and controlling the various aspects of a drone’s flight. As the most critical component on board, it interprets user commands, processes sensor data, and generates control signals to adjust the device’s orientation, speed, and position.

The primary responsibilities of the FC are:

• drone orientation/velocity/position/odometry control,

• tracking flight and trajectory planning,

• sensor fusion,

• communication with peripherals,

• user interface integration,

• real-time processing.
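As an illustration of the sensor fusion item above, the sketch below uses a complementary filter to combine a gyroscope rate with an accelerometer-derived angle into a single pitch estimate. This is a deliberately simplified stand-in: production autopilots typically rely on more sophisticated estimators, such as an extended Kalman filter. The class name and the `alpha` weight are assumptions.

```python
class PitchEstimator:
    """Complementary filter: trust the gyro short-term and the accelerometer long-term."""

    def __init__(self, alpha: float = 0.98, initial_pitch_rad: float = 0.0):
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must be between 0 and 1")
        self.alpha = alpha
        self.pitch_rad = initial_pitch_rad

    def update(self, gyro_rate_rad_s: float, accel_pitch_rad: float, dt_s: float) -> float:
        """Blend the integrated gyro rate with the accelerometer angle and return the estimate."""
        gyro_estimate = self.pitch_rad + gyro_rate_rad_s * dt_s  # smooth, but drifts over time
        self.pitch_rad = self.alpha * gyro_estimate + (1.0 - self.alpha) * accel_pitch_rad
        return self.pitch_rad
```

With `alpha` close to 1, the estimate follows the gyroscope over short intervals, while the accelerometer slowly corrects the accumulated drift.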

For more advanced operations such as obstacle avoidance, collision prevention or safe landing, it may be necessary to delegate some of the work to another computer, called a companion computer (CC). The CC can handle jobs that need more processing power, such as analysing the camera output to stop the drone in front of an obstacle. The CC and FC typically communicate using the MAVLink protocol.
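As a sketch of the kind of decision a companion computer could make, the code below checks whether the drone needs to brake, given the distance to an obstacle reported by a perception pipeline. The function names and the default deceleration, reaction time and safety margin are hypothetical values chosen for illustration, not parameters of any particular autopilot.

```python
def stopping_distance_m(speed_m_s: float, max_decel_m_s2: float,
                        reaction_time_s: float = 0.2) -> float:
    """Distance travelled during the reaction time plus the braking distance v^2 / (2a)."""
    if max_decel_m_s2 <= 0:
        raise ValueError("deceleration must be positive")
    speed = max(0.0, speed_m_s)
    return speed * reaction_time_s + speed ** 2 / (2.0 * max_decel_m_s2)


def should_brake(obstacle_distance_m: float, speed_m_s: float,
                 max_decel_m_s2: float = 4.0, safety_margin_m: float = 1.0) -> bool:
    """Return True when the obstacle lies within the stopping distance plus a safety margin."""
    return obstacle_distance_m <= stopping_distance_m(speed_m_s, max_decel_m_s2) + safety_margin_m
```

For example, at 8 m/s with a 4 m/s² deceleration limit, the stopping distance is about 9.6 m, so an obstacle detected 10 m ahead already requires braking once the 1 m margin is included.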

Flying a drone without an FC is impossible, but how can the drone fly on autopilot? Autonomous drones have a standard autopilot, such as PX4, integrated into the flight controller.

PX4 autopilot

PX4 is a robust open-source autopilot. It’s designed to be versatile and can be used in various types of vehicles, from multicopters to fixed-wing aircraft and even ground vehicles.

PX4 provides a software stack for controlling the vehicle’s hardware, including sensors, motors, and more. The PX4 flight stack runs on the flight controller.

PX4 can communicate with both ROS 1 and ROS 2 over MAVLink, using the MAVROS package to bridge ROS topics to MAVLink messages. For ROS 2, however, the recommended approach is the PX4-ROS 2 bridge, an interface that maps PX4 uORB messages directly to ROS 2 DDS messages and types. It effectively gives ROS 2 workflows and nodes direct, real-time access to PX4 internals. To use this solution, the flight controller firmware should be upgraded to PX4 v1.14 or later.
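One practical consequence of accessing PX4 data directly is that coordinate frames have to be handled explicitly. PX4 expresses local positions in a north-east-down (NED) frame, whereas ROS conventionally uses east-north-up (ENU). The sketch below shows the axis swap for position vectors; the function names are my own, and orientation data needs an additional rotation that is not covered here.

```python
from typing import Tuple

Vector3 = Tuple[float, float, float]


def enu_to_ned(x_east: float, y_north: float, z_up: float) -> Vector3:
    """Convert an ENU position (ROS convention) to NED (PX4 convention)."""
    return (y_north, x_east, -z_up)


def ned_to_enu(x_north: float, y_east: float, z_down: float) -> Vector3:
    """Convert a NED position (PX4 convention) to ENU (ROS convention)."""
    return (y_east, x_north, -z_down)
```

For instance, a setpoint 10 m above the take-off point is `(0, 0, 10)` in ENU and `(0, 0, -10)` in NED, so forgetting the conversion would send the drone towards the ground instead of up.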

To sum up

These components (and many more) must work together to allow the user to interact with a drone. I hope this article introduced you to the essential elements of a drone so you know where to start and which parts to focus on as you begin your adventure with building unmanned device systems.

Are you building a drone solution?

Our robotics and human-machine interface experts are ready to support the development of your drone project. Find out more about our robotics services here.
