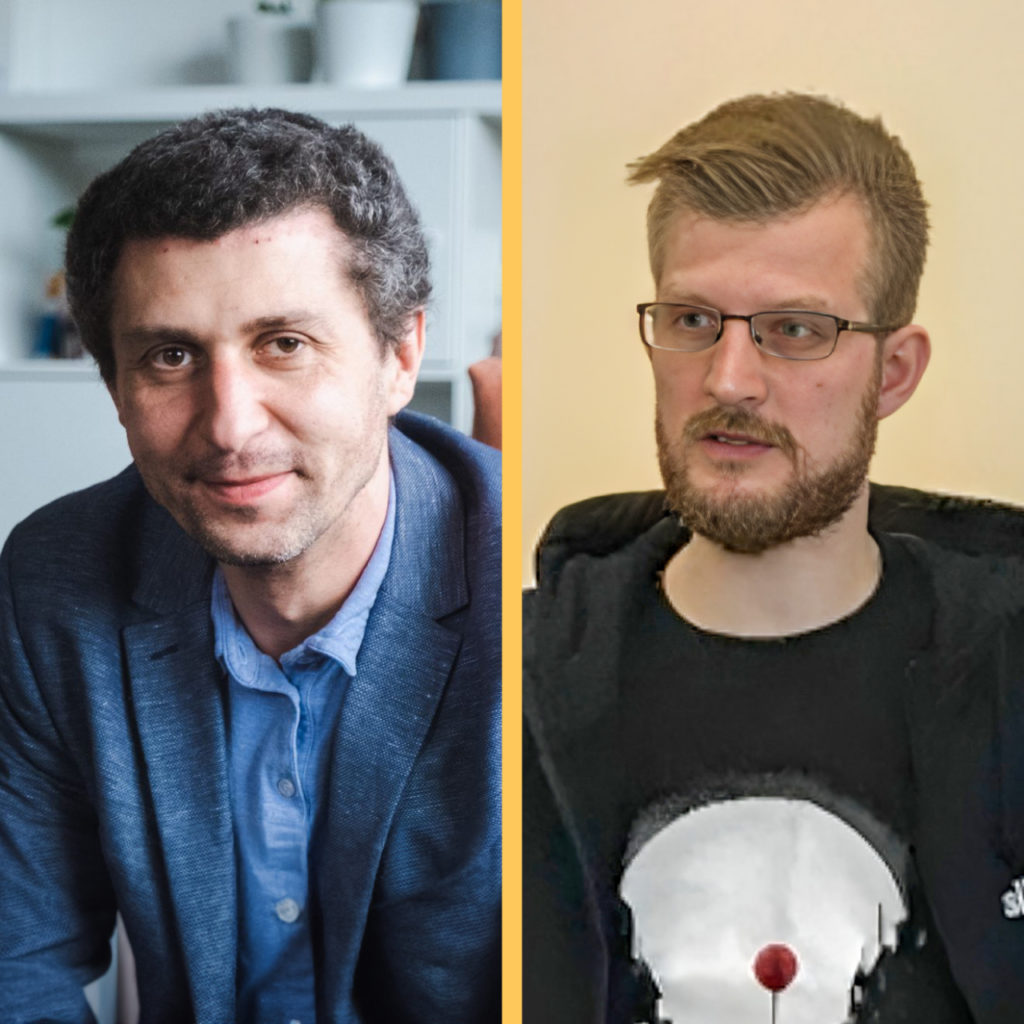

Grzegorz Trawiński: who can become a pentester?

Looking at my current team, I can see a crew of very different personalities. I can’t imagine any of them waking up one day and announcing that they would start hacking.

Check out our pentesting training at the Spyrosoft Academy.

I work with a former software developer who specialised in building solutions on the so-called LAMP stack (open-source building blocks: the Linux operating system, Apache HTTP Server, MySQL for database management and PHP).

A few people used to specialise in manual testing or automated QA. There’s a guy on our team who never completed a master’s or engineering degree, yet needs only 5 minutes before he starts talking to you about manipulating Windows processes and memory in order to create a new terminal session as NT AUTHORITY\SYSTEM. There’s also a former blue-teamer working for us. A while ago he was doing overnight shifts in the glow of 12 screens, all showing him infrastructure breach alerts at once.

I used to work as a .NET developer myself, and that experience makes things much easier for me in my current position. In the end, though, your background doesn’t matter too much.

What are penetration tests?

After all, penetration tests are about looking for and identifying security flaws in applications and IT systems. These flaws are often unintentional, introduced by accident right next to genuinely innocent functionality that works flawlessly.

Who can become a pentester?

Everyone. Who can become an expert in this field? Only the best ones.

No kidding. To become a pentester you need to be immeasurably patient, not give up easily and continuously expand your knowledge of various technologies.

When I think about pentesting, it’s hard not to imagine a victorious trio: a developer, a QA tester and a pentester. The first wants the functionality to work as planned, to perform in all use cases and to be as error-proof as possible. The second carefully verifies it from the end user’s perspective, checking whether the functionality does what it was supposed to do, whether it provides value and whether it works flawlessly. The third analyses where the first slipped up during implementation, where the second missed that mistake, and how it can be used to a hacker’s advantage.

How does this collaboration work in practice?

To give you an example, a CMS can display subpages based on the p parameter. The tester may have carefully checked that all subpages are displayed correctly in the app and then, together with the developer, decided to take a break. The pentester can then work out that the p parameter can be changed in a way that displays subpages nobody expected.
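To make this concrete, here is a minimal sketch of how such a parameter might go wrong. It assumes, which the example above does not state, that the value of p is joined to a content directory on disk. The directory name and both function names are hypothetical, and the check is purely lexical (it ignores symlinks), so treat it as an illustration rather than a complete defence.

```python
import posixpath

# Hypothetical content root; the real layout of the CMS is not known.
PAGES_DIR = "/var/www/cms/pages"


def resolve_naive(p: str) -> str:
    """Join the user-supplied p value to the content root with no checks."""
    return posixpath.normpath(posixpath.join(PAGES_DIR, p))


def resolve_checked(p: str) -> str:
    """Same lookup, but refuse anything that lands outside the content root."""
    target = resolve_naive(p)
    if posixpath.commonpath([PAGES_DIR, target]) != PAGES_DIR:
        raise ValueError(f"p escapes the content root: {p!r}")
    return target


print(resolve_naive("about"))                   # /var/www/cms/pages/about
print(resolve_naive("../../../../etc/passwd"))  # /etc/passwd
```

The tester’s happy-path values all stay inside the content root, which is why the naive version passes functional testing. Only a value the application never expected reveals the difference between the two functions.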

After some trial and error, they might find a non-trivial path traversal vulnerability that requires double-encoding the dots and the slash (%252E%252E%252F), simply because there is an additional nginx proxy in front of the app. It may turn out that we can read the contents of /etc/passwd but not /etc/shadow, because the access policy is configured correctly. A few coffees later, we spot an Easter egg left in one of the folders by a (probably) drunk admin: a shadow.bak file. The chilled champagne goes back in the fridge, though, because the strong password policy turns out to have been implemented flawlessly, and after a few days neither John the Ripper nor hashcat has cracked the password hashes. By not giving up and literally tearing the application apart, we finally reach the configuration file, and there it is: the 256-bit secret string needed to create a valid HMAC signature for a JWT. With it, the pentester can now forge a correctly signed JWT and use it to authenticate as any user of the app, including an admin.
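Two steps of this story are easy to sketch with the Python standard library alone. The first part shows why the payload has to be encoded twice when two layers each decode it once. The second shows why a leaked HMAC secret is enough to mint a token the server will accept. The story does not say which JWT algorithm is used, so HS256 is an assumption here, and the secret, the claim names and the helper functions are placeholders made up for illustration.

```python
import base64
import hashlib
import hmac
import json
from urllib.parse import unquote

# --- Double encoding: each layer URL-decodes the value once ---
payload = "%252E%252E%252F"
after_first_decode = unquote(payload)              # "%2E%2E%2F"
after_second_decode = unquote(after_first_decode)  # "../"

# --- HS256: whoever holds the secret can sign any claims ---
# Placeholder 256-bit secret, NOT a real value from any system.
DEMO_SECRET = b"\x01" * 32


def b64url(data: bytes) -> str:
    """Base64url without padding, as used in JWTs."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature for the given claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    mac = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256)
    return f"{signing_input}.{b64url(mac.digest())}"


def verify_hs256(token: str, secret: bytes) -> bool:
    """Recompute the signature the way a server would and compare."""
    signing_input, _, signature = token.rpartition(".")
    mac = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256)
    return hmac.compare_digest(b64url(mac.digest()), signature)


# Hypothetical claim names; a real app defines its own.
forged = sign_hs256({"sub": "admin"}, DEMO_SECRET)
print(verify_hs256(forged, DEMO_SECRET))  # True
```

The verification function cannot tell a token issued by the server from one signed by anyone else who knows the same secret, which is why the configuration file is the real prize in this scenario.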

I guess it’s nice to read about errors like this one, but they’re rather rare. Challenges such as Capture the Flag or Hack The Box can be equally misleading, because you’re in fact set up for success. These prepared systems and applications have errors planted in them, and you know it, so you’re primed to find them.

These rules do not apply to real-life applications, where no one has left bugs in intentionally and, what’s more, someone has taken care of securing the app. During our latest training, trainees received a virtual machine with a web app that had a few errors in it. Based on it, they had to run a pentest and prepare a report. Then they were tasked with pentesting a real-life web application, developed by one of our project teams, that was about to be hosted for one of our customers. I remember their questions: ‘Greg, how are we supposed to test it when it’s secured?’ Well, the same way as the first app, except that you might not find any vulnerabilities.

What are the characteristics of this position?

Working as a pentester is undoubtedly difficult and at times arduous, and it may seem like it brings no results. It requires specialised knowledge and technical skills. Attention to detail and the ability to connect the dots quickly are must-haves, not to mention out-of-the-box thinking. In the end, though, this job is exceptionally satisfying, especially when it results in identifying critical security failures, even if that happens only once every dozen pentests.

And the list of possible errors is still growing. I’m sure you’ve heard about SQL Injection or Buffer Overflow – but are you familiar with Reflected File Download, Web Cache Deception or HTTP Request Smuggling? Today the application is secure, and tomorrow – due to a new class of errors – not anymore.

I’d like to invite anyone who likes brain teasers or seemingly unsolvable problems, and anyone who’s passionate about cybersecurity, to take part in a pentesting course where I’ll share all I know about the most significant vulnerabilities found in modern web apps and where we’ll start building your pentesting toolkit.

About the author