MicroHMI architecture for Android Automotive

The modern cockpit has become one of the most complex software environments in any consumer product. A single infotainment stack must manage navigation, climate control, voice assistance, connectivity, entertainment, driver notifications, and safety features, responding within milliseconds and meeting strict reliability standards.

For years, many OEMs built these systems on custom Linux stacks.

They worked, but scaling them was painful. Integrating new functions or redesigning the UI often meant touching the entire system. Every change required long integration and validation cycles.

Android Automotive OS (AAOS) has changed this dynamic.

With its modular structure and mature developer ecosystem, AAOS allows carmakers to design infotainment and cluster systems that can evolve over time, rather than being frozen at start of production (SOP). It offers over-the-air updates, secure app isolation, access to Google services, and familiar Android tooling.

But modular software still needs modular design. That’s where MicroHMI architecture comes in.

Why does MicroHMI matter?

The automotive HMI used to be a monolith – one codebase, one development flow, one large team. As vehicles move towards software-defined architectures, this approach no longer scales. Dozens of features must be developed, tested, and maintained in parallel by distributed teams and suppliers.

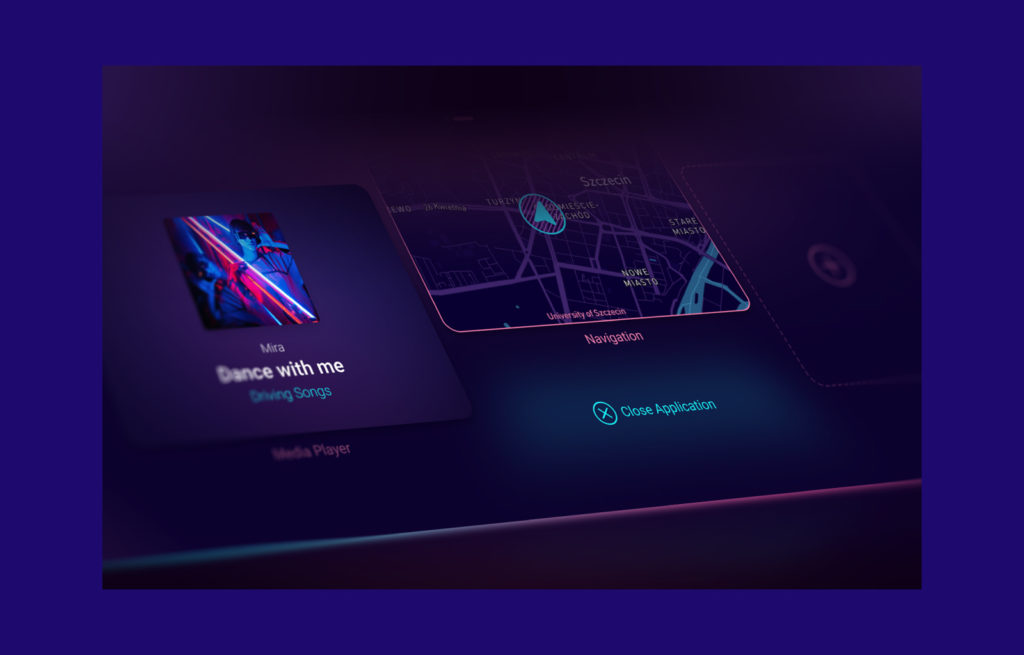

MicroHMI borrows the microservices idea from enterprise IT. Instead of one massive HMI, you create a set of lightweight, self-contained micro-applications, each responsible for a specific function: climate control, media, navigation, settings, or vehicle status.

Each MicroHMI module:

- Has a single responsibility and clear boundaries;

- Communicates through versioned interfaces or an event bus;

- Can be tested, updated, or replaced without touching the rest;

- And runs as its own process or container.

The benefits are immediate: faster prototyping, easier parallel work, and reduced regression risk. For large OEM programmes, it also improves supplier collaboration – different teams can own separate features while maintaining system consistency through shared APIs.
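To make the idea of versioned interfaces over an event bus more concrete, here is a minimal in-process sketch. The `EventBus` class, the topic name, and the payload fields are all hypothetical and chosen for illustration; a production cockpit would more likely use an IPC mechanism between separate processes.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Event:
    topic: str      # hypothetical topic name, e.g. "climate.temperature_changed"
    version: int    # major version of the payload contract
    payload: dict


class EventBus:
    """In-process stand-in for the bus that links MicroHMI modules."""

    def __init__(self) -> None:
        self._subscribers: Dict[Tuple[str, int], List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, version: int, handler: Callable[[Event], None]) -> None:
        # Subscribers bind to a topic and to one contract version.
        self._subscribers[(topic, version)].append(handler)

    def publish(self, event: Event) -> int:
        # Deliver only to handlers registered for this exact version and
        # return how many handlers received the event.
        handlers = self._subscribers.get((event.topic, event.version), [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
received = []
bus.subscribe("climate.temperature_changed", 1, received.append)

# A v1 event reaches the v1 subscriber; a v2 event with a changed payload does not.
delivered = bus.publish(Event("climate.temperature_changed", 1, {"zone": "driver", "celsius": 22.0}))
ignored = bus.publish(Event("climate.temperature_changed", 2, {"zone": "driver", "kelvin": 295.15}))
```

Because subscribers bind to a specific contract version, a module can start publishing a new payload format without breaking consumers that have not migrated yet.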

We believe that MicroHMI is how you conquer cockpit complexity.

Android Automotive as the natural host

AAOS maps naturally onto this architecture. Each function can be packaged as an independent Android app or service, signed and updated separately. The Vehicle HAL (VHAL) exposes signals from the car as vehicle properties, and Android’s permission model controls which modules can access them.

Android Automotive also enables:

- OTA updates for continuous improvement;

- Google Automotive Services integration (Assistant, Maps, Play);

- Multi-user profiles;

- Hardware abstraction across platforms such as Qualcomm SA8155P or other automotive SoCs.

For OEMs, that means faster time-to-market and a stable base for innovation. No need to reinvent frameworks for audio, navigation, or connectivity.

From modularity to adaptability

Breaking the HMI into micro-modules is only half the story.

The real opportunity lies in making the cockpit adaptive. Drivers now expect the same conversational, context-aware behaviour from their cars that they get from their phones and smart speakers.

This is where Large Language Models (LLMs) enter the picture.

LLMs in the car: the shift from commands to conversation

Early voice control required strict phrasing: “Call John Smith”, “Set temperature to twenty-two degrees.” LLMs and modern natural-language processing (NLP) change that entirely.

A driver can now say:

“I’m freezing – can you warm it up a bit?”

“Find a coffee shop on the way.”

“Turn the music down, then navigate home.”

The assistant parses intent, understands context, and can chain multiple actions. It no longer matches keywords – it interprets meaning.

Inside the cockpit, that means:

- Conversational UX: drivers speak naturally instead of memorising fixed commands.

- Context-aware behaviour: the system knows current destination, cabin temperature, and driver preferences.

- Task chaining: one utterance can control several functions.

Combined with touch and gesture input, LLM-driven voice makes the HMI multimodal, with all three modes feeding a single, seamless interaction model.

The multimodal pipeline

To make this possible, an automotive HMI integrates several layers of AI and software logic.

- Wake word and Voice Activity Detection (VAD): always-on but low-power listening for the activation phrase.

- Automatic Speech Recognition (ASR): converts speech to text.

- NLU or LLM interpretation: extracts intent, entities, and context.

- Dialogue manager: maintains state across turns.

- Action orchestrator: maps intent to a MicroHMI API (for example, Climate.setTemperature(zone, value)).

- Feedback: provides Text-to-Speech response and UI update on the relevant screen.

Within this architecture, the MicroHMI modules act as executors of actions. The voice system doesn’t need to know how to adjust the climate or play music; it just calls the right module interface.

This decoupling is what makes LLM integration scalable. The AI assistant can evolve independently of the underlying HMI features, and vice versa.
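The orchestration step can be sketched as a small registry that maps intent names to module methods. Everything below is an assumption made for illustration: the intent names, the slot format, and the `ClimateModule` and `MediaModule` classes are hypothetical stand-ins for real MicroHMI interfaces.

```python
from typing import Callable, Dict, List


class ClimateModule:
    """Hypothetical climate MicroHMI that stores the target per zone."""

    def __init__(self) -> None:
        self.targets: Dict[str, float] = {}

    def set_temperature(self, zone: str, value: float) -> str:
        self.targets[zone] = value
        return f"Setting {zone} to {value:g} degrees"


class MediaModule:
    """Hypothetical media MicroHMI with a volume range of 0 to 30."""

    def __init__(self) -> None:
        self.volume = 10

    def change_volume(self, delta: int) -> str:
        self.volume = max(0, min(30, self.volume + delta))
        return f"Volume is now {self.volume}"


class ActionOrchestrator:
    """Maps interpreted intents to module calls without knowing module internals."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[..., str]] = {}

    def register(self, intent: str, handler: Callable[..., str]) -> None:
        self._handlers[intent] = handler

    def execute(self, actions: List[dict]) -> List[str]:
        # Run chained actions in order and collect one reply per action.
        replies = []
        for action in actions:
            intent = action["intent"]
            handler = self._handlers.get(intent)
            if handler is None:
                replies.append(f"No module handles {intent}")
                continue
            replies.append(handler(**action["slots"]))
        return replies


climate, media = ClimateModule(), MediaModule()
orchestrator = ActionOrchestrator()
orchestrator.register("climate.set_temperature", climate.set_temperature)
orchestrator.register("media.change_volume", media.change_volume)

# One utterance, already interpreted upstream into two chained actions.
replies = orchestrator.execute([
    {"intent": "media.change_volume", "slots": {"delta": -3}},
    {"intent": "climate.set_temperature", "slots": {"zone": "driver", "value": 22.0}},
])
```

The orchestrator only knows intent names and slot dictionaries, so either side can change its implementation as long as the registered contract stays the same.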

Balancing cloud and edge AI

LLMs are large, but they don’t always need to run in the cloud. For privacy and latency reasons, many OEMs now deploy hybrid architectures:

- A lightweight on-device model handles common requests and wake-word detection

- A cloud model handles complex, open-domain queries when connectivity allows

This design ensures critical functions remain available offline, while advanced conversational capabilities are delivered when connected. It also gives OEMs flexibility to comply with regional data-protection laws such as GDPR.

Our engineers frequently combine this hybrid approach with context caching and intent confidence thresholds – the system decides dynamically whether to execute, ask for confirmation, or escalate to a cloud query.
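A confidence-threshold router of this kind might look like the sketch below. The threshold values and the offline fallback (asking the driver instead of escalating) are illustrative assumptions, not tuned figures from a real project.

```python
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"     # act immediately
    CONFIRM = "confirm"     # ask the driver before acting
    ESCALATE = "escalate"   # forward the utterance to the cloud model


def route(confidence: float, online: bool,
          execute_at: float = 0.85, confirm_at: float = 0.60) -> Decision:
    """Choose what to do with an on-device intent result.

    The thresholds are placeholder values for illustration only.
    """
    if confidence >= execute_at:
        return Decision.EXECUTE
    if confidence >= confirm_at:
        return Decision.CONFIRM
    if online:
        return Decision.ESCALATE
    # Offline and unsure: fall back to asking the driver.
    return Decision.CONFIRM


decisions = [
    route(0.93, online=True),
    route(0.70, online=True),
    route(0.30, online=True),
    route(0.30, online=False),
]
```

Here a low-confidence result is escalated only when connectivity is available; offline, the system asks the driver, which keeps behaviour predictable without a network.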

Functional safety and quality by design

No matter how intelligent an assistant becomes, the automotive environment demands reliability and compliance.

With this in mind, we embed functional safety and process maturity directly into the HMI lifecycle:

- ISO 26262 for functional safety (ASIL levels A–D)

- Automotive SPICE for process capability

- IEC 61508 for safety-related software and ISO 14971 for risk management

The Wavey platform integrates Squish GUI Tester in a Docker-based CI/CD pipeline, ensuring that every code change triggers unit, smoke, and regression tests. GitLab automation validates modules continuously – a practice essential for maintaining quality across distributed teams.

Explore the Wavey platform to learn how modular architecture, automated testing, and AI integration can transform your approach to automotive UX.

Automated testing isn’t just about efficiency. It’s about trust. When a car’s HMI controls critical functions, confidence in each release matters as much as innovation.

Designing for scalability and collaboration

A MicroHMI architecture supports true parallel development. Design and engineering teams can work independently yet synchronise through shared contracts.

For instance:

- The UI/UX team designs the visual flow for a navigation tile

- The development team implements it in Qt/QML

- The QA team writes Squish scripts that test the module in isolation

All three can operate simultaneously. Continuous integration merges their outputs into a testable whole each night.

Spyrosoft often uses this structure in complex cockpit programmes, allowing multiple suppliers or in-house teams to deliver features without constant dependency management.

This modularity also simplifies variant management. The same set of MicroHMIs can be combined differently across model lines – for example, a premium trim might add 3D visualisation via Unity, while an entry version reuses the same base modules without it.
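Variant composition can be expressed as data, not as code branches. In the sketch below, the module names, the trim names, and the `modules_for` helper are hypothetical; the point is only that each variant is described as a difference from a shared base set.

```python
from typing import List

# Shared base set of MicroHMI modules (names are illustrative).
BASE_MODULES = ("climate", "media", "navigation", "settings", "vehicle_status")

# Each variant lists only what it adds to or removes from the base set.
VARIANTS = {
    "entry": {"add": (), "remove": ()},
    "premium": {"add": ("visualisation_3d",), "remove": ()},
}


def modules_for(variant: str) -> List[str]:
    """Return the ordered module list for one model line."""
    spec = VARIANTS[variant]
    selected = [m for m in BASE_MODULES if m not in spec["remove"]]
    selected += [m for m in spec["add"] if m not in selected]
    return selected


entry = modules_for("entry")
premium = modules_for("premium")
```

With this shape, adding a new trim level means adding one entry to `VARIANTS`, and the base modules stay untouched.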

Voice, gesture, and touch: a multimodal experience

MicroHMI and AI together unlock truly multimodal interaction.

- Touch remains ideal for visual tasks: map exploration, detailed settings, media browsing

- Voice takes over during driving, when eyes-on-road is paramount

- Gestures add a natural, glance-free option for simple actions such as accepting a call or skipping a track

AI ties these modes together. Context recognition prevents conflicts – for instance, the system pauses gesture input when it detects voice interaction to avoid ambiguity.
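The gesture-pausing rule can be sketched as a small arbiter. The two-second hold window and the `ModalityArbiter` name are assumptions for illustration; timestamps are passed in explicitly so the behaviour is easy to test.

```python
class ModalityArbiter:
    """Suppresses gesture input for a short window after voice activity."""

    def __init__(self, voice_hold_s: float = 2.0) -> None:
        self._hold = voice_hold_s
        self._voice_until = float("-inf")

    def on_voice_activity(self, now: float) -> None:
        # Extend the window during which gestures are ignored.
        self._voice_until = now + self._hold

    def accept(self, modality: str, now: float) -> bool:
        # Touch and voice are always accepted; gestures only outside the window.
        if modality == "gesture" and now < self._voice_until:
            return False
        return True


arbiter = ModalityArbiter()
before_voice = arbiter.accept("gesture", now=0.0)
arbiter.on_voice_activity(now=1.0)
during_voice = arbiter.accept("gesture", now=2.0)
touch_during_voice = arbiter.accept("touch", now=2.0)
after_voice = arbiter.accept("gesture", now=3.5)
```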

This interplay creates an experience closer to human conversation than to computer control. It’s not just another feature. It’s a step towards cognitive interaction design: the car adapts to the driver, not the other way round.

Context management and personalisation

An effective in-car assistant remembers context across four layers:

- Session: what was said in this interaction.

- User: personal preferences and habits.

- Vehicle: current state – speed, temperature, route.

- Application: which HMI module is active.

Combining these layers allows natural follow-ups. A driver can say, “Navigate to the nearest charger”, then immediately, “Avoid motorways”, and the system understands.
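One way to model the four layers is a chained lookup in which more specific layers shadow more general ones. The sketch below uses Python's `collections.ChainMap`; the lookup order, the intent names, and the keys are illustrative assumptions.

```python
from collections import ChainMap

# One dictionary per layer (all keys are illustrative).
user = {"temperature_unit": "celsius", "avoid_motorways": False}
vehicle = {"speed_kmh": 87, "cabin_celsius": 19.5}
application = {"active_module": "navigation"}
session = {}

# Lookups try session first, then application, vehicle, and user.
# Writes through the ChainMap would land in the first map (session).
context = ChainMap(session, application, vehicle, user)


def handle(intent: str, slots: dict) -> dict:
    """Update the session layer and return the route currently being planned."""
    if intent == "navigation.route":
        session["pending_route"] = {
            "destination": slots["destination"],
            "avoid_motorways": context["avoid_motorways"],  # user default
        }
    elif intent == "navigation.avoid" and "pending_route" in context:
        # A follow-up refines the pending route instead of starting a new request.
        session["pending_route"] = {
            **context["pending_route"],
            "avoid_" + slots["feature"]: True,
        }
    return context.get("pending_route", {})


first = handle("navigation.route", {"destination": "nearest charger"})
follow_up = handle("navigation.avoid", {"feature": "motorways"})
```

The follow-up changes only the session layer, so the stored user preference is left as it was for future trips.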

To maintain privacy, sensitive context is stored locally and synchronised selectively. A local dialogue manager filters what is shared with the cloud, anonymising identifiers where possible.
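A simple form of such a filter is an allow-list combined with hashed identifiers. The field names and the `prepare_for_cloud` helper are hypothetical, and a salted hash is strictly pseudonymisation, not full anonymisation, so treat this as a sketch under those assumptions.

```python
import hashlib

# Fields that may leave the vehicle unchanged (illustrative names).
CLOUD_ALLOWED = {"utterance", "locale", "active_module"}
# Fields that may leave the vehicle only as a salted hash.
PSEUDONYMISED = {"user_id"}


def prepare_for_cloud(context: dict, salt: bytes) -> dict:
    """Return only the fields that are allowed to be sent to the cloud."""
    outgoing = {}
    for key, value in context.items():
        if key in CLOUD_ALLOWED:
            outgoing[key] = value
        elif key in PSEUDONYMISED:
            digest = hashlib.sha256(salt + str(value).encode("utf-8")).hexdigest()
            outgoing[key] = digest[:16]
        # Anything else (addresses, contacts, raw audio) stays local.
    return outgoing


local_context = {
    "utterance": "find a coffee shop on the way",
    "locale": "en-GB",
    "user_id": "driver-42",
    "home_address": "1 Example Street",
}
payload = prepare_for_cloud(local_context, salt=b"per-vehicle-secret")
```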

The goal is clear: make the car helpful without being intrusive.

Performance and optimisation

Real-time responsiveness is crucial. LLMs and voice models must fit within the strict latency budget of a moving vehicle.

Optimisation techniques include:

- Model quantisation and distillation to reduce size;

- GPU or NPU acceleration on automotive SoCs;

- Asynchronous pipelines to keep UI threads free;

- Dynamic throttling to balance performance and energy use.

The aim is for the system to acknowledge a command within about half a second: fast enough to feel instantaneous, without compromising the reliability that safety-critical contexts demand.

To verify this, we monitor latency and success rates as key UX metrics alongside traditional KPIs like frame rate or boot time.
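As an illustration of tracking these metrics against a budget, the sketch below records per-command latency and outcome, then reports a nearest-rank 95th percentile and a success rate. The `VoiceMetrics` class is hypothetical, and the 500 ms default simply mirrors the half-second target mentioned above.

```python
class VoiceMetrics:
    """Collects acknowledgement latency and success per voice command."""

    def __init__(self, budget_ms: float = 500.0) -> None:
        self.budget_ms = budget_ms
        self._latencies = []
        self._successes = 0

    def record(self, latency_ms: float, success: bool) -> None:
        self._latencies.append(latency_ms)
        self._successes += int(success)

    def p95_ms(self) -> float:
        if not self._latencies:
            raise ValueError("no samples recorded")
        ordered = sorted(self._latencies)
        # Nearest-rank percentile: ceil(0.95 * n), computed with integers.
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def success_rate(self) -> float:
        if not self._latencies:
            raise ValueError("no samples recorded")
        return self._successes / len(self._latencies)

    def within_budget(self) -> bool:
        return self.p95_ms() <= self.budget_ms


metrics = VoiceMetrics()
# Twenty synthetic samples from 30 ms to 600 ms; only the slowest one fails.
for i in range(1, 21):
    metrics.record(latency_ms=i * 30, success=(i != 20))
```

Looking at a high percentile instead of the mean keeps occasional slow responses visible, and those are what drivers tend to notice.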

Security, safety and user trust

Integrating AI in a vehicle raises natural questions about safety and data handling. Spyrosoft designs HMIs to comply with privacy regulations and driver-distraction standards from the start.

Best practices include:

- Processing audio locally wherever possible;

- Requiring confirmation for critical actions;

- Providing clear visual indicators when the microphone is active;

- Allowing users to mute the microphone and delete their data;

- Following UNECE R155 (cybersecurity) and R156 (software update) regulations.

Building trust also means designing transparent behaviour. If the system mishears, it asks. If a request seems unsafe, it politely declines.
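The confirm-or-decline behaviour can be sketched as a policy check that runs before any action is executed. The intent names, the two policy sets, and the messages below are assumptions for illustration; a real policy would come from the OEM's safety and distraction requirements.

```python
from dataclasses import dataclass

# Illustrative policy sets; real lists would come from safety requirements.
CRITICAL_INTENTS = {"doors.unlock", "data.delete_profile"}
BLOCKED_WHILE_MOVING = {"media.play_video", "settings.factory_reset"}


@dataclass(frozen=True)
class Verdict:
    allowed: bool
    needs_confirmation: bool
    message: str


def review(intent: str, speed_kmh: float, confirmed: bool = False) -> Verdict:
    """Decide whether an action may run, needs confirmation, or is declined."""
    if speed_kmh > 0 and intent in BLOCKED_WHILE_MOVING:
        return Verdict(False, False, "Sorry, that isn't available while driving.")
    if intent in CRITICAL_INTENTS and not confirmed:
        return Verdict(False, True, "Please confirm to continue.")
    return Verdict(True, False, "OK")


declined = review("media.play_video", speed_kmh=60)
pending = review("doors.unlock", speed_kmh=0)
approved = review("doors.unlock", speed_kmh=0, confirmed=True)
routine = review("media.change_volume", speed_kmh=60)
```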

This isn’t only good UX. It’s compliance in action.

From proof of concept to production

At Spyrosoft, we put these ideas into practice with Wavey, our in-house gesture-controlled IVI cockpit built on a Qt-based MicroHMI architecture for Android Automotive.

Each feature – navigation, media, HVAC – exists as a standalone micro-application. Automated Squish tests validate every build in Docker containers. Gesture and voice inputs feed into the same backend APIs, demonstrating the modular design’s flexibility.

Wavey shows how quickly a production-ready cockpit can be assembled when architecture, AI and testing are aligned. The result is a ready-to-deploy automotive IVI and cluster platform that’s both functional and brandable.

Common pitfalls and how to avoid them

When adopting MicroHMI and LLM-based interaction, teams often face recurring challenges:

- Over-reliance on the cloud: always provide offline fallbacks.

- Unclear module boundaries: define ownership and interfaces early.

- Lack of automated testing: every module should have its own CI pipeline.

- Ignoring real-world noise: test voice and gesture in actual cabin conditions.

- Privacy by afterthought: bake data-protection design into the first sprint.

Spyrosoft’s experience across OEM and Tier-1 projects shows that early architectural discipline prevents months of rework later.

The road ahead

As vehicles become truly software-defined, the cockpit will be the user’s primary touchpoint, a fusion of safety system, information hub, and digital companion.

MicroHMI architecture provides the structure.

LLMs and AI provide the intelligence.

Android Automotive provides the ecosystem.

Together, they enable HMIs that are modular, adaptive, and continuously improving – essential traits for next-generation vehicles.

Working with us

Delivering such systems requires more than technology. It takes experience.

Our teams combine deep HMI engineering expertise with a practical understanding of automotive safety, testing, and AI integration.

Our competencies include:

- Qt/QML and C++ HMI development;

- Android Automotive OS integration;

- Squish and CI/CD automation;

- Functional safety and process compliance (ISO 26262, ASPICE);

- On-device and cloud AI / LLM integration;

- Gesture and voice multimodal UX design.

Whether you’re developing a new cockpit from scratch or enhancing an existing platform, we can help you:

- Define a scalable MicroHMI architecture;

- Integrate voice and AI assistants safely;

- Automate testing and compliance;

- Accelerate time-to-market with our ready-made frameworks.

Let’s talk about your next cockpit

Spyrosoft’s HMI development services empower OEMs and Tier-1s to design, test and launch intelligent, brandable in-vehicle systems built for the software-defined era.

Have a look at our HMI solutions or get in touch with our HMI Director and discuss your HMI ideas.
