EU AI Act: what changes does it bring to the chemical industry?

The European Union recently introduced a comprehensive regulatory framework for artificial intelligence, the AI Act. The regulation aims to ensure that AI technologies are safe, transparent, and fair. For companies involved in chemical production, certain AI systems are classified as “high-risk” and require specific measures to meet the requirements of the AI Act. This article will help you understand which AI applications fall under the high-risk category, why they are considered high-risk, and how to manage them effectively.

What is the EU Artificial Intelligence Act, and who does it affect?

The Artificial Intelligence Act (also known as the EU AI Act) is a European Union-wide legal framework that sets transparency and reporting obligations for AI systems placed on the EU market or affecting users in the EU. It applies to companies that place AI systems on the EU market or whose systems' outputs are used within the EU, regardless of where those systems are developed or deployed. The EU AI Act entered into force on 1 August 2024.

The aim of the EU Artificial Intelligence Act

The EU AI Act aims to create a comprehensive legal framework that regulates the development, deployment, and use of artificial intelligence (AI) in the European Union. It seeks to strike a balance between protecting citizens from the potential risks of AI and fostering innovation, while maintaining the EU’s global leadership in ethical technology regulation. Its goals include ensuring safety and fundamental rights, establishing trust in AI, promoting innovation, preventing fragmentation of AI regulation, ensuring accountability and transparency, and positioning the European Union as a leader in AI governance.

Four risk levels defined in the EU AI Act

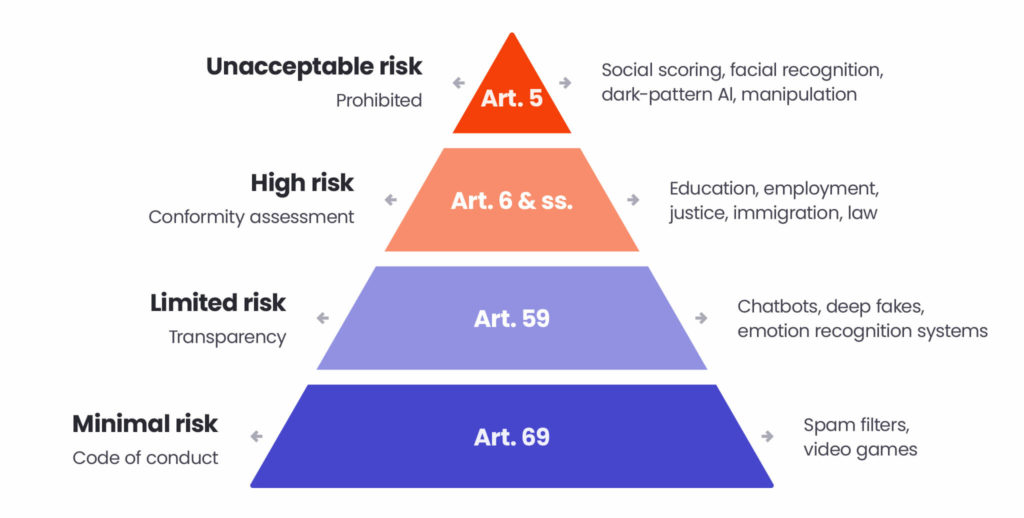

The EU AI Act establishes a risk-based approach to regulating AI systems, categorising them into four levels of risk based on the potential harm they pose to individuals or society:

- Unacceptable risk: AI applications that are banned because they are deemed to pose significant threats to safety, fundamental rights, or democratic values. Examples include AI systems used for social scoring by governments, manipulative AI that exploits people’s vulnerabilities, and real-time biometric identification in public spaces.

- High risk: AI systems in critical sectors that can significantly impact safety, fundamental rights, or vital services; for example, AI for medical devices, law enforcement systems, transport safety, or hiring algorithms. Those AI-based applications are subject to strict requirements such as rigorous risk assessments, transparency measures, human oversight, and data governance.

- Limited risk: AI systems that don’t pose direct risks to safety or fundamental rights but are still subject to transparency obligations. Users must be informed that they are interacting with artificial intelligence, for example, when using AI chatbots or AI-powered customer service systems.

- Minimal or no risk: Many AI applications don’t affect individuals’ rights or safety; they fall into this category and don’t require rigorous oversight or regulation. Examples include spam filters and AI used in video games.

Penalties for non-compliance with the EU AI Act

Non-compliance with the EU Artificial Intelligence Act can result in significant financial penalties, which are structured according to the severity of the violation:

- Up to 7% of global annual turnover or €35 million (whichever is higher) for engaging in prohibited AI practices.

- Up to 3% of global annual turnover or €15 million (whichever is higher) for most other violations.

- Up to 1% of global annual turnover or €7.5 million (whichever is higher) for providing incorrect, incomplete, or misleading information to notified bodies or national authorities.
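
To illustrate the “whichever is higher” rule, the ceiling for any tier is simply the larger of the percentage of turnover and the fixed amount. The sketch below is a rough illustration, not legal advice; the function name `penalty_ceiling` and the turnover figures are assumptions made for this example.

```python
def penalty_ceiling(turnover_eur: float, pct_of_turnover: float, fixed_eur: float) -> float:
    """Return the maximum fine for one tier: the higher of the two limits."""
    return max(turnover_eur * pct_of_turnover / 100, fixed_eur)


# Hypothetical company with EUR 2 billion global annual turnover,
# top tier (7% or EUR 35 million): the percentage is the higher limit.
large = penalty_ceiling(2_000_000_000, 7, 35_000_000)

# Hypothetical company with EUR 100 million global annual turnover,
# second tier (3% or EUR 15 million): the fixed amount is the higher limit.
small = penalty_ceiling(100_000_000, 3, 15_000_000)

print(f"{large:,.0f}")  # 140,000,000
print(f"{small:,.0f}")  # 15,000,000
```

For smaller companies the fixed amount usually sets the ceiling, while for large groups the percentage of turnover does.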

How is artificial intelligence used in the chemical industry?

Artificial intelligence is revolutionising industries, and chemical production is no exception. From optimising production schedules to predicting equipment maintenance needs, AI has become a powerful tool that enhances efficiency, safety, and quality control. However, not all AI applications are created equal – some carry more inherent risks, and these high-risk applications must be closely monitored to ensure compliance and safety.

In chemical production, AI can be used in several key areas:

- Process optimisation: AI algorithms can analyse chemical reactions to maximise output while minimising the use of raw materials and energy.

- Predictive maintenance: Machine learning models help predict equipment failures before they occur, reducing unplanned downtime and maintenance costs.

- Quality control: AI-powered systems can assess product quality in real time, reducing waste and improving consistency.

- Safety monitoring: AI systems can monitor worker safety, identify potential hazards, and alert personnel when intervention is required.

- R&D formulation development: AI can assist in designing new chemical formulations by analysing complex datasets to identify optimal combinations of ingredients and predict performance outcomes.

Assessing the AI risk level of chemical applications

The EU AI Act categorises certain AI applications as high-risk due to their potential impact on worker safety, environmental integrity, and operational reliability. In chemical production, high-risk AI applications include systems that directly influence:

- Worker safety: AI systems that monitor chemical processes or employee behaviour are considered high-risk, as errors could lead to unsafe working conditions.

- Environmental risk: AI models used to control emissions, waste, or hazardous materials must be accurate to prevent potential environmental harm.

- Operational reliability: AI systems that optimise complex chemical processes must be robust and subject to stringent oversight, as even minor errors could lead to production disruptions or dangerous incidents.

Example 1: old-fashioned software vs. AI systems in chemical production

To better understand the risks and benefits of AI, let’s compare a traditional, non-AI software system with a modern AI-driven solution.

Old-fashioned software: In traditional chemical production, process optimisation was handled by rule-based software. This software followed pre-defined parameters to manage production. For instance, if the temperature of a chemical reactor went beyond a certain threshold, the software would trigger an alert or shut down the process. While effective, these systems were rigid and could not adapt to changing conditions or predict issues before they arose.
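
A rule-based check of this kind can be sketched in a few lines. This is a minimal illustration only: the threshold values, the `Action` labels, and the function name are hypothetical, and real limits would come from process safety engineering.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    ALERT = "alert"
    SHUTDOWN = "shutdown"


# Hypothetical limits chosen for illustration only.
ALERT_ABOVE_C = 180.0
SHUTDOWN_ABOVE_C = 200.0


def rule_based_check(reactor_temp_c: float) -> Action:
    """Apply fixed, pre-defined rules; nothing is learned or predicted."""
    if reactor_temp_c > SHUTDOWN_ABOVE_C:
        return Action.SHUTDOWN
    if reactor_temp_c > ALERT_ABOVE_C:
        return Action.ALERT
    return Action.NONE


print(rule_based_check(175.0))  # Action.NONE
print(rule_based_check(185.0))  # Action.ALERT
print(rule_based_check(205.0))  # Action.SHUTDOWN
```

The behaviour is fully determined by the two constants, which is what makes such software predictable and easy to audit, and also why it cannot react before a threshold is crossed.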

AI-driven software: An AI-driven system, by contrast, can continuously learn from data and optimise processes in real time. For example, it can predict temperature fluctuations before they occur and make adjustments to prevent a shutdown. While this adaptability offers significant benefits, it also brings new risks, such as incorrect predictions or biased training data leading to unsafe conditions.

Initial risk assessment and compliance: Let’s assume a company implements an AI-driven predictive maintenance system that monitors the health of chemical reactors. The system uses historical data to predict maintenance needs, reducing downtime and improving efficiency. During the initial risk assessment, the system is classified as low-risk because it primarily assists maintenance personnel without directly controlling critical processes. The company conducts compliance checks, and the system passes without major issues.

Modification to high-risk system: Now, consider modifying this system: instead of merely predicting maintenance needs, the AI system is given direct control to shut down equipment when it detects a potential issue. This change significantly increases the risk level because the AI now has direct influence over critical operations. A malfunction or incorrect prediction could lead to an unsafe shutdown, resulting in potential safety hazards or production losses. As a result, the system is now classified as high-risk.
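
The reasoning in this example can be captured as a coarse internal triage rule that is re-run whenever a system is modified. The sketch below only mirrors the example above and is not a legal classification under the Act; the `AISystem` fields and the `triage` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    directly_controls_critical_process: bool


def triage(system: AISystem) -> str:
    """Coarse internal triage mirroring the example; not a legal classification."""
    if system.directly_controls_critical_process:
        return "high-risk"
    return "low-risk"


# The same system before and after the modification described above.
advisory = AISystem("predictive maintenance (advisory)", directly_controls_critical_process=False)
autonomous = AISystem("predictive maintenance (auto-shutdown)", directly_controls_critical_process=True)

print(triage(advisory))    # low-risk
print(triage(autonomous))  # high-risk
```

The point of the sketch is that the classification belongs to the system's current capabilities, so any change that grants direct control should trigger a new assessment.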

Example 2: quality control in chemical production

Old-fashioned software: Traditionally, quality control in chemical production was carried out through manual sampling and rule-based software that used fixed thresholds for detecting product deviations. Operators would manually inspect samples at regular intervals, and the software would flag any inconsistencies that exceeded the set parameters. Although this method worked, it was labour-intensive and sometimes too slow to prevent significant waste.

AI-driven software: An AI-based quality control system can analyse data from sensors in real time, detecting subtle deviations in product quality that traditional methods might miss. It can even predict which batches are at risk of failing quality standards and recommend adjustments to the process to ensure conformity. However, this comes with risks such as over-reliance on AI decisions or incorrect training data, leading to faulty predictions.

Initial risk assessment and compliance: Suppose the AI-driven quality control system assists operators by providing recommendations. It is initially classified as low-risk since human operators still make the final decisions. Compliance checks show no major issues, and the system is deployed.

Modification to high-risk system: Consider a scenario where the AI system is modified to reject or adjust batches automatically, without human intervention. This shifts responsibility from human operators to the AI, creating a high-risk situation. Incorrect AI decisions could lead to substantial financial losses or safety concerns if non-conforming products are mistakenly approved or safe products are rejected. The system now needs to be classified as high-risk.

Example 3: R&D in formulation development

Old-fashioned software: In traditional R&D for formulation development, researchers used rule-based methods and manual experimentation to determine the best combination of ingredients for a given product. This was a time-consuming process requiring a lot of trial and error, and often, the insights gained were limited by human interpretation of experimental data.

AI-driven software: An AI-based formulation development system can analyse vast datasets, including ingredient properties, experimental results, and performance metrics, to recommend the best combinations for optimal product performance. The system can rapidly identify trends and propose new formulations, significantly speeding up the R&D process. However, it also introduces risks such as the reliance on biased training data, which could lead to suboptimal or even hazardous formulations.

Initial risk assessment and compliance: Suppose the AI-driven R&D system assists researchers by providing formulation recommendations. Initially, it is classified as low-risk since human experts still make final decisions. Compliance checks show no significant issues, and the system is implemented.

Modification to unacceptable-risk system: Consider another modification: the AI system is altered to preferentially recommend products and ingredients from a single supplier, potentially due to limited training data or commercial agreements. This bias narrows the range of formulations considered and leads to suboptimal decisions. As a result, the AI may overlook better or safer alternatives, increasing the likelihood of hazardous outcomes and compromising product quality. Therefore, the system would need to be classified as an unacceptable risk.
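
One simple way to surface this kind of skew is to measure how often each supplier appears in the system's recommendations. The sketch below assumes a recommendation log that records the supplier of each suggested ingredient; the data shape, the supplier names, and the 50% review threshold are all hypothetical.

```python
from collections import Counter


def supplier_shares(recommended_suppliers: list[str]) -> dict[str, float]:
    """Return each supplier's share of all recommended ingredients."""
    counts = Counter(recommended_suppliers)
    total = sum(counts.values())
    return {supplier: n / total for supplier, n in counts.items()}


def dominant_suppliers(recommended_suppliers: list[str], max_share: float = 0.5) -> list[str]:
    """List suppliers whose share exceeds max_share (an assumed review threshold)."""
    shares = supplier_shares(recommended_suppliers)
    return [supplier for supplier, share in shares.items() if share > max_share]


# Hypothetical log: the supplier of each ingredient the system recommended.
log = ["SupplierA"] * 8 + ["SupplierB", "SupplierC"]
print(dominant_suppliers(log))  # ['SupplierA']
```

A flagged supplier is not proof of bias on its own, but it is a signal that the training data and recommendation logic deserve a closer look.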

Summary table of differences

Security standards for high-risk applications

To comply with the AI Act, companies must ensure that high-risk AI applications meet strict regulatory standards. This involves implementing a risk management system, understood as a continuous, iterative process planned and run throughout the entire lifecycle of the system, as well as maintaining documentation, testing for fairness, and mitigating bias. Furthermore, the AI Act emphasises the importance of human oversight in AI decision-making processes.

Key steps to manage AI risks in chemical production include:

- Risk assessment: Identify all AI systems used and evaluate their potential risks.

- Compliance checks: Ensure that AI systems meet EU regulatory requirements, particularly those classified as high-risk.

- Monitoring and testing: Continuously monitor and test AI models to ensure they function as intended and do not introduce unforeseen risks.

- Human oversight: Assign human operators to oversee high-risk AI decision-making. Human intervention is crucial to prevent potentially catastrophic outcomes, especially in safety-critical situations.
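
The human oversight step can be sketched as a gate that holds safety-critical AI recommendations until an operator signs off. This is a minimal sketch under stated assumptions: the `Recommendation` fields, the action labels, and the `decide` function are hypothetical and not taken from the Act or any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    batch_id: str
    action: str  # e.g. "reject" or "release" (hypothetical labels)
    safety_critical: bool


def decide(rec: Recommendation, operator_approval: Optional[bool]) -> str:
    """Apply a safety-critical AI recommendation only after human sign-off."""
    if not rec.safety_critical:
        return rec.action
    if operator_approval is None:
        return "pending human review"
    return rec.action if operator_approval else "overridden by operator"


rec = Recommendation("B-001", "reject", safety_critical=True)
print(decide(rec, None))   # pending human review
print(decide(rec, True))   # reject
print(decide(rec, False))  # overridden by operator
```

Keeping the operator's decision as an explicit input also leaves a record of who approved or overrode each recommendation, which helps with documentation.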

Get your company EU AI Act-compliant with Spyrosoft support

Spyrosoft is a consulting-led technology services provider specialising in software development and the application of Artificial Intelligence accelerators. Our domain specialists in the chemical industry will help you prepare your company to be compliant with the EU Artificial Intelligence Act, conducting activities in three stages:

- Comprehensive AI system registration. Assistance in creating a complete inventory of your AI systems, including both in-house and third-party solutions, which will enable quick risk categorisation once the AI Act is implemented in the national regulations of EU member states.

- AI risk management strategy. Guidance in developing or selecting governance workflows to identify, manage, and mitigate AI risks – a crucial requirement for high-risk AI systems under the EU AI Act.

- Technical documentation support. Selection or creation of appropriate tools for compliance tracking, ensuring AI systems meet all relevant requirements of the Act.

If you seek support in implementing the AI Act’s requirements in your company, contact us via the form below or learn more about our offer for chemical companies. We will answer your questions and guide you through the whole process.
