Head of AI, Tomasz Smolarczyk on the future of AI

A few months after publishing the first part of our interview with our Head of AI, Tomasz Smolarczyk, we're back to discuss sustainability and the future of AI.

How will Artificial Intelligence evolve in the next few years?

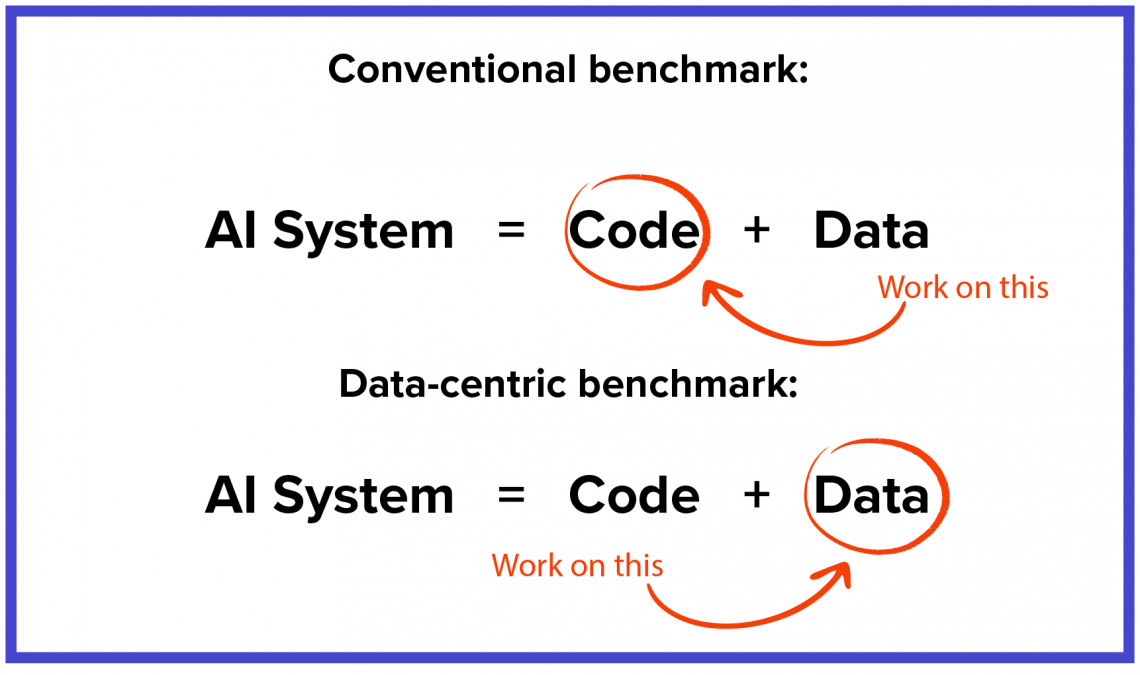

At the organisational level, there is a noticeable change in the approach to AI, with a greater emphasis on collecting and preparing data correctly so that it can be processed further. Companies now understand that AI is not only an algorithm but also the data behind it. To get better results, the focus is shifting from tuning algorithms and code to a more holistic approach: designing a high-quality data collection and processing system as a whole, which makes it easier to train better models. There is also growing pressure to hire for data engineering and MLOps roles, as organisations become increasingly aware that these roles have a huge impact on whether AI projects succeed.

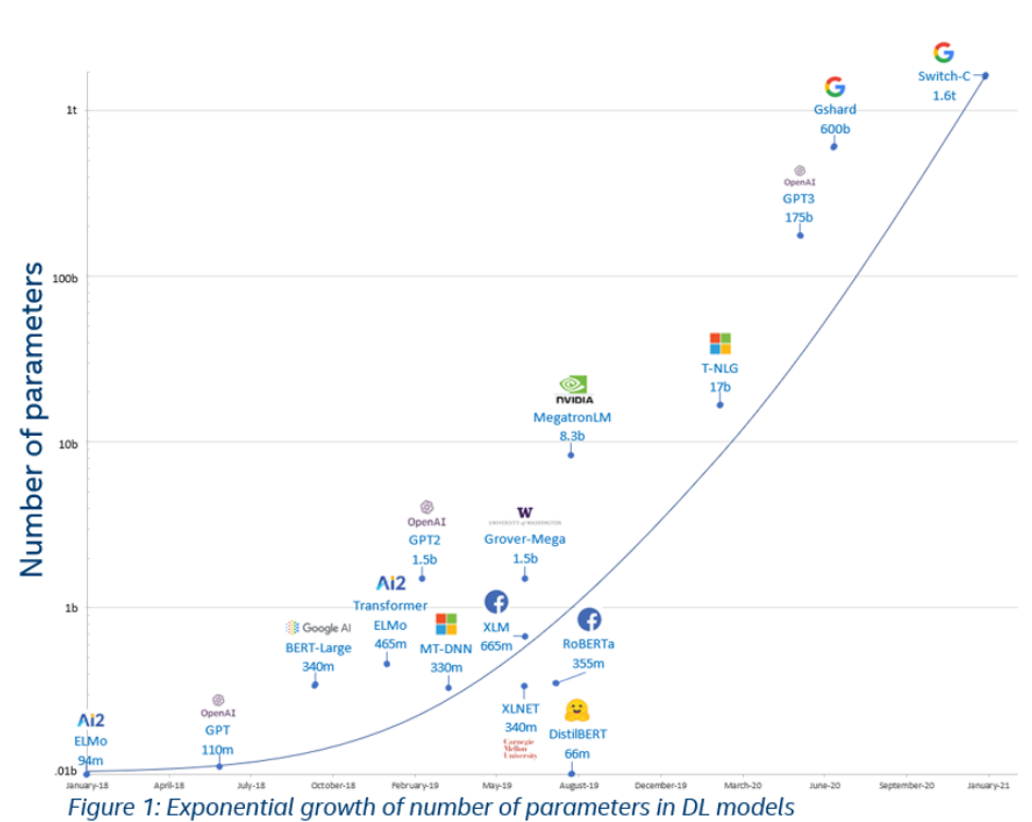

In research, there is a growing trend towards designing ever more advanced deep neural network architectures that use large data sets and powerful computing clusters. Just look at what is happening with AI language models: the number of parameters in these models is growing exponentially. Google has already presented a model based on its Switch Transformer architecture with 1.6 trillion parameters, roughly nine times more than the earlier large model GPT-3 from OpenAI, which has 175 billion parameters.

Another interesting trend is self-supervised learning, in which a model is trained on unlabelled examples, learning from signals derived from the data itself rather than from human-provided labels. In April 2021, Facebook published DINO, a self-supervised model that, on image-processing tasks, achieved more accurate predictions than would be possible with standard supervised learning techniques.
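To make the idea of learning without labels more concrete, here is a minimal Python sketch of the self-distillation objective popularised by DINO. It is a simplified illustration, not Facebook's implementation: a "student" is trained to match a "teacher" on two augmented views of the same unlabelled image, the teacher's output is centred and sharpened with a low temperature, and the teacher's weights follow an exponential moving average (EMA) of the student's. The function names, toy logits, temperatures and momentum values are illustrative assumptions.

```python
import math

def log_softmax(logits, temperature):
    """Numerically stable log-softmax of a list of logits at a given temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_norm for x in scaled]

def self_distillation_loss(student_logits, teacher_logits, center,
                           student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between the teacher's centred, sharpened distribution
    (one view of an image) and the student's distribution (another view).
    No human labels are used: the teacher's output acts as the target."""
    centred = [t - c for t, c in zip(teacher_logits, center)]
    teacher_probs = [math.exp(lp) for lp in log_softmax(centred, teacher_temp)]
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lq for p, lq in zip(teacher_probs, student_log_probs))

def ema(old, new, momentum):
    """Exponential moving average, used for both the centre and the teacher weights."""
    return [momentum * o + (1 - momentum) * n for o, n in zip(old, new)]

# Hypothetical outputs for two augmented views of the same unlabelled image.
teacher_view = [2.0, 0.5, -1.0]
student_view = [1.5, 0.7, -0.8]
center = [0.0, 0.0, 0.0]

loss = self_distillation_loss(student_view, teacher_view, center)
# The centre slowly tracks the teacher's outputs (helps avoid collapse to one class).
center = ema(center, teacher_view, momentum=0.9)
# Hypothetical weight vectors: the teacher moves slowly towards the student.
teacher_weights = ema([1.0, 1.0], [0.0, 2.0], momentum=0.996)
print(f"loss={loss:.4f}, center={center}, teacher_weights={teacher_weights}")
```

In a real system, only the student is updated by gradient descent on this loss; the teacher is never trained directly, which is what lets the pair learn useful features without labels.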

We can also see deep neural networks being used to solve problems that traditional methods could not. One of the most striking achievements in this area comes from DeepMind, which created AlphaFold 2, a system that predicts the 3D structure a protein folds into with an accuracy of around 90 on the Global Distance Test (GDT), a scale from 0 to 100. AlphaFold 2 recently won CASP, a competition that has been running since the 1990s, in which researchers from all over the world predict the structures of proteins from their sequences before the experimentally determined structures are published. A GDT score of around 90 is considered comparable to results obtained with experimental methods.
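For readers unfamiliar with the metric, GDT_TS (the GDT variant used in CASP assessments) averages the percentage of residues in a predicted structure that lie within 1, 2, 4 and 8 ångströms of their positions in the experimentally determined structure, so a score of 100 means every residue is within 1 Å. The Python sketch below assumes the two structures have already been superimposed and represents each residue by a single (x, y, z) coordinate; the official calculation also searches over many superpositions to maximise each percentage, which is omitted here. The coordinates are toy values.

```python
import math

CUTOFFS_ANGSTROM = (1.0, 2.0, 4.0, 8.0)

def gdt_ts(predicted, reference, cutoffs=CUTOFFS_ANGSTROM):
    """Simplified GDT_TS score (0-100).

    Assumes `predicted` and `reference` are equal-length lists of (x, y, z)
    residue coordinates that are ALREADY superimposed; the real metric also
    optimises the superposition for each cutoff."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("structures must be non-empty and the same length")
    distances = [math.dist(p, r) for p, r in zip(predicted, reference)]
    # Percentage of residues within each distance cutoff.
    percentages = [
        100.0 * sum(d <= cutoff for d in distances) / len(distances)
        for cutoff in cutoffs
    ]
    return sum(percentages) / len(percentages)

# Toy example: four residues, each displaced by 0.5, 1.5, 3.0 and 10.0 Angstrom.
reference = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
predicted = [(0.0, 0.5, 0.0), (3.8, 1.5, 0.0), (7.6, 3.0, 0.0), (11.4, 10.0, 0.0)]
print(gdt_ts(predicted, reference))  # 56.25
```

Here the percentages within 1, 2, 4 and 8 Å are 25, 50, 75 and 75, which average to 56.25.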

How is explainable AI evolving right now?

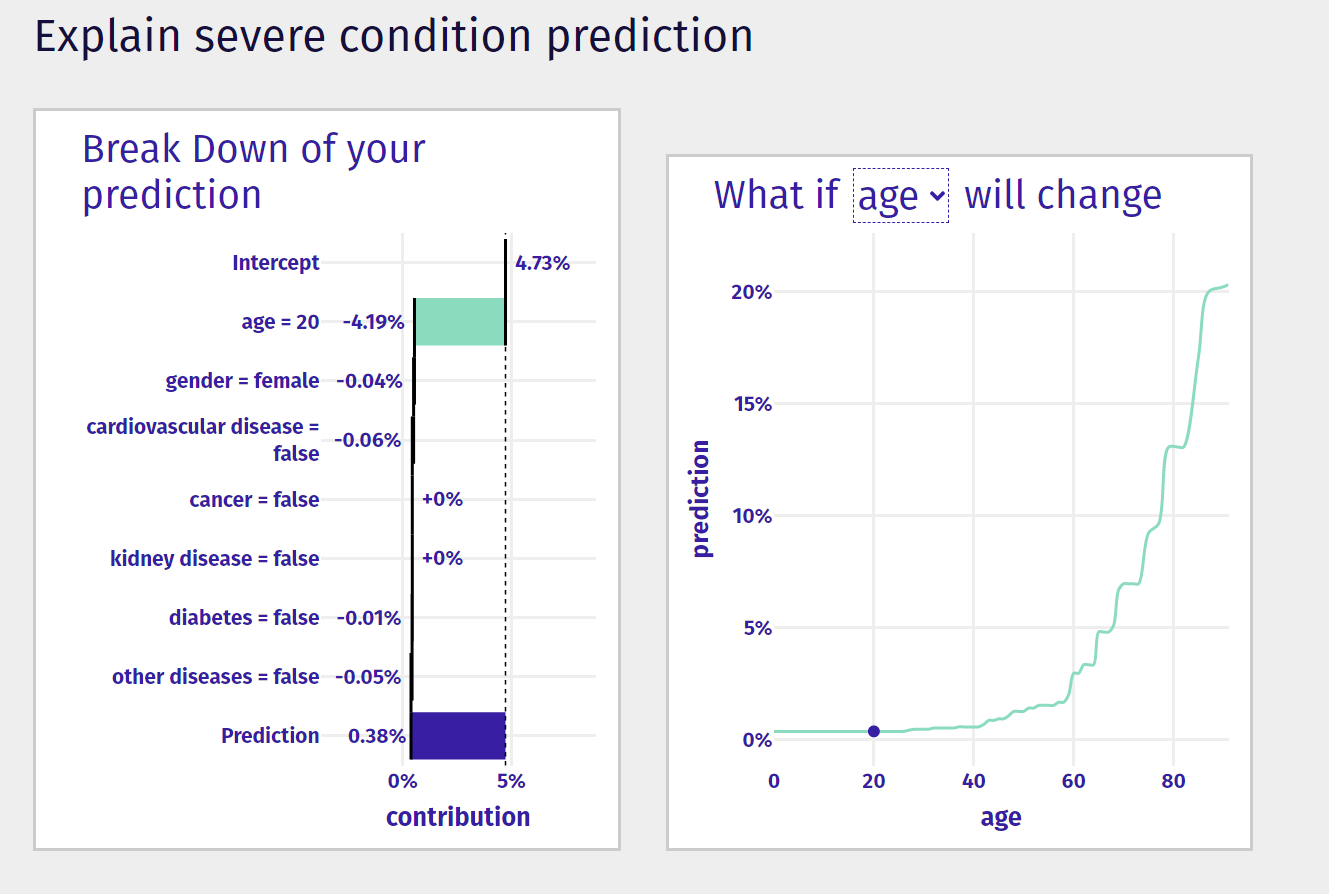

A perfect example of how explainable AI is evolving is a model from MI2 DataLab and MOCOS. On their website, you can check the model's prediction, based on historical data, of how likely a given patient is to become severely ill with or die from Covid-19. You enter your age and any accompanying diseases in a form, and you get a personalised prediction that is presented clearly, together with the factors that influenced the model's result.
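The "what impacts the result" part can be illustrated with a very simple additive explanation. The Python sketch below uses a logistic-regression risk model with made-up coefficients (hypothetical, not the MI2 DataLab/MOCOS model) and attributes the prediction's log-odds to each input relative to a hypothetical reference patient. For a model that is linear in the log-odds, these per-feature contributions add up exactly to the difference between the patient's and the reference patient's log-odds; explanation tools for more complex models approximate this kind of breakdown.

```python
import math

# Hypothetical coefficients, for illustration only (NOT a real Covid-19 risk model).
WEIGHTS = {"age": 0.06, "diabetes": 0.7, "heart_disease": 0.9}
INTERCEPT = -7.0
# Hypothetical reference patient that explanations are measured against.
REFERENCE = {"age": 40, "diabetes": 0, "heart_disease": 0}

def log_odds(patient):
    """Linear score of the logistic model."""
    return INTERCEPT + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)

def risk(patient):
    """Predicted probability via the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-log_odds(patient)))

def contributions(patient):
    """Per-feature contribution to the log-odds relative to REFERENCE.
    For this linear model they sum exactly to log_odds(patient) - log_odds(REFERENCE)."""
    return {f: WEIGHTS[f] * (patient[f] - REFERENCE[f]) for f in WEIGHTS}

patient = {"age": 72, "diabetes": 1, "heart_disease": 0}
print(f"predicted risk: {risk(patient):.1%}")
# List features from most to least influential.
for feature, value in sorted(contributions(patient).items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>14}: {value:+.2f} log-odds")
```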

These techniques are becoming increasingly important because they can expose bias in data and predictions. They are also evolving to diagnose and visualise bias better, since some existing AI models favour, or are prejudiced against, certain groups of people. Thanks to these bias-exposing techniques, we can adjust how models work and make them more universal and explainable.
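One of the simplest checks for this kind of bias compares how often a model makes a positive prediction for each group, sometimes called the demographic parity gap. The Python sketch below assumes binary predictions (1 = positive outcome) and one group label per example; the data is hypothetical. It is only one of several fairness metrics, and a gap alone does not prove a model is unfair, but it flags where to look.

```python
from collections import defaultdict

def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for prediction, group in zip(predictions, groups):
        counts[group][0] += prediction
        counts[group][1] += 1
    return {group: positives / total for group, (positives, total) in counts.items()}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical predictions (e.g. "shortlist this candidate") for two groups.
predictions = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_by_group(predictions, groups))  # {'a': 0.5, 'b': 0.25}
print(demographic_parity_gap(predictions, groups))  # 0.25
```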

One example of this bias is Google Translate, which translated the Turkish sentences meaning 'He/she is a nurse' and 'He/she is a doctor' into English as 'She is a nurse' and 'He is a doctor', and 'He/she is lazy' and 'He/she is hard-working' as 'She is lazy' and 'He is hard-working'. This mistranslation has since been fixed: the gender-neutral Turkish pronoun 'o' can now be rendered with an appropriate English pronoun in automatic translations.

The current pandemic has also been a testing period for AI: there has been an increase in the number of tools for predicting how the pandemic will spread in the long term.

At Spyrosoft, with financial support from the National Research and Development Centre, we have been working on such a project together with U+GEO, a spin-off company of the Natural Science University in Wroclaw. Using mobility data, we have been developing simulations of how infectious diseases spread. We can check how closing shopping centres or restaurants would affect the spread, and test various scenarios that can then inform decisions about which actions to take with less impact on the economy.
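The project described above uses mobility data; the sketch below is not that model. As a much simpler, textbook illustration of the same kind of what-if question, this Python code runs a discrete-time SIR (susceptible, infected, recovered) model and represents an intervention, such as closing shopping centres, as a drop in the transmission rate from a given day. The function name and all parameter values are hypothetical.

```python
def simulate_sir(population, initial_infected, beta, gamma, days,
                 intervention_day=None, beta_after=None):
    """Discrete-time SIR model with one step per day.

    beta  - daily transmission rate (contacts x infection probability)
    gamma - daily recovery rate (1 / infectious period in days)
    From `intervention_day` onwards the transmission rate becomes `beta_after`,
    a crude stand-in for measures such as closing shopping centres."""
    s, i, r = population - initial_infected, float(initial_infected), 0.0
    peak = i
    for day in range(days):
        rate = beta
        if intervention_day is not None and beta_after is not None and day >= intervention_day:
            rate = beta_after
        new_infections = rate * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        peak = max(peak, i)
    return {"susceptible": s, "infected": i, "recovered": r, "peak_infected": peak}

# Hypothetical scenario: 100,000 people, 10 initial cases, R0 = beta / gamma = 3.
baseline = simulate_sir(100_000, 10, beta=0.3, gamma=0.1, days=365)
# Same outbreak, but restrictions from day 30 cut transmission by two thirds.
restricted = simulate_sir(100_000, 10, beta=0.3, gamma=0.1, days=365,
                          intervention_day=30, beta_after=0.1)
print(f"peak without restrictions: {baseline['peak_infected']:,.0f}")
print(f"peak with restrictions:    {restricted['peak_infected']:,.0f}")
```

Comparing scenarios like this (different start days or strengths of restrictions) is the basic idea behind weighing health outcomes against economic cost; mobility-based models replace the single `beta` with contact patterns between places and people.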

Is the lack of computing power a blocker for developing AI?

Yes, it is. As text analysis models become more advanced, their technical requirements are growing and will keep growing. It's important to add that hardware is also evolving: smartphones now come with processing units dedicated to neural networks and prediction models for certain tasks. For example, the Apple A14 processor used in the iPhone 12 has dedicated neural network hardware called the 16-core Neural Engine, which can perform 11 trillion operations per second. It's an unimaginable number of operations! These technologies are now being built into smaller and smaller devices, so it's not only large server rooms that are getting upgraded.

How can AI help solve climate crisis issues, and how can it be used for limiting the use of resources?

AI can be, and already is, used to tackle climate crisis issues worldwide. One example is an algorithm that creates a video showing how you would look in certain clothes, so you don't have to order them from an online store to try them on. I saw a tool like that recently; unlike earlier ones, it produces high-quality videos and could easily be used in e-commerce. This would mean fewer online orders and fewer returns, and therefore fewer resources used and a more ecological approach.

The United Nations has set out 17 Sustainable Development Goals, one of which is tackling the climate crisis. Several AI-based projects have been set up under this goal; one example is a whale-tracking tool that uses machine learning models to automatically detect where these animals are located.

AI can also inspire action and be a force for good by using data to test hypotheses and break the myth that the world is becoming a worse place to live. Data-based books such as 'Enlightenment Now' by Steven Pinker and 'Factfulness' by Hans Rosling offer a unique perspective on the world, showing that people are living longer and in better conditions.

Is there still a focus on developing general AI?

Some research groups are still working on it, but I don't think general AI will be achieved in the next few years. The Covid-19 pandemic has not changed that, but on the other hand, nothing has happened so far that proves these groups wrong.

Is achieving general AI possible at all?

As I’ve mentioned above, we don’t have any proof that it is or is not possible. We only know that it will not happen as quickly as previously thought [laughs].

Some media were quick to proclaim OpenAI's GPT-3 model the Skynet of the future (a reference to the Terminator series), but so far the model has not shown any signs of basic language understanding. Its results are impressive, but they don't make general AI any more achievable. For example, a human understands that in the sentence 'The corner table wants another glass of water', it isn't the table itself (a piece of furniture) that wants water, but the person sitting at the table in the corner. Existing models are not able to reason this way.

About the author